Feedback for AI Systems: Importance and Improvement Techniques

In the fast-changing field of artificial intelligence, evaluating AI systems and feeding the results back into them is central to improving both how well they work and how users experience them. This article explains the role of feedback loops in machine learning and looks at different ways to collect the information that improves AI capabilities. A strong feedback process supports ongoing improvement and surfaces problems early, so that AI systems do their job well and meet user needs and expectations.

Contents:

- 1 AI Feedback System Statistics

- 2 Types of Feedback for AI Systems

- 3 Importance of Feedback in AI Systems

- 4 Techniques for Collecting Feedback

- 5 Improvement Techniques for AI Systems

- 6 Challenges in Implementing Feedback

- 7 Upcoming Trends in AI Feedback Systems

- 8 Frequently Asked Questions

- 8.1 What is the importance of feedback for AI systems?

- 8.2 How does feedback improve AI systems?

- 8.3 What are some techniques for providing feedback to AI systems?

- 8.4 Why is it important to provide accurate and immediate feedback to AI systems?

- 8.5 How can feedback be used to improve AI systems for specific tasks?

- 8.6 Can feedback be used to prevent bias in AI systems?

Definition and Scope

AI feedback includes different methods and tools that help improve machine learning models by using information gained from how users interact with systems and how the systems perform.

Key types of feedback in reinforcement learning include positive rewards, which reinforce correct actions, and negative rewards (penalties), which discourage poor ones.

In a game setting, an AI might earn points for completing a level (good) and lose points for actions that cause harm (bad).

Tools like OpenAI’s Gym (now maintained as Gymnasium by the Farama Foundation) offer simulated environments where developers can test and improve their models quickly.
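The game example can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not how any particular library works: the event names, the reward values in `REWARDS`, and the `step_size` are all hypothetical, and the update rule is a simple running estimate rather than a full reinforcement learning algorithm.

```python
# Hypothetical reward table: +1 for finishing a level, -1 for causing harm.
REWARDS = {"level_completed": 1.0, "caused_harm": -1.0}


def update_value(current_value: float, reward: float, step_size: float = 0.1) -> float:
    """Move a value estimate a fraction of the way toward the observed reward."""
    return current_value + step_size * (reward - current_value)


def run_episode(events, values=None, step_size=0.1):
    """Apply reward feedback for a sequence of (action, outcome) pairs."""
    values = dict(values or {})
    for action, outcome in events:
        reward = REWARDS[outcome]
        values[action] = update_value(values.get(action, 0.0), reward, step_size)
    return values


values = run_episode([
    ("jump", "level_completed"),
    ("jump", "level_completed"),
    ("attack_ally", "caused_harm"),
])
# "jump" now has a positive estimate and "attack_ally" a negative one.
print(values)
```

An agent that prefers actions with higher estimates will gradually favor the rewarded action and avoid the penalized one.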

Testing the model with real users provides essential feedback for improving algorithms and enhancing the system.

Significance of Feedback in AI Development

Feedback is important in AI development because it improves user satisfaction and makes operations more efficient, leading to more dependable results.

For example, Amazon uses customer reviews to improve how it suggests products. By analyzing user ratings and purchase history, they adjust their suggestions to better match individual preferences.

In the same way, services such as Netflix use what viewers watch, look up, and rate to regularly improve their content recommendations. The feedback process improves how users engage with the system and leads to more users continuing to use it, showing the significance of user feedback in creating successful AI systems.

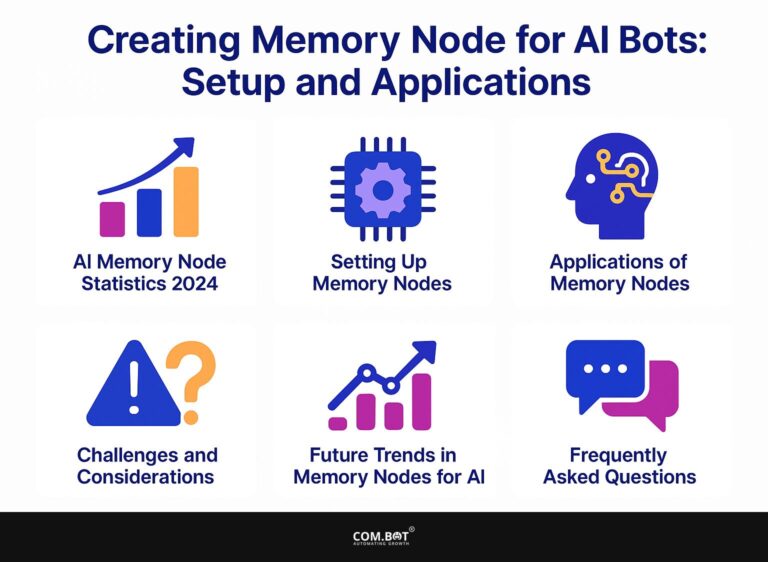

AI Feedback System Statistics

[Infographic: AI Feedback Analysis Benefits, with panels on AI penetration in tools, feedback tool impact, and market information]

These statistics show how AI tools support feedback systems across user experience research, decision-making, workflows, and market analysis. They illustrate the growing role of AI in feedback processes and its largely positive reception among researchers.

AI penetration in tools: 51% of UX researchers currently use AI tools, and 91% are open to expanding their use of AI, which suggests broad acceptance of AI’s potential to improve research outcomes.

Feedback tool impact: integrating AI into feedback systems is associated with 30% time saved in decision-making and a 40% reduction in operational tasks, easing the workload on researchers and streamlining processes.

Market information: sentiment analysis is reported to contribute significantly to customer satisfaction in 90% of cases, which reflects how well AI can evaluate user sentiment and support more positive customer interactions. AI-powered pre-fill options are also reported to raise survey completion rates by 80%, reducing respondent fatigue and increasing feedback quality.

Taken together, the figures indicate that AI makes feedback systems more efficient, improves satisfaction, and increases data quality. As researchers use AI more, feedback systems should keep improving, offering more detailed information and better support for decisions.

Types of Feedback for AI Systems

AI systems can improve through various feedback sources, including human input and automated processes, which work together to improve results.

Human Feedback

Feedback from individuals is important for AI systems. It is gathered from user interactions, surveys, and direct feedback, helping systems evolve based on real experiences.

To collect effective feedback, consider using Label Studio, an open-source data labeling tool that lets reviewers annotate and correct model outputs in one place.

For more targeted feedback, tools like SurveyMonkey can run focused surveys on specific aspects of the AI’s performance. Net Promoter Score (NPS) surveys offer a quick gauge of overall satisfaction.

Establishing metrics like response rate and average rating will help measure feedback effectiveness, ensuring the AI system continues to evolve with user needs.
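As a rough sketch of how these metrics might be computed from raw survey results, the snippet below uses the standard NPS definition (percentage of promoters scoring 9 or 10 minus percentage of detractors scoring 0 to 6). The sample scores and the survey counts are made-up illustrative data.

```python
from statistics import mean


def net_promoter_score(scores):
    """NPS on a 0-10 scale: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("at least one score is required")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


def response_rate(responses_received, surveys_sent):
    """Fraction of sent surveys that came back with an answer."""
    if surveys_sent <= 0:
        raise ValueError("surveys_sent must be positive")
    return responses_received / surveys_sent


# Illustrative data: 8 responses out of 40 surveys sent.
scores = [10, 9, 8, 7, 6, 3, 10, 9]
nps = net_promoter_score(scores)              # 4 promoters, 2 detractors -> 25.0
rate = response_rate(len(scores), 40)         # 0.2
average_rating = mean(scores)                 # 7.75
print(nps, rate, average_rating)
```

Tracking these three numbers per release makes it easier to see whether a model change actually moved user sentiment.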

Automated Feedback

Automated feedback systems, such as tools for assessing performance and spotting unusual patterns, allow AI systems to independently improve and evolve.

To set up automated feedback, use tools like Google Analytics to monitor how users interact with your platform and measure their engagement.

For example, define goals (called key events in Google Analytics 4) to track conversion rates and user interactions. Machine learning libraries such as TensorFlow can then be used to model data patterns and flag unusual behavior.

Case studies, such as those from Netflix, demonstrate significant improvements in content recommendations and user retention through these techniques.

By constantly reviewing feedback, these systems improve their algorithms, resulting in better experiences for users and improved performance.
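Spotting unusual patterns does not always require a machine learning framework. The sketch below is one simple approach, assuming a baseline window of normal values is available: it flags new measurements whose z-score against that baseline exceeds a threshold. The error-rate figures and the threshold of 3.0 are illustrative assumptions.

```python
from statistics import mean, stdev


def find_anomalies(baseline, new_values, threshold=3.0):
    """Return (index, value) pairs from new_values that deviate from the
    baseline mean by more than `threshold` baseline standard deviations."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A constant baseline gives no scale to measure deviation against.
        return []
    return [
        (i, v) for i, v in enumerate(new_values)
        if abs(v - mu) / sigma > threshold
    ]


# Illustrative daily error rates for a deployed model.
baseline_error_rates = [0.021, 0.019, 0.020, 0.022, 0.018, 0.020, 0.021]
todays_batches = [0.020, 0.095, 0.023]

anomalies = find_anomalies(baseline_error_rates, todays_batches)
print(anomalies)  # the 0.095 batch stands out
```

Flagged values can then be routed to a person for review or used to trigger retraining.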

Peer Review Mechanisms

Peer review in AI involves evaluations by multiple people, which helps improve models and find errors through different viewpoints.

Platforms like GitHub offer excellent tools for structuring these peer reviews. Contributors can use pull requests to propose modifications, while issues provide a way to give feedback on certain parts of the code.

Code review comments serve as a mechanism for collective learning, often including recommendations for best practices. Using continuous integration tools like Jenkins helps with automatic testing, which makes sure that new changes don’t cause mistakes.

This structured method increases the model’s accuracy and encourages responsibility and progress among developers.

Importance of Feedback in AI Systems

Feedback in AI systems is important to improve accuracy and reduce biases, making sure the results are reliable and fair.

Enhancing Model Accuracy

Feedback can greatly improve model accuracy. For example, incorporating user responses is sometimes credited with raising the predictive accuracy of recommendation algorithms by up to 30%, although the gain depends heavily on the system and the data.

To implement effective feedback loops, combine several strategies.

Start by collecting qualitative feedback from users; tools like SurveyMonkey or Google Forms can facilitate this process.

Next, analyze user interactions: A/B testing different algorithm outputs can reveal which adjustments lead to better performance.

Spotify improves its recommendation system by analyzing the songs users add to playlists and the tracks they skip.

Regularly refresh your model with collected data, ensuring updates happen often to align with what users like.
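To make the idea of implicit feedback concrete, the following sketch turns playlist adds and skips into per-track preference scores. The event names and weights are hypothetical choices for illustration only and do not describe Spotify's actual system.

```python
from collections import defaultdict

# Hypothetical weights: positive signals raise a score, skips lower it.
EVENT_WEIGHTS = {"playlist_add": 1.0, "full_play": 0.5, "skip": -0.5}


def preference_scores(events):
    """Sum weighted implicit-feedback events per track."""
    scores = defaultdict(float)
    for track_id, event_type in events:
        # Unknown event types contribute nothing.
        scores[track_id] += EVENT_WEIGHTS.get(event_type, 0.0)
    return dict(scores)


events = [
    ("track_a", "playlist_add"),
    ("track_a", "full_play"),
    ("track_b", "skip"),
    ("track_b", "skip"),
    ("track_c", "full_play"),
]
scores = preference_scores(events)
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked)  # ['track_a', 'track_c', 'track_b']
```

Scores like these could serve as training labels or as a re-ranking signal when the model is refreshed.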

Mitigating Bias and Errors

By implementing structured feedback loops, AI systems can reduce biases and errors, ensuring fairer outcomes in sensitive applications such as healthcare and finance.

For example, in healthcare, hospitals can collect feedback from diverse patient populations after AI-driven diagnoses. If the data shows discrepancies in treatment recommendations across demographic groups, teams can adjust algorithms accordingly.

A frequently cited example is IBM Watson for Oncology, which drew criticism that its treatment recommendations reflected the practices of the institution that trained it, and which relied on feedback from doctors to revise its cancer treatment advice.

In finance, banks can regularly audit loan decisions and ask for feedback from people who were denied loans. The findings can lead to updates that make credit scoring algorithms fairer.
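One simple way to check for the discrepancies described above is to compare outcome rates across groups. The sketch below computes per-group approval rates and the gap between the highest and lowest (sometimes called the demographic parity difference). The group labels and decisions are fabricated sample data, and a large gap is only a prompt for investigation, not proof of unfairness.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Compute the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group approval rates."""
    return max(rates.values()) - min(rates.values())


# Illustrative loan decisions for two hypothetical groups.
decisions = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)
rates = approval_rates(decisions)   # group_a: 0.8, group_b: 0.5
gap = parity_gap(rates)             # roughly 0.3
print(rates, round(gap, 2))
```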

Techniques for Collecting Feedback

Gathering helpful feedback is important for AI systems.

Methods include:

- User surveys

- Performance measurements

- Direct talks with users

User Surveys and Interviews

User surveys and interviews are essential methods for collecting detailed feedback, offering extensive information about user experiences and expectations.

To create useful user surveys, begin by outlining specific goals to identify the information you want to gather.

Use tools like SurveyMonkey, which offers templates and customizable questions, or Typeform for a more interactive approach.

After launching, review the data with the analysis features built into these platforms, for example by summarizing sentiment in the answers to open-ended questions.

Find patterns or trends showing where users often face problems. Focus on useful findings that can directly guide improvements in products or development of new features.

Regularly reviewing and improving your survey process can help you adjust to changing user needs.

Performance Metrics Analysis

Performance metrics analysis looks at data results to evaluate AI performance, helping organizations make informed choices for improvements.

Key metrics to monitor include:

- Accuracy rates, which indicate how often the AI’s predictions align with true outcomes

- Response times, reflecting operational efficiency

For instance, tools like Looker Studio (formerly Google Data Studio) can visualize these metrics, allowing teams to easily track trends over time.
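Before metrics can be charted they have to be computed. A minimal sketch for the two metrics listed above follows, using a nearest-rank percentile for response times; the predictions, labels, and latency values are invented sample data.

```python
import math


def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


predictions = ["spam", "ham", "spam", "ham", "ham"]
labels = ["spam", "ham", "ham", "ham", "ham"]
latencies_ms = [120, 95, 110, 480, 105, 98, 130, 102, 99, 101]

acc = accuracy(predictions, labels)     # 4 of 5 correct -> 0.8
p50 = percentile(latencies_ms, 50)      # 102
p95 = percentile(latencies_ms, 95)      # 480
print(acc, p50, p95)
```

Reporting a high percentile alongside the median helps expose slow outliers that an average would hide.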

Implementing A/B testing can help isolate variables that influence performance.

By regularly reviewing these metrics and revising their models, businesses can consistently improve AI solutions and keep them aligned with shifting business goals.
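When comparing two variants in an A/B test, it helps to check whether the observed difference could plausibly be noise. The sketch below applies a standard two-proportion z-test using only the standard library; the conversion counts are illustrative, and the choice of this particular test is an assumption rather than something the article prescribes.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two-sided p-value) for the difference in success rates B - A."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0 or all 1")
    z = (p_b - p_a) / se
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative data: variant A converts 200 of 2000 users, variant B 260 of 2000.
z, p_value = two_proportion_z_test(200, 2000, 260, 2000)
print(round(z, 2), round(p_value, 4))
```

A small p-value suggests the difference between variants is unlikely to be due to chance alone, though sample size and test duration still need to be planned in advance.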

Improvement Techniques for AI Systems

Methods for enhancing AI systems emphasize iterative learning and the use of varied data sources to improve model performance and reliability.

Iterative Learning Processes

Iterative learning uses feedback to refine AI algorithms continuously, so that each round of changes improves on the last.

For example, companies such as Google and Netflix have used feedback loops effectively to improve user experience.

Google’s search system improves result accuracy by checking how often users click on links and how long they remain on a page. Similarly, Netflix analyzes viewer preferences and feedback to adjust recommendation algorithms, ensuring more relevant content is suggested.

To support these improvements, businesses can use tools such as Jupyter Notebooks for analyzing data and A/B testing systems, which help monitor changes and collect information effectively. This method repeatedly adjusts algorithms to better meet user requirements.
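A click-based feedback loop of the kind described above can be sketched as a re-ranking step. This is an illustrative simplification, not a description of how Google or Netflix rank content: it orders items by a smoothed click-through rate, where the prior values (`prior_clicks`, `prior_impressions`) are assumptions chosen to stop items with very few impressions from dominating.

```python
def smoothed_ctr(clicks, impressions, prior_clicks=5.0, prior_impressions=100.0):
    """Click-through rate pulled toward a prior rate when data is scarce."""
    return (clicks + prior_clicks) / (impressions + prior_impressions)


def rerank(stats):
    """Order item ids by smoothed click-through rate, highest first."""
    return sorted(stats, key=lambda item: smoothed_ctr(*stats[item]), reverse=True)


# Illustrative (clicks, impressions) per result.
stats = {
    "result_a": (30, 1000),
    "result_b": (2, 10),      # high raw rate, but very little evidence
    "result_c": (80, 1000),
}
ranking = rerank(stats)
print(ranking)  # ['result_c', 'result_b', 'result_a']
```

Re-running this step as new click data arrives is one small instance of the repeated adjustment described in this section.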

Incorporating Diverse Data Sources

Bringing in different data sources is needed to reduce model drift and improve AI performance, helping systems adjust to new patterns and user behaviors.

To achieve this, use a combination of structured and unstructured data. For instance, text from news articles, user-generated feedback from forums, and transactional data can provide a well-rounded view.

Use Amazon S3 to store these datasets, allowing easy access for training. Organize your storage with key prefixes (which behave like folders) based on data type or source, ensuring efficient retrieval.

Refresh your datasets every three months to capture the newest trends. This will help your model stay accurate and useful.
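One way to decide whether a refresh is overdue is to measure how far recent data has drifted from the training data. The sketch below computes a Population Stability Index (PSI) for a single numeric feature. The bin count, the `eps` floor for empty bins, and the sample data are assumptions; the commonly quoted rule of thumb that a PSI above roughly 0.25 signals a major shift is a convention, not a guarantee.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a reference sample and a recent sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the reference range fall into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training_scores = [float(v) for v in range(100)]
recent_scores = [v + 30.0 for v in training_scores]  # simulated upward shift

psi_same = population_stability_index(training_scores, training_scores)
psi_shifted = population_stability_index(training_scores, recent_scores)
print(psi_same, round(psi_shifted, 2))
```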

Challenges in Implementing Feedback

Using feedback in AI systems can be difficult due to issues like data privacy and user expectations about how well AI works.

Data Privacy Concerns

Collecting user feedback raises data privacy concerns. It’s important to have strong systems in place to protect private information and comply with regulations.

- To keep data private and follow GDPR rules, begin by removing identifying details from feedback so that individual users cannot be singled out.

- Implement secure data collection methods, like TLS encryption (the successor to SSL), to protect information in transit. Make your privacy policy clear and accessible, explaining how data will be used and stored.

- Use tools like Typeform or SurveyMonkey, which have built-in compliance features.

- Frequently train your team on data protection methods and perform audits to find possible weaknesses. These proactive actions can protect user data and build trust.
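The first step in the list, removing identifying details, might look like the sketch below. It replaces user ids with a keyed hash and redacts email addresses from free-text comments. The record fields, the secret key handling, and the email pattern are illustrative assumptions. Note that keyed hashing is pseudonymization rather than full anonymization, so under GDPR the result is generally still treated as personal data.

```python
import hashlib
import hmac
import re

# Deliberately simple pattern; real comments may need broader PII detection.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def pseudonymize_user_id(user_id: str, secret_key: bytes) -> str:
    """Replace a user id with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def redact_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_PATTERN.sub("[email removed]", text)


def scrub_feedback(record: dict, secret_key: bytes) -> dict:
    """Return a copy of a feedback record with direct identifiers removed."""
    return {
        "user": pseudonymize_user_id(record["user_id"], secret_key),
        "comment": redact_emails(record["comment"]),
    }


# Hypothetical record; in practice the key would come from a secrets manager.
record = {"user_id": "u-123", "comment": "Contact me at jane.doe@example.com please"}
cleaned = scrub_feedback(record, secret_key=b"example-key-do-not-reuse")
print(cleaned["comment"])  # Contact me at [email removed] please
```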

Managing User Expectations

It is important to manage what users expect to keep them happy; explaining how their feedback is used can build trust in AI systems.

- To set realistic expectations, regularly update users on AI functionality.

- For instance, establish a bi-weekly newsletter detailing improvements based on user suggestions.

- Implement feedback loops where users can see how their input has directly influenced updates.

- Consider using a transparent rating system to show how responses are generated, allowing users to understand the AI’s learning process.

Being transparent about the process builds trust and supports collaboration, making users feel valued and part of AI’s development.

Upcoming Trends in AI Feedback Systems

AI feedback systems will probably concentrate on better analysis methods and faster feedback loops to support better decisions.

Organizations will rely more on tools such as Tableau to visualize data and Google Analytics to track website performance in near real time. These platforms let teams follow user interactions and adjust strategies quickly.

Machine learning algorithms can strengthen feedback by forecasting user actions from past data. For example, using TensorFlow to analyze past trends can help adjust marketing campaigns on the fly, ensuring messages remain relevant and effective.

This combination of timely data and more capable analysis will shorten the time between observing user behavior and acting on it, helping people make better decisions.

Frequently Asked Questions

What is the importance of feedback for AI systems?

Feedback is important for AI systems because it helps them learn and get better with experience. Without feedback, AI systems might keep making the same mistakes and struggle to handle new situations.

How does feedback improve AI systems?

Feedback helps AI systems correct mistakes and refine their algorithms, which improves their predictions and decisions over time.

What are some techniques for providing feedback to AI systems?

There are various techniques for providing feedback to AI systems, such as explicit feedback, implicit feedback, and reinforcement learning. Each technique has its own benefits and is used in different situations.

Why is it important to provide accurate and immediate feedback to AI systems?

Giving precise and fast responses to AI systems helps them learn the correct information and change their algorithms quickly. This results in better and more consistent performance over time.

How can feedback be used to improve AI systems for specific tasks?

Feedback can improve an AI system on a specific task by supplying targeted information and evaluations for that task. This lets the system focus on the areas where it is weakest.

Can feedback be used to prevent bias in AI systems?

Yes, feedback can be used to prevent bias in AI systems by identifying and correcting any biased patterns in the data being used. Different types of feedback can make AI systems fairer and more balanced in how they make decisions.