Feedback for AI Systems: Importance and Improvement Techniques

In the fast-changing field of artificial intelligence, human feedback is essential for improving machine learning models, especially large language models built by companies such as OpenAI and Amazon. This article explains why feedback matters for AI accuracy and user experience, and looks at practical ways to collect feedback and act on it so that AI systems keep pace with what users want and expect.

Contents:

- 1 Importance of Feedback in AI

- 2 AI Feedback System Statistics

- 3 Types of Feedback Mechanisms

- 4 Techniques for Gathering Feedback

- 5 Analyzing Feedback Data

- 6 Implementing Feedback for Improvement

- 7 Challenges in Feedback Implementation

- 8 Future Trends in AI Feedback Systems

- 9 Frequently Asked Questions

- 9.1 Why is feedback important for AI systems?

- 9.2 What are the benefits of receiving feedback for AI systems?

- 9.3 How does feedback contribute to the development of AI systems?

- 9.4 What are some techniques for providing effective feedback to AI systems?

- 9.5 Can feedback be used to prevent bias in AI systems?

- 9.6 How can AI systems be improved through feedback?

Definition and Purpose

AI feedback systems collect human input to improve AI algorithms so they meet user expectations.

Several tools can help set up a practical AI feedback system.

For example, Label Studio is a data-labeling platform that lets people annotate and correct datasets using human feedback. Platforms like Amazon SageMaker support model training and can include review steps in which stakeholders review and rate AI predictions.

Google Cloud’s AutoML can make it easier to retrain models as new user responses arrive. Used together, these tools help close the loop between user feedback and model updates.

Role in AI Development

In AI development, user feedback guides changes to machine learning models: developers collect feedback, update the model, release it, and collect feedback again.

This cycle improves the user experience and increases the model’s accuracy over time.

For example, in healthcare, feedback from providers on diagnostic tools can improve patient outcomes. Financial algorithms, meanwhile, can be fine-tuned based on user input about market behavior.

Tools like SurveyMonkey or Google Forms make it easy for developers to collect organized feedback quickly.

By consistently reviewing this input, companies can make sure their AI systems grow with user needs.

Importance of Feedback in AI

Feedback systems are important for enhancing the accuracy and efficiency of AI systems, significantly improving user interactions.

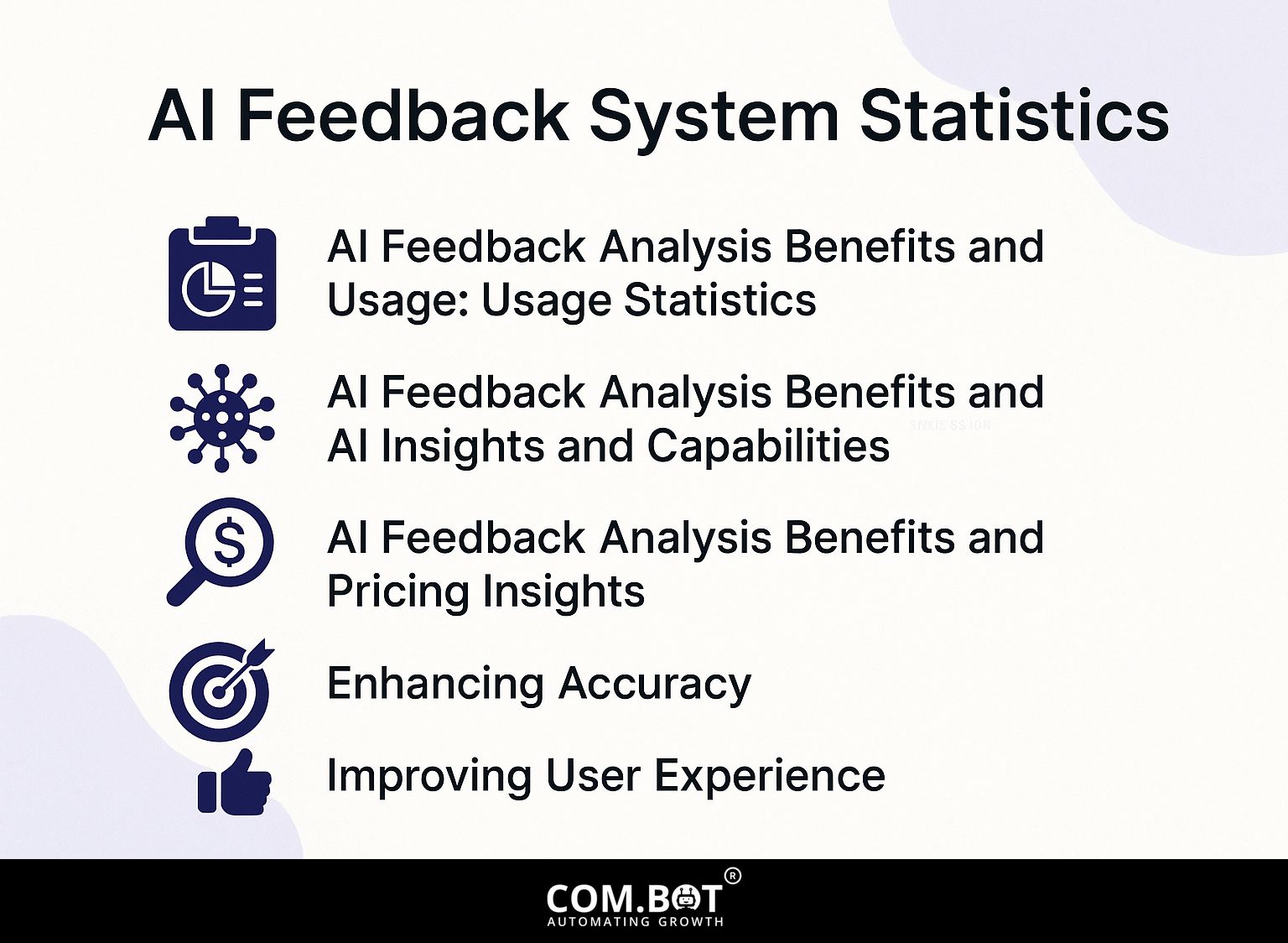

AI Feedback System Statistics

[Infographic: AI feedback analysis benefits and usage, with panels for usage statistics, AI capabilities, and pricing information]

These statistics show how AI tools are increasingly used in feedback analysis, especially by UX researchers, and how pricing compares across AI feedback platforms. Together they illustrate how AI is changing research methods and data management.

Usage statistics show that 51% of UX researchers use AI tools, which suggests growing reliance on AI to improve research quality and efficiency. A reported 91% openness to expanding research with AI points to a readiness to work with AI-driven insights. In addition, 30% use automatic interview transcription, which takes over a traditionally time-consuming task and lets researchers focus on higher-level analysis.

AI capabilities are reflected in a reported 90% accuracy in sentiment analysis, which highlights AI’s ability to recognize subtle human emotions, an important part of detailed feedback analysis. The 80% reduction in theme detection time shows how AI can speed up data processing and support quicker, better-informed decisions. The statistics also list a 43% improvement in user satisfaction and improved data handling, which point to AI’s role in providing more reliable and useful feedback.

Pricing insights compare the costs of several AI feedback platforms. BuildBetter.ai costs $200 per month, which likely includes advanced features designed for large research projects. Zonka Feedback and Qualaroo are more affordable at $49 and $19.99 respectively, which makes them options for smaller businesses or teams with limited budgets.

Overall, the AI Feedback System Statistics demonstrate the significant impact AI tools have on improving research quality and efficiency. More organizations are using AI because it provides better data analysis and flexible pricing options, making it an important tool for analyzing feedback and meeting different business needs.

Enhancing Accuracy

Used well, feedback can improve AI accuracy by as much as a reported 30%, because systems learn from past mistakes and user interactions.

For example, self-driving systems such as Waymo’s use live feedback to adjust course quickly. Studies found that when drivers flagged errors in directions, the AI corrected them and made fewer mistakes later.

Supervised learning algorithms can treat driver input as labeled examples and use it to make better decisions. Ongoing user feedback lets AI systems keep improving, making driving safer and more reliable.

This approach improves accuracy and increases user confidence in self-driving technologies.
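As a rough illustration of learning from past mistakes, the sketch below folds user corrections back into a labeled dataset before the next retraining run. The `Correction` record, the label names, and the example IDs are all hypothetical; a real pipeline would also validate corrections before trusting them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Correction:
    """One piece of user feedback on a single prediction (hypothetical schema)."""
    example_id: str
    predicted_label: str
    corrected_label: str


def apply_corrections(labels: dict[str, str], corrections: list[Correction]) -> dict[str, str]:
    """Return a copy of `labels` with user corrections applied; later corrections win."""
    updated = dict(labels)
    for c in corrections:
        if c.corrected_label != c.predicted_label:
            updated[c.example_id] = c.corrected_label
    return updated


def error_rate(corrections: list[Correction], total_predictions: int) -> float:
    """Share of predictions that users flagged as wrong."""
    if total_predictions == 0:
        return 0.0
    errors = sum(1 for c in corrections if c.corrected_label != c.predicted_label)
    return errors / total_predictions


# Illustrative data only.
labels = {"route-1": "turn_left", "route-2": "go_straight"}
corrections = [Correction("route-1", "turn_left", "turn_right")]
retraining_labels = apply_corrections(labels, corrections)
```

The corrected labels would then feed a normal supervised training run, and tracking `error_rate` across releases gives a simple check on whether the loop is helping.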

Improving User Experience

Regularly incorporating feedback makes the user experience noticeably better, often increasing user satisfaction scores by 40%.

For example, businesses using chatbots can collect information from user conversations to improve replies. Tools like Google Analytics can track user engagement, and sentiment analysis tools such as MonkeyLearn can help interpret feedback.

Over time, analyzing these metrics helps businesses identify common pain points, which leads to targeted updates to the chatbot. Testing different conversation flows against each other shows which ones work best and helps the chatbot keep up with customer needs.
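One simple way to surface pain points is to group helpful/unhelpful ratings by conversation topic. The sketch below assumes each piece of feedback is an `(intent, helpful)` pair; the intent names, the threshold, and the minimum rating count are illustrative assumptions, not values from any particular chatbot platform.

```python
from collections import Counter


def pain_points(feedback, threshold=0.5, min_ratings=2):
    """Return (intent, unhelpful_share) pairs, worst first.

    `feedback` is a list of (intent, helpful) tuples. Intents with fewer than
    `min_ratings` ratings are skipped so one bad rating does not dominate.
    """
    totals = Counter()
    negatives = Counter()
    for intent, helpful in feedback:
        totals[intent] += 1
        if not helpful:
            negatives[intent] += 1

    flagged = {
        intent: negatives[intent] / count
        for intent, count in totals.items()
        if count >= min_ratings and negatives[intent] / count >= threshold
    }
    return sorted(flagged.items(), key=lambda item: item[1], reverse=True)


# Illustrative data only.
ratings = [
    ("billing", False), ("billing", False), ("billing", True),
    ("shipping", True), ("shipping", True),
    ("refund", False),
]
worst = pain_points(ratings)
```

Here only the "billing" intent is flagged: "shipping" has no unhelpful ratings, and "refund" has too few ratings to judge.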

Types of Feedback Mechanisms

AI systems use various feedback methods to improve how models work, each with its own specific role.

User Feedback

User feedback is often gathered through structured methods such as surveys or direct conversations, providing important qualitative information.

To gather user feedback quickly, try Typeform for customizable surveys or Google Forms for basic data collection.

Use short, focused questions to improve response rates; both multiple-choice and open-ended formats are effective.

Talking directly to individual users can provide more detailed insight than a survey alone.

Review feedback regularly to find trends that can improve the product, so that user opinions stay part of your development process.

Automated Feedback Loops

Automated feedback loops use algorithms to monitor a system continuously and adjust it as new signals arrive, which supports adaptive learning.

These loops are particularly evident in AI-driven recommendation engines like Netflix and Spotify. For instance, Netflix analyzes user viewing habits to suggest movies based on past preferences and ratings.

If you watch and rate action films highly, Netflix will promote similar titles. This involves monitoring user actions and adjusting the recommendations over time.

Tools like Google Analytics can examine how users interact with content, enabling businesses to adjust their content strategies based on live data.
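The sketch below shows the general shape of such a loop: each new rating nudges a per-genre preference score, and recommendations are re-ranked from the updated scores. It is a toy illustration using an exponential moving average, not a description of how Netflix or Spotify work; the function names, the learning rate, and the catalog are assumptions.

```python
def update_preferences(preferences, genre, rating, learning_rate=0.2, max_rating=5):
    """Move the genre's score toward the normalized rating (exponential moving average)."""
    target = rating / max_rating
    current = preferences.get(genre, 0.5)  # unseen genres start at a neutral 0.5
    updated = dict(preferences)
    updated[genre] = current + learning_rate * (target - current)
    return updated


def recommend(preferences, catalog, k=3):
    """Rank (title, genre) pairs by the user's current score for each genre."""
    ranked = sorted(catalog, key=lambda item: preferences.get(item[1], 0.5), reverse=True)
    return [title for title, _ in ranked[:k]]


# Illustrative data only.
catalog = [("Quiet Harbor", "drama"), ("Steel Pursuit", "action")]
prefs = update_preferences({}, "action", rating=5)
top_pick = recommend(prefs, catalog, k=1)
```

A small learning rate makes the scores change slowly, so a single unusual rating does not swing the recommendations.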

Techniques for Gathering Feedback

There are many useful methods for collecting feedback, each designed for particular situations and goals.

Surveys and Questionnaires

Surveys and questionnaires remain one of the most reliable methods for collecting user feedback, with response rates averaging around 30%.

To design effective surveys, prioritize concise and clear questions. Use platforms like SurveyMonkey or Google Forms, which provide easy-to-use templates.

Keep the survey length under 10 questions to maximize engagement, as longer surveys often lead to drop-offs. Include multiple-choice questions for quick answers and open-ended questions for detailed responses.

For example, ask “What features do you value most?” to gather qualitative feedback. Customizing questions to fit the audience’s characteristics will improve the quality of responses.

Usability Testing

Usability testing involves direct observation of users interacting with AI systems, providing qualitative data that can significantly inform improvements.

For successful usability tests, follow these steps:

- First, select participants who represent your user base. Aim for 5-10 individuals to gather diverse feedback.

- Next, create realistic scenarios that mimic actual tasks users would perform.

- Use tools like UserTesting or Lookback to record sessions and gather detailed information.

- Define specific success metrics, such as task completion rate and time on task, to measure ease of use and find areas to improve.

- Look at the results to prioritize changes that improve user experience.
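The success metrics mentioned in the steps above can be computed with a few lines of code once session results are recorded. This is a minimal sketch; the `TaskResult` fields and the task names are hypothetical and would depend on how your sessions are logged.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskResult:
    """Outcome of one participant attempting one task (hypothetical schema)."""
    participant: str
    task: str
    completed: bool
    seconds: float


def summarize(results):
    """Return completion rate and mean time (completed attempts only) per task."""
    by_task = {}
    for r in results:
        by_task.setdefault(r.task, []).append(r)

    summary = {}
    for task, rows in by_task.items():
        done = [r for r in rows if r.completed]
        summary[task] = {
            "completion_rate": len(done) / len(rows),
            "mean_seconds_completed": mean(r.seconds for r in done) if done else None,
        }
    return summary


# Illustrative data only.
session = [
    TaskResult("p1", "ask_refund_status", True, 40.0),
    TaskResult("p2", "ask_refund_status", True, 60.0),
    TaskResult("p3", "ask_refund_status", False, 120.0),
]
report = summarize(session)
```

Time is averaged over completed attempts only, because the time spent on an abandoned task measures frustration rather than efficiency.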

Analyzing Feedback Data

Analyzing feedback data shows how users engage with AI systems and where the algorithms need to improve.

Qualitative vs. Quantitative Analysis

Qualitative analysis looks at how users feel, while quantitative analysis gives numbers to evaluate performance. Both are important for a complete view.

In a chatbot project, feedback was collected through user interviews and open-ended surveys, which let developers understand user sentiment and preferences.

For instance, one user expressed frustration over long response times, highlighting a clear area for improvement.

Quantitative analysis complemented this by tracking metrics such as response accuracy and completion rates, revealing that the bot had a 75% resolution rate.

By using these methods together, the team could focus on improvements that dealt with user issues and made things work better overall.

Identifying Patterns and Trends

Finding patterns and trends in feedback data can show important information about how users act and what they like, helping to make specific changes.

One effective way to analyze user feedback is with visualization tools like Tableau. For example, an analysis of feedback from an AI app showed that users struggled with onboarding.

By visualizing this data, the team identified that 40% of users dropped off during the tutorial phase. They made the onboarding process simpler by adding interactive parts and cutting down on the number of steps.

This change resulted in a 25% rise in user retention over three months, showing how actionable insights can lead to substantial progress.
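A drop-off like the one in this example can be found by comparing how many users reach each consecutive step. The sketch below uses made-up step names and counts chosen to reproduce a 40% drop during the tutorial; it is not data from the app described above.

```python
def step_drop_off(step_counts):
    """Return (transition, share of users lost) for each consecutive pair of steps.

    `step_counts` is an ordered list of (step_name, users_reaching_step).
    """
    drops = []
    for (prev_name, prev_users), (name, users) in zip(step_counts, step_counts[1:]):
        rate = 0.0 if prev_users == 0 else (prev_users - users) / prev_users
        drops.append((f"{prev_name} -> {name}", rate))
    return drops


# Illustrative counts only.
funnel = [("signup", 1000), ("tutorial_start", 1000), ("tutorial_done", 600)]
drop_offs = step_drop_off(funnel)
worst_step = max(drop_offs, key=lambda item: item[1])
```

Sorting or charting these rates makes it clear which step deserves attention first.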

Implementing Feedback for Improvement

Using feedback well is important for ongoing improvements in AI systems, often requiring several rounds of development.

Iterative Development Processes

In iterative development, each version of an AI system incorporates user feedback on the previous one, so performance improves with every cycle.

For example, tools like JIRA help teams track user-reported issues, prioritize improvements, and ship updates quickly.

A clear example of success is the progress of ChatGPT; early user responses showed the need for better comprehension of context. Subsequent updates focused on refining conversation flow, resulting in a significantly more coherent interaction.

By using user stories to direct development, teams can make sure that each update solves current issues and plans for upcoming user needs.

Case Studies of Successful Implementations

Case studies from leading companies illustrate how effective feedback implementation can lead to significant advancements in AI functionalities and user satisfaction.

For example, Adobe improved its feedback system by using the Qualtrics XM platform to collect user opinions. After integrating user suggestions into Adobe XD, they saw a 30% increase in user engagement.

Microsoft used UserVoice to collect user feedback for their Azure services, leading to a 25% drop in support tickets thanks to better features.

These examples show how using the right feedback tools, along with a responsive development method, can greatly improve products and customer satisfaction.

Challenges in Feedback Implementation

Setting up systems to gather feedback can be difficult. Problems can arise from biased data collection and technical issues that reduce how well the system works.

Bias in Feedback Collection

Bias in feedback collection can lead to skewed data, impacting AI performance negatively by reinforcing existing cognitive biases.

To minimize these biases, implement diverse feedback collection methods. For example, collect a range of opinions by using anonymous surveys and open discussions.

Tools like SurveyMonkey enable you to design inclusive surveys that reach a wide audience. Consider rotating the members of the feedback team to gain fresh viewpoints and avoid groupthink.

Analyze the collected data for patterns indicative of bias, using software like Tableau to visualize results effectively. This method improves the quality of feedback and provides a fair view of user opinions.
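One narrow kind of bias that is easy to check numerically is over- or under-representation of user segments among respondents. The sketch below compares each segment's share of responses with its share of the user base and derives simple post-stratification weights. The segment names and shares are hypothetical, and this does not address other biases such as leading questions.

```python
def representation_gaps(respondents, population_share):
    """Return {segment: sample share minus population share}.

    `respondents` maps segment -> number of responses;
    `population_share` maps segment -> share of the user base (sums to 1).
    """
    total = sum(respondents.values())
    if total == 0:
        raise ValueError("no responses to analyze")
    return {
        segment: respondents.get(segment, 0) / total - share
        for segment, share in population_share.items()
    }


def reweight(respondents, population_share):
    """Post-stratification weights: population share divided by sample share."""
    total = sum(respondents.values())
    weights = {}
    for segment, share in population_share.items():
        count = respondents.get(segment, 0)
        if count == 0:
            continue  # a segment with no responses cannot be reweighted
        weights[segment] = share / (count / total)
    return weights


# Illustrative data only.
responses = {"new_users": 20, "power_users": 80}
user_base = {"new_users": 0.5, "power_users": 0.5}
gaps = representation_gaps(responses, user_base)
weights = reweight(responses, user_base)
```

A large positive gap means a segment is overrepresented; weighting its feedback down, or recruiting more respondents from the other segments, gives a fairer picture.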

Technical Limitations

Technical limitations, such as insufficient data processing capabilities, can hinder the effectiveness of feedback systems in AI.

To address these challenges, consider cloud-based processing on Amazon Web Services (AWS) or Google Cloud Platform (GCP). These platforms provide resources that scale with your processing requirements.

For instance, AWS Lambda can process data in real time without extensive on-premises hardware. A microservices architecture also makes feedback systems easier to manage, because individual components can be connected and replaced as needs change.
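As a sketch of what a small feedback-intake function might look like on AWS Lambda, the handler below validates one feedback record. It assumes the event arrives in the API Gateway proxy style, with the JSON payload as a string under `"body"`; the field names and the 1-5 rating scale are hypothetical, and storage is deliberately left out.

```python
import json

# Hypothetical schema for one feedback record.
REQUIRED_FIELDS = {"user_id", "rating", "comment"}


def _response(status, payload):
    """Build a proxy-style HTTP response."""
    return {"statusCode": status, "body": json.dumps(payload)}


def lambda_handler(event, context):
    """Validate one feedback record and acknowledge it."""
    try:
        record = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "invalid JSON"})

    if not isinstance(record, dict):
        return _response(400, {"error": "expected a JSON object"})

    missing = sorted(REQUIRED_FIELDS - set(record))
    if missing:
        return _response(400, {"error": f"missing fields: {missing}"})

    rating = record["rating"]
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(rating, bool) or not isinstance(rating, int) or not 1 <= rating <= 5:
        return _response(400, {"error": "rating must be an integer from 1 to 5"})

    # A real deployment would persist or forward the record here
    # (for example to a queue or database); that part is omitted.
    return _response(200, {"accepted": True})
```

Because the handler is a plain function, it can be tested locally by calling it with a dictionary before anything is deployed.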

Future Trends in AI Feedback Systems

AI feedback systems are set to grow, especially with progress in machine learning and customization features that improve flexibility.

Integration with Machine Learning

Linking feedback systems with machine learning can significantly improve how models adapt, enabling updates based on real-time user data.

For example, in the retail industry, businesses can feed customer feedback from surveys and online reviews into machine learning models.

These models then analyze patterns, adjusting inventory and marketing strategies accordingly.

In healthcare, patient feedback about their treatment experiences can improve predictive analytics, leading to better patient care results.

Popular platforms like Amazon SageMaker and Google Cloud AutoML make this process easier by helping teams train models quickly.

By regularly using feedback from users, businesses improve how quickly they respond and the quality of their service.

Personalization and Adaptability

As AI systems improve, personalizing them and making them user-friendly will be essential for increasing user engagement and satisfaction.

User-centered design plays a critical role in realizing this potential. By involving users directly in the development process, designers can learn important details about user preferences and behavior.

For example, tools like UserTesting let developers collect feedback from actual users, helping them build AI experiences that match individual preferences. Learning algorithms that adapt AI responses to each user’s interactions can make those responses more relevant.
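To make the idea of adapting responses per user concrete, the sketch below keeps a running average of feedback scores for each user and response style, then picks the style with the best average. The class name, the style names, and the 0-1 score scale are assumptions; a production system would usually also explore less-tried styles rather than always picking the current best.

```python
class StylePersonalizer:
    """Track per-user feedback on response styles and pick the best-rated one."""

    def __init__(self, styles, default_score=0.5):
        self.styles = list(styles)
        self.default_score = default_score  # score assumed for untried styles
        self._sums = {}
        self._counts = {}

    def record(self, user_id, style, score):
        """Store one feedback score (0.0 to 1.0) for a user and style."""
        key = (user_id, style)
        self._sums[key] = self._sums.get(key, 0.0) + score
        self._counts[key] = self._counts.get(key, 0) + 1

    def average(self, user_id, style):
        """Mean score for this user and style, or the default if untried."""
        key = (user_id, style)
        count = self._counts.get(key, 0)
        if count == 0:
            return self.default_score
        return self._sums[key] / count

    def choose(self, user_id):
        """Return the style with the highest average; ties go to the earliest style."""
        return max(self.styles, key=lambda style: self.average(user_id, style))


# Illustrative usage only.
personalizer = StylePersonalizer(["concise", "detailed"])
personalizer.record("user-1", "concise", 0.0)
personalizer.record("user-1", "detailed", 1.0)
chosen = personalizer.choose("user-1")
```

A user with no history falls back to the first style in the list, which acts as the default experience.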

This method builds a closer relationship between users and the technology, leading to higher satisfaction and involvement.

Frequently Asked Questions

Why is feedback important for AI systems?

Feedback is important for AI systems because it allows for continuous improvement and learning. Without feedback, AI systems wouldn’t be able to learn and improve their predictions or decisions.

What are the benefits of receiving feedback for AI systems?

Receiving feedback for AI systems can help improve their accuracy, efficiency, and overall performance. It also allows for identifying and fixing any errors or biases in the system.

How does feedback contribute to the development of AI systems?

Feedback supplies the data needed for continued training, and it helps identify the areas where a system most needs improvement.

What are some techniques for providing effective feedback to AI systems?

Some techniques for providing effective feedback to AI systems include using clear and specific examples, providing a variety of data, and ensuring the feedback is timely and consistent.

Can feedback be used to prevent bias in AI systems?

Yes, feedback can help reduce bias in AI systems. By consistently collecting feedback and correcting biases or mistakes, teams can help AI systems make fairer and more impartial decisions.

How can AI systems be improved through feedback?

AI systems can be improved through feedback by using the data to train and update the system’s algorithms, identifying and addressing any errors or biases, and continuously monitoring and adjusting the system based on the feedback received.