Feedback for AI Systems: Importance and Improvement Techniques

In the fast-changing field of artificial intelligence, human input is essential for improving machine learning models. OpenAI and Label Studio highlight the importance of human judgment in improving AI systems through methods like reinforcement learning from human feedback. This article examines why feedback matters in AI development and offers strategies for improving performance and reducing bias. It also shows how user feedback can make AI a more dependable tool.


Definition of Feedback

In AI, feedback is the information given to a model that helps it change and make its predictions or results better.

In supervised learning, feedback helps correct the model's mistakes. For instance, when training an image recognition model, a labeled dataset provides explicit feedback, indicating whether each of the model's classifications is correct.

Tools like TensorFlow and PyTorch facilitate this process by allowing developers to implement loss functions that quantify error. Models use methods like gradient descent to decrease errors, adjusting predictions repeatedly to improve accuracy over time.

This structured feedback process is important for creating reliable AI systems that can provide trustworthy predictions.
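To make the loss-and-gradient-descent idea concrete, here is a minimal sketch in plain Python (no TensorFlow or PyTorch) that fits a straight line to a few labeled points. The function names and the toy data, generated from y = 2x + 1, are illustrative assumptions; frameworks automate the same gradient computation for much larger models.

```python
def mse_loss(w, b, xs, ys):
    """Mean squared error: quantifies how far predictions are from the labels."""
    n = len(xs)
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n


def gradient_step(w, b, xs, ys, lr):
    """One gradient-descent update: move w and b against the loss gradient."""
    n = len(xs)
    # Partial derivatives of the MSE with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return w - lr * grad_w, b - lr * grad_b


def train(xs, ys, lr=0.05, steps=2000):
    w, b = 0.0, 0.0
    for _ in range(steps):
        w, b = gradient_step(w, b, xs, ys, lr)
    return w, b


# Labeled data generated from y = 2x + 1: the labels act as the feedback signal.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

print(f"loss before training: {mse_loss(0.0, 0.0, xs, ys):.3f}")
w, b = train(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}, loss={mse_loss(w, b, xs, ys):.6f}")
```

Each step uses the error on the labeled data to nudge the parameters, which is the repeated adjustment the paragraph above describes.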

Role of Feedback in AI Development

Feedback improves the training of AI models, helping them learn from both successful and unsuccessful experiences using reinforcement learning methods.

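In practice, models like OpenAI's GPT have been fine-tuned with reinforcement learning from human feedback (RLHF), in which people rank or rate model outputs and those judgments are used to train the model toward preferred responses.

When users rate or correct a system's answer, that signal can be collected and used in later training rounds to improve future answers.

This repeated process uses algorithms to learn from the interactions people preferred and to adjust the model's parameters accordingly, which improves the quality of generated content.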

Developers can set up feedback loops with tools like TensorFlow or PyTorch, allowing them to add human input to their automated systems for continual enhancement.
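Reward models in RLHF are commonly trained on pairwise comparisons with a Bradley-Terry style loss, -log(sigmoid(score_preferred - score_rejected)). The sketch below applies that loss to learn one score per response instead of training a neural reward model; the response names and preferences are hypothetical, and this is not OpenAI's implementation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_preference_scores(responses, preferences, lr=0.1, epochs=500):
    """Learn a scalar score per response from (preferred, rejected) pairs.

    Per-pair loss: -log(sigmoid(score[preferred] - score[rejected])).
    """
    scores = {r: 0.0 for r in responses}
    for _ in range(epochs):
        for preferred, rejected in preferences:
            margin = scores[preferred] - scores[rejected]
            # The loss gradient w.r.t. the margin is -(1 - sigmoid(margin)),
            # so gradient descent raises the preferred score and lowers the other.
            step = lr * (1.0 - sigmoid(margin))
            scores[preferred] += step
            scores[rejected] -= step
    return scores


responses = ["answer_a", "answer_b", "answer_c"]
# Hypothetical human judgments: a beat b, b beat c, a beat c.
preferences = [("answer_a", "answer_b"), ("answer_b", "answer_c"), ("answer_a", "answer_c")]

scores = fit_preference_scores(responses, preferences)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

In a full RLHF pipeline, a learned score like this would then serve as the reward signal for fine-tuning the model itself.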

Importance of Feedback for AI Systems

Allowing users to communicate their ideas to AI systems greatly improves how these systems work and how well they meet user requirements.

Enhancing Performance and Accuracy

Effective feedback can improve AI performance by over 30%, as seen in machine learning models used in healthcare diagnostics.

For instance, utilizing user feedback mechanisms, such as surveys or direct input, allows developers to fine-tune algorithms based on real-world experiences.

A case study from a major healthcare provider showed that by implementing feedback loops in their diagnostic AI, they reduced error rates by 25%. Tools such as Google Forms for surveys or platforms like UserVoice for direct feedback can be very helpful in this process.

Focusing on helpful feedback and analysis helps organizations keep improving, leading to better results in important areas.
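A feedback loop of this kind can be sketched as: log the cases where experts corrected the model, add them to the training data, retrain, and compare error rates on held-out cases. The nearest-centroid classifier, the two numeric features, and all data points below are hypothetical stand-ins, not a real diagnostic model.

```python
import math
from collections import defaultdict


def fit_centroids(examples):
    """Return the mean feature vector for each label (nearest-centroid model)."""
    sums = defaultdict(lambda: [0.0, 0.0])
    counts = defaultdict(int)
    for (x1, x2), label in examples:
        sums[label][0] += x1
        sums[label][1] += x2
        counts[label] += 1
    return {lab: (s[0] / counts[lab], s[1] / counts[lab]) for lab, s in sums.items()}


def predict(centroids, features):
    """Predict the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda lab: math.dist(features, centroids[lab]))


def error_rate(centroids, examples):
    wrong = sum(predict(centroids, f) != label for f, label in examples)
    return wrong / len(examples)


# Initial training data (hypothetical features, e.g. two normalized test results).
training = [((0, 0), "low"), ((1, 0), "low"), ((0, 1), "low"),
            ((6, 6), "high"), ((7, 6), "high"), ((6, 7), "high")]

# Held-out cases with confirmed outcomes, used only for evaluation.
evaluation = [((2.6, 2.4), "high"), ((2.9, 2.9), "high"), ((0.5, 0.5), "low"),
              ((6, 5), "high"), ((1.0, 1.2), "low")]

# Corrections logged when experts overrode the model's prediction.
corrections = [((2.5, 2.5), "high"), ((3.0, 2.8), "high"), ((2.7, 3.1), "high")]

before = error_rate(fit_centroids(training), evaluation)
after = error_rate(fit_centroids(training + corrections), evaluation)
print(f"error rate before feedback: {before:.2f}, after: {after:.2f}")
```

Keeping the evaluation cases separate from the logged corrections keeps the before/after comparison honest.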

Identifying Bias and Ethical Concerns

Feedback systems play a key role in finding and fixing biases in AI, which helps to promote fair development practices.

To put feedback systems into action effectively, consider using a data annotation tool like Label Studio, which helps you review and label datasets so you can check that they are varied and inclusive.

Establish a regular review cycle that includes stakeholder feedback, allowing users to report perceived biases in AI predictions. For example, after putting a model in place, use surveys or focus groups to collect feedback on how well it is working.

By using these methods together, you can build a stronger plan to find and reduce bias before it affects your system.
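One simple way to act on bias reports is to compare error rates across user groups in the reviewed predictions. This is a minimal sketch: the group names, records, and the 0.1 maximum gap are hypothetical, and error-rate gaps are only one of several fairness measures.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """records: iterable of (group, predicted_label, true_label)."""
    totals, errors = defaultdict(int), defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        errors[group] += int(predicted != actual)
    return {group: errors[group] / totals[group] for group in totals}


def flag_disparities(rates, max_gap=0.1):
    """Flag groups whose error rate exceeds the best group's by more than max_gap."""
    best = min(rates.values())
    return sorted(group for group, rate in rates.items() if rate - best > max_gap)


# Hypothetical reviewed predictions collected through user reports.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 1), ("group_b", 1, 1), ("group_b", 0, 0),
]
rates = error_rates_by_group(records)
print(rates, flag_disparities(rates))
```

Flagged groups are a prompt for human review of the data and model, not an automatic verdict.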

Types of Feedback Mechanisms

AI systems use different feedback mechanisms, including human-in-the-loop review and automated feedback loops, to improve their results.

Human-in-the-Loop Systems

Human-in-the-loop systems use human input to improve AI training, allowing for immediate adjustments and improvements.

This approach lets AI models learn from data while also adapting to human guidance. For instance, in autonomous vehicles, operators might intervene during complex scenarios, helping the system learn appropriate responses.

Tools like Amazon SageMaker offer ways to include human feedback effectively, enabling developers to adjust models more easily. Using platforms such as Snorkel facilitates the labeling of training data, enabling rapid adjustments based on real-world outcomes.

Implementing these methods can significantly improve AI accuracy in various applications.
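The human-in-the-loop pattern can be sketched as a wrapper that defers low-confidence decisions to a human reviewer and stores each override as a new training example. The toy model, the reviewer function, the obstacle-distance feature, and the 0.8 threshold are all hypothetical; this is not the Amazon SageMaker or Snorkel API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class HumanInTheLoop:
    """Route low-confidence model decisions to a human and log corrections."""
    model: Callable[[dict], Tuple[str, float]]       # returns (label, confidence)
    reviewer: Callable[[dict, str], Optional[str]]   # returns a correction or None
    threshold: float = 0.8
    corrections: List[Tuple[dict, str]] = field(default_factory=list)

    def decide(self, case: dict) -> str:
        label, confidence = self.model(case)
        if confidence >= self.threshold:
            return label  # confident enough: no human involved
        corrected = self.reviewer(case, label)
        if corrected is not None and corrected != label:
            # The human override becomes a training example for the next update.
            self.corrections.append((case, corrected))
            return corrected
        return label


def toy_model(case):
    d = case["obstacle_distance_m"]
    if d > 20:
        return "continue", 0.95
    if d > 5:
        return "continue", 0.6
    return "brake", 0.9


def toy_reviewer(case, proposed):
    # A human operator who brakes whenever an obstacle is closer than 15 m.
    return "brake" if case["obstacle_distance_m"] < 15 else None


loop = HumanInTheLoop(toy_model, toy_reviewer)
decisions = [loop.decide({"obstacle_distance_m": d}) for d in (30, 10, 3, 18)]
print(decisions, loop.corrections)
```

The `corrections` list is what would feed the next retraining round; confident decisions never reach the reviewer.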

Automated Feedback Loops

Automated feedback loops use performance data to update AI models continuously, without human intervention.

In reinforcement learning, for instance, an AI agent plays a game and receives rewards or penalties based on its actions. This feedback helps it adjust its strategy.

For example, when training a game AI like AlphaGo, the model improves its choices based on the results of each game. Tools like TensorFlow or PyTorch enable developers to implement these feedback mechanisms, allowing AI to learn from simulated environments or real-world data.

By studying performance data over time, models get better at reaching goals and adjusting their algorithms on their own.
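A minimal stand-in for this reward-driven loop is an epsilon-greedy multi-armed bandit: the agent mostly picks the action with the best average reward so far, occasionally explores, and updates its estimates from each reward without human input. This is far simpler than AlphaGo's training; the win probabilities and the 0.1 exploration rate are hypothetical.

```python
import random


def epsilon_greedy(reward_fn, n_actions, steps=5000, epsilon=0.1, seed=0):
    """Learn action values purely from reward feedback (no human labels)."""
    rng = random.Random(seed)
    counts = [0] * n_actions
    values = [0.0] * n_actions  # running average reward for each action
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore a random action
        else:
            action = max(range(n_actions), key=lambda a: values[a])  # exploit
        reward = reward_fn(action, rng)
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


# Hypothetical environment: each action wins with a fixed, unknown probability.
WIN_PROBS = [0.2, 0.5, 0.8]


def reward_fn(action, rng):
    return 1.0 if rng.random() < WIN_PROBS[action] else 0.0


values, counts = epsilon_greedy(reward_fn, n_actions=len(WIN_PROBS))
best = max(range(len(values)), key=lambda a: values[a])
print(f"best action: {best}, estimated values: {[round(v, 2) for v in values]}")
```

The exploration rate trades off trying new actions against exploiting the current best estimate.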

Techniques for Collecting Feedback

Collecting feedback, both by asking users directly and by measuring how the system performs, is essential for making AI better.

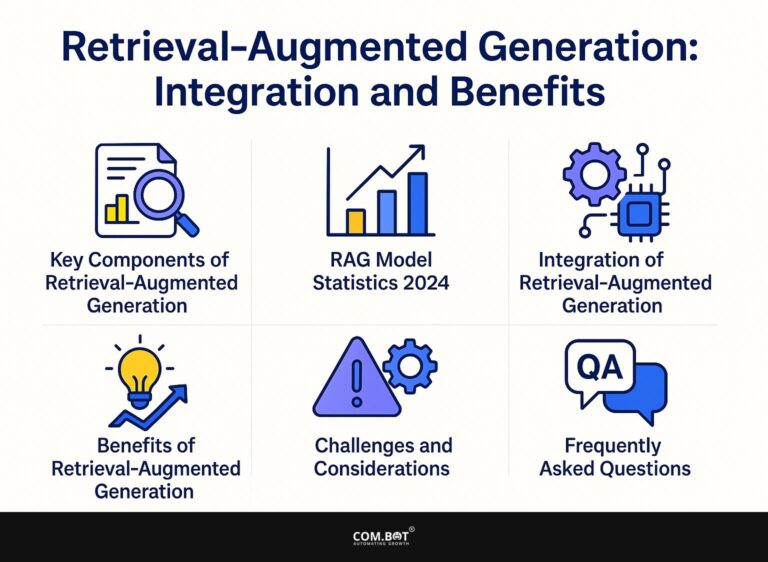

AI Feedback Systems Analysis

AI Feedback Systems Analysis

AI Impact on Feedback Analysis: AI Feedback Analysis Benefits

AI Impact on Feedback Analysis: AI in Customer Service

The AI Feedback Systems Analysis shows a detailed view of how artificial intelligence is changing feedback analysis and customer service. The data highlights significant improvements in efficiency, customer satisfaction, and workload management through the integration of AI technologies.

AI Impact on Feedback Analysis reveals substantial benefits. Implementing AI in feedback analysis has led to a 90% reduction in analysis time, speeding up how quickly companies learn from customer feedback. This efficiency helps companies respond faster to customer demands and shifts in the market. Using AI has also led to a 9.44% increase in Customer Satisfaction (CSAT), showing that AI can improve how businesses engage with customers and provide services.

- Ticket Reduction using AI: The data indicates a 50% reduction in support tickets when AI is used. This significant decrease translates into less strain on customer service teams and suggests that AI effectively resolves issues before they escalate into formal complaints.

- Customer Interaction Evaluation Rate: With AI-based Quality Assurance (QA), the evaluation rate reaches 100%: every customer interaction is reviewed to maintain service quality and find ways to improve. This detailed review can support targeted training and improved performance, which increases customer satisfaction.

AI in Customer Service highlights how AI helps with customer service issues. AI systems currently handle 80% of the customer service workload, which relieves pressure on human agents and lets them focus on more complex or sensitive issues. Platforms like Zendesk have drawn on 18 billion interactions as AI training data, showing that AI is being developed at scale to learn and respond usefully to what customers need.

In summary, the AI Feedback Systems Analysis demonstrates how AI is changing feedback analysis and customer service: it helps businesses improve productivity, satisfy customers, and manage workloads effectively. It is proving to be a helpful tool for running customer service operations smoothly and delivering great customer experiences.

User Surveys and Interviews

User surveys and interviews provide important information that improves AI model training and, in turn, the experience for users.

To create successful surveys, begin by clearly stating what you want to achieve; this will help you create your questions. Ask open-ended questions to get detailed feedback, like “Which features do you find most useful?”

For participant selection, target a diverse group using platforms like social media and user forums. A recommended tool for this is SurveyMonkey Pro, priced at $25/month, which allows for customizable templates and analytics to track responses.

Using these strategies gives you a much clearer picture of how users experience your AI system.

Performance Metrics and Analytics

Using performance metrics and analytics enables AI developers to track system behavior and user interactions over time.

Important metrics to focus on are accuracy, precision, and recall; together they indicate how well your models are working.
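For a binary classifier, these metrics can be computed directly from predictions and ground-truth labels. The helper below is a plain-Python sketch with made-up labels; libraries such as scikit-learn provide equivalent functions.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, and recall for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    # Guard against division by zero when there are no positive predictions/labels.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Precision asks how many flagged items were truly positive; recall asks how many true positives were found.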

Using tools like Mixpanel, which costs $89 per month, can improve your analysis with funnel reports and cohort analysis.

By using A/B testing tools like Optimizely, you can experiment with different algorithms or features to observe their impact on user interaction.
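To judge whether a difference between two variants in an A/B test is more than noise, a common approach is a two-proportion z-test on a conversion-style metric. This sketch does not reproduce any particular tool's statistics; the counts are hypothetical.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: variant B (new algorithm) vs. variant A (current).
z, p = two_proportion_z_test(200, 2000, 260, 2000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Deciding the significance threshold and sample size before the test starts avoids reading too much into early results.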

Setting clear goals for each measurement will help your evaluations lead to real improvements and better performance in your AI systems.

Analyzing Feedback Data

Careful analysis of feedback data separates useful signals from irrelevant details, which helps guide AI improvements.

Qualitative vs. Quantitative Analysis

Qualitative analysis captures user sentiment while quantitative analysis provides measurable data to inform AI adjustments.

To use both methods well, begin with a qualitative review by gathering user opinions through surveys or social media. Use tools like SurveyMonkey or Google Forms to make detailed questions that find out what users think.

To get numerical data, use tools like Google Analytics to monitor how users interact with your site, including the number of page visits and how often users leave the site quickly. This information helps you adjust your AI systems quickly, ensuring they satisfy user requirements and performance goals.

Combining user sentiment with measurable data gives a complete view of user experiences.
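Combining the two kinds of data can be as simple as attaching a sentiment score to each free-text comment and averaging it next to the numeric rating for the same feature. The tiny word lists below are a hypothetical stand-in for a real sentiment model, and the feedback records are invented for illustration.

```python
# Hypothetical word lists standing in for a real sentiment model.
POSITIVE = {"helpful", "fast", "accurate", "great", "love"}
NEGATIVE = {"slow", "wrong", "confusing", "useless", "hate"}


def sentiment_score(comment):
    """Count positive words minus negative words in a free-text comment."""
    words = [w.strip(".,!?").lower() for w in comment.split()]
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def summarize(feedback):
    """Average sentiment (qualitative) and rating (quantitative) per feature."""
    totals = {}
    for item in feedback:
        t = totals.setdefault(item["feature"], {"n": 0, "sentiment": 0, "rating": 0})
        t["n"] += 1
        t["sentiment"] += sentiment_score(item["comment"])
        t["rating"] += item["rating"]
    return {
        feature: {"mean_sentiment": t["sentiment"] / t["n"], "mean_rating": t["rating"] / t["n"]}
        for feature, t in totals.items()
    }


feedback = [
    {"feature": "search", "comment": "Fast and accurate results!", "rating": 5},
    {"feature": "search", "comment": "Great, but sometimes slow.", "rating": 4},
    {"feature": "chatbot", "comment": "Confusing and often wrong.", "rating": 2},
    {"feature": "chatbot", "comment": "Helpful for simple questions.", "rating": 3},
]
summary = summarize(feedback)
print(summary)
```

Features where sentiment and ratings disagree are often the most useful ones to investigate further.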

Tools and Techniques for Analysis

Using the right tools for data analysis can make the feedback process more efficient, leading to better AI performance.

Consider using tools like Tableau for data visualization, which starts at $70/month, allowing for intuitive dashboards that reveal trends at a glance.

For detailed statistical analysis, R is a great choice and is free to use, offering many libraries for different calculations.

Google Analytics can monitor how users engage with a website, offering important data on how often users interact.

Using these tools together lets you quickly go through feedback to find areas where AI needs improvement, helping you make informed choices to improve performance.

Implementing Feedback for Improvement

Effectively using feedback in AI models is important for constant improvement and flexibility in different situations.

Iterative Development Processes

Working in short, regular development cycles lets teams adjust quickly to user feedback and improve AI performance.

Scrum is a helpful method that focuses on short cycles called sprints, usually lasting two to four weeks.

During each sprint, teams set clear goals, hold daily meetings to check progress, and collect user feedback after release.

Key milestones include sprint planning, review, and retrospective meetings, allowing teams to reflect on what worked and what can improve.

For instance, if a feature isn’t well-received, adjustments can be made in the next sprint, ensuring that the product aligns closely with user needs.

Feedback Integration Strategies

Using effective methods to include feedback helps AI systems stay responsive and meet user needs.

To achieve this, regularly review user interactions and analytics to identify trends and pain points. Look at tools such as Google Analytics to learn how users interact with your site, or SurveyMonkey to gather feedback directly from users.

Schedule bi-weekly review meetings where your team discusses this data, prioritizing actionable changes. To manage projects, use Jira to monitor feedback progress, starting at $10/month.

Document the changes and let users know about updates. This helps you keep improving your AI system to match what they need.
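To prioritize actionable changes in those review meetings, one option is to tag each piece of feedback with a theme and rank themes by frequency weighted by severity. The tags and severity weights below are hypothetical and should be set by your team.

```python
from collections import Counter

# Hypothetical severity weights agreed on by the team (unknown tags default to 1).
SEVERITY = {"wrong_answer": 3, "slow": 2, "ui": 1}


def prioritize(tags, severity=SEVERITY):
    """Rank feedback themes by (number of reports x severity weight)."""
    counts = Counter(tags)
    scores = {tag: n * severity.get(tag, 1) for tag, n in counts.items()}
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))


# Themes tagged on two weeks of user feedback (hypothetical).
tags = ["slow", "ui", "wrong_answer", "slow", "ui", "ui", "wrong_answer", "slow", "ui"]
priorities = prioritize(tags)
print(priorities)
```

The ranked list can then be turned into tickets in a project tracker such as Jira.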

Case Studies and Examples

Looking at examples of how feedback is used in practice shows what works well and what can go wrong.

Successful Feedback Implementations

Effective feedback methods in AI systems show the strength of focusing on users and gradual improvement.

Netflix looks at what people rate and watch to offer them personalized recommendations. This system uses tools such as Apache Kafka for handling data as it arrives and TensorFlow for training machine learning models.

As a result, Netflix reported a 75% success rate in content recommendations, significantly enhancing user engagement and satisfaction.

Companies wanting to do this can begin by setting up a strong system to gather feedback, using tools such as Google Analytics or Mixpanel to monitor how users engage, and gradually improving their algorithms based on user comments.
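As an illustration of learning from ratings, here is a minimal matrix-factorization sketch, a common collaborative-filtering technique, trained with stochastic gradient descent. It is not Netflix's system; the ratings, the two latent factors, and the learning-rate and regularization values are assumptions chosen for a toy example.

```python
import math
import random


def factorize(ratings, n_users, n_items, k=2, lr=0.01, reg=0.02, epochs=3000, seed=42):
    """Learn user and item vectors whose dot product approximates each rating.

    ratings: list of (user_index, item_index, rating) for observed pairs only.
    """
    rng = random.Random(seed)
    P = [[rng.uniform(0, 0.5) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.uniform(0, 0.5) for _ in range(k)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - predict(P, Q, u, i)
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # SGD step on squared error with L2 regularization.
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q


def predict(P, Q, u, i):
    return sum(p * q for p, q in zip(P[u], Q[i]))


# Hypothetical 1-5 ratings: users 0 and 1 favor items 0-1, user 2 favors items 2-3.
ratings = [(0, 0, 5), (0, 1, 4), (0, 2, 1), (1, 0, 4), (1, 1, 5), (1, 3, 1),
           (2, 0, 1), (2, 2, 5), (2, 3, 4)]

P, Q = factorize(ratings, n_users=3, n_items=4)
rmse = math.sqrt(sum((r - predict(P, Q, u, i)) ** 2 for u, i, r in ratings) / len(ratings))
print(f"training RMSE: {rmse:.3f}")
print(f"predicted rating of user 0 for unrated item 3: {predict(P, Q, 0, 3):.2f}")
```

Each new rating is fresh feedback; retraining periodically on the growing set is one way to improve recommendations gradually.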

Lessons Learned from Failures

Studying failures reveals the pitfalls of incorporating feedback into AI systems.

Case studies often reveal common pitfalls that lead to ineffective feedback mechanisms. For example, in a project aimed at improving a recommendation engine, bad data quality was a big problem. The team depended on user ratings, which were too few to produce useful results.

Another issue came from a lack of user engagement, where users were not incentivized to provide feedback, rendering the system’s input unreliable.

To avoid these failures, teams should prioritize high-quality data collection and implement user-friendly feedback channels, such as short surveys or gamified feedback systems, encouraging participation.
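Checking data sparsity before training can catch the too-few-ratings problem described above early. The sketch reports how much of the user-item matrix is filled and which users and items have too few ratings; the three-rating cutoff and the sample ratings are hypothetical.

```python
from collections import Counter


def sparsity_report(ratings, n_users, n_items, min_ratings=3):
    """Return matrix density plus users and items with too few ratings."""
    density = len(ratings) / (n_users * n_items)
    per_user = Counter(u for u, _, _ in ratings)
    per_item = Counter(i for _, i, _ in ratings)
    sparse_users = [u for u in range(n_users) if per_user[u] < min_ratings]
    sparse_items = [i for i in range(n_items) if per_item[i] < min_ratings]
    return density, sparse_users, sparse_items


# Hypothetical (user, item, rating) triples.
ratings = [(0, 0, 5), (0, 1, 3), (0, 2, 4), (1, 0, 2), (2, 1, 5)]
density, sparse_users, sparse_items = sparsity_report(ratings, n_users=4, n_items=5)
print(f"density={density:.0%}, sparse users={sparse_users}, sparse items={sparse_items}")
```

If most users or items fall below the cutoff, collecting more feedback (for example through short surveys) may matter more than tuning the model.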

Future Trends in AI Feedback Mechanisms

AI feedback systems are expected to change significantly as new technology emerges and ethical guidelines mature.

Advancements in Real-Time Feedback

Real-time feedback systems are helping AI models change quickly, improving how well they work.

By using tools like Amazon SageMaker Ground Truth or DataRobot, organizations can apply flexible labeling methods that help quickly modify training data.

For example, SageMaker Ground Truth can automatically label data that a model is confident about and route the remaining items to human labelers; as human labels accumulate, the labeling model is retrained. This creates a loop in which the system learns from both labeled and unlabeled data, improving its decisions over time.
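The idea behind confidence-based automated labeling can be sketched as follows: items the model is confident about are labeled automatically, and the least-confident ones (a simple uncertainty-sampling rule) go to human labelers. This is not the SageMaker Ground Truth API; the item IDs, probabilities, 0.9 threshold, and human budget are hypothetical.

```python
def split_for_labeling(probabilities, threshold=0.9, human_budget=2):
    """Auto-label confident items; send the least-confident ones to humans.

    probabilities: dict mapping item ID -> model probability of the positive class.
    """
    auto_labeled, uncertain = {}, []
    for item, p in probabilities.items():
        confidence = max(p, 1 - p)
        if confidence >= threshold:
            auto_labeled[item] = int(p >= 0.5)
        else:
            uncertain.append((confidence, item))
    uncertain.sort()  # least confident first (uncertainty sampling)
    to_humans = [item for _, item in uncertain[:human_budget]]
    return auto_labeled, to_humans


probs = {"img1": 0.97, "img2": 0.55, "img3": 0.05, "img4": 0.70, "img5": 0.62}
auto_labeled, to_humans = split_for_labeling(probs)
print(auto_labeled, to_humans)
```

Once humans label the queued items, the model would be retrained and the split repeated on the remaining data.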

Platforms like Kintone allow for rapid collection of user opinions. This gives immediate information that improves AI learning.

Ethical Considerations in AI Feedback Systems

Dealing with ethical issues in AI feedback systems is essential to keep things fair, open, and responsible.

To reduce bias and build user trust, follow these best practices:

- Diversify your feedback sources. Involving different user groups provides more diverse viewpoints.

- Anonymize the data you collect so users can give honest feedback without revealing their identities.

- Frequently review feedback systems with tools such as the Algorithmic Accountability Framework to identify bias.

Clearly express how user feedback shapes AI development; being open creates trust and motivates involvement in upcoming feedback efforts.
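One common way to decouple feedback from user identities is keyed hashing (HMAC), which replaces each user ID with a stable pseudonym so feedback can still be grouped per user. This is pseudonymization rather than full anonymization: whoever holds the key can re-link known IDs, and free-text comments may still contain identifying details. The record fields below are hypothetical.

```python
import hashlib
import hmac
import secrets

# Secret key kept separate from the feedback store (e.g. in a secrets manager).
KEY = secrets.token_bytes(32)

IDENTIFYING_FIELDS = {"user_id", "name", "email"}  # hypothetical schema


def pseudonymize(user_id: str, key: bytes = KEY) -> str:
    """Return a stable pseudonym that cannot be reversed without the key."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identity(record: dict, key: bytes = KEY) -> dict:
    """Drop direct identifiers and replace the user ID with a pseudonym."""
    cleaned = {k: v for k, v in record.items() if k not in IDENTIFYING_FIELDS}
    cleaned["user"] = pseudonymize(record["user_id"], key)
    return cleaned


feedback = {"user_id": "u-1042", "name": "Ada", "email": "ada@example.com",
            "rating": 2, "comment": "Answers felt biased toward one region."}
print(strip_identity(feedback))
```

Because the same user always maps to the same pseudonym, repeated reports from one person can still be recognized without storing who they are.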

Frequently Asked Questions

What is the importance of feedback for AI systems?

Feedback for AI systems is important because it makes the system more accurate and better at its tasks. It enables the system to learn from errors and improve its predictions later.

How does feedback contribute to the improvement of AI systems?

Feedback helps identify errors and biases within the system, allowing for continuous improvements and updates. It also helps to train the system on new data, improving its accuracy and overall performance.

What are some techniques used to gather feedback for AI systems?

Some common techniques for gathering feedback include user surveys, user testing, and monitoring user interactions with the system. Machine learning pipelines can also process feedback data automatically and incorporate it into the system.

Can feedback improve the ethical implications of AI systems?

Yes, feedback can help address and improve the ethical implications of AI systems. By continuously gathering feedback and monitoring the system’s performance, biases and ethical concerns can be identified and addressed, leading to more ethical and responsible AI systems.

How can AI systems improve with feedback?

Feedback helps AI systems work faster, make fewer errors, and improve user interactions. It also supports ongoing learning and adjustment to fresh data and shifts in user behavior.

Do AI systems need feedback?

Yes, feedback is important for the ongoing improvement and growth of AI systems. Without feedback, the system may become outdated and unable to meet new situations or user needs.