Com.bot Chatbot A/B Testing

“These days, making your chatbot work better isn’t just helpful — it’s key to keeping users engaged and giving your sales a real boost.”

A/B testing for chatbots offers a powerful way to evaluate different chat-flow variants, helping businesses understand what works best for their audience.

This guide covers the fundamentals of A/B testing for chatbots, including its importance, step-by-step methodology, benefits, best practices, and common pitfalls to avoid.

Whether you’re looking to improve user experience or boost conversion rates, mastering A/B testing is essential.

Key Takeaways:

- A/B testing for chatbots is a process of comparing different chat-flow variants to improve user experience and increase conversion rates.

- Running an A/B test for a chatbot involves four steps: defining goals, creating chat-flow variants, routing traffic to each variant, and measuring conversion metrics.

- A/B testing improves the user experience, raises conversion rates, yields insight into user behavior, and saves time and resources.

Contents:

- 1 What is A/B Testing for Chatbots?

- 2 How Does A/B Testing Work for Chatbots?

- 3 Why is A/B Testing Important for Chatbots?

- 4 How to Run A/B Testing for Chatbots?

- 5 What are the Benefits of A/B Testing for Chatbots?

- 6 What are the Best Practices for A/B Testing for Chatbots?

- 7 What are the Common Mistakes in A/B Testing for Chatbots?

- 8 Frequently Asked Questions

- 8.1 1. What is Com.bot Chatbot A/B Testing?

- 8.2 2. How does Com.bot Chatbot A/B Testing work?

- 8.3 3. Why is A/B Testing important for chatbots?

- 8.4 4. Can I run multiple A/B tests simultaneously on Com.bot?

- 8.5 5. Do I need coding skills to use Com.bot Chatbot A/B Testing?

- 8.6 6. How can Com.bot Chatbot A/B Testing benefit my business?

What is A/B Testing for Chatbots?

A/B testing for chatbots is a way to compare different versions of chatbot conversations to see which one engages users and converts better. This approach helps developers make decisions based on data by examining user responses and feedback, leading to a better user experience and improved chatbot function.

With A/B testing, businesses can tune their chatbot toward specific targets, such as lead generation or customer support, drawing on data analysis and machine learning to interpret the results.

How Does A/B Testing Work for Chatbots?

A/B testing for chatbots works by comparing different chatbot setups to see which one gives better user satisfaction and engagement. The process splits user traffic randomly between the versions, collects data on their interactions, and uses statistical analysis to find which chatbot conversation works best.

Developers can combine automated and manual testing techniques to catch and fix errors that surface during the tests.

Why is A/B Testing Important for Chatbots?

A/B testing is important for chatbots because it helps evaluate the success of different conversation styles, which improves user interaction and performance.

Studying how users interact with each variant yields feedback that developers can use to keep improving the chatbot’s replies. This ongoing process supports lead generation and customer communication, and advances in AI and natural language processing further improve the user experience.

Related applications, such as Com.bot’s Abandoned Cart Recovery Bot, illustrate these improvements in practice.

How to Run A/B Testing for Chatbots?

Running an A/B test on a chatbot takes a step-by-step approach to get trustworthy results. Begin by setting specific goals, such as increasing engagement or improving customer support.

Next, create different versions of the chat-flow and split traffic evenly between them. Then collect user responses, analyze the conversion data, and assess the results.

By using this method, businesses can pinpoint which chatbot version improves user experience and achieves the desired goals.

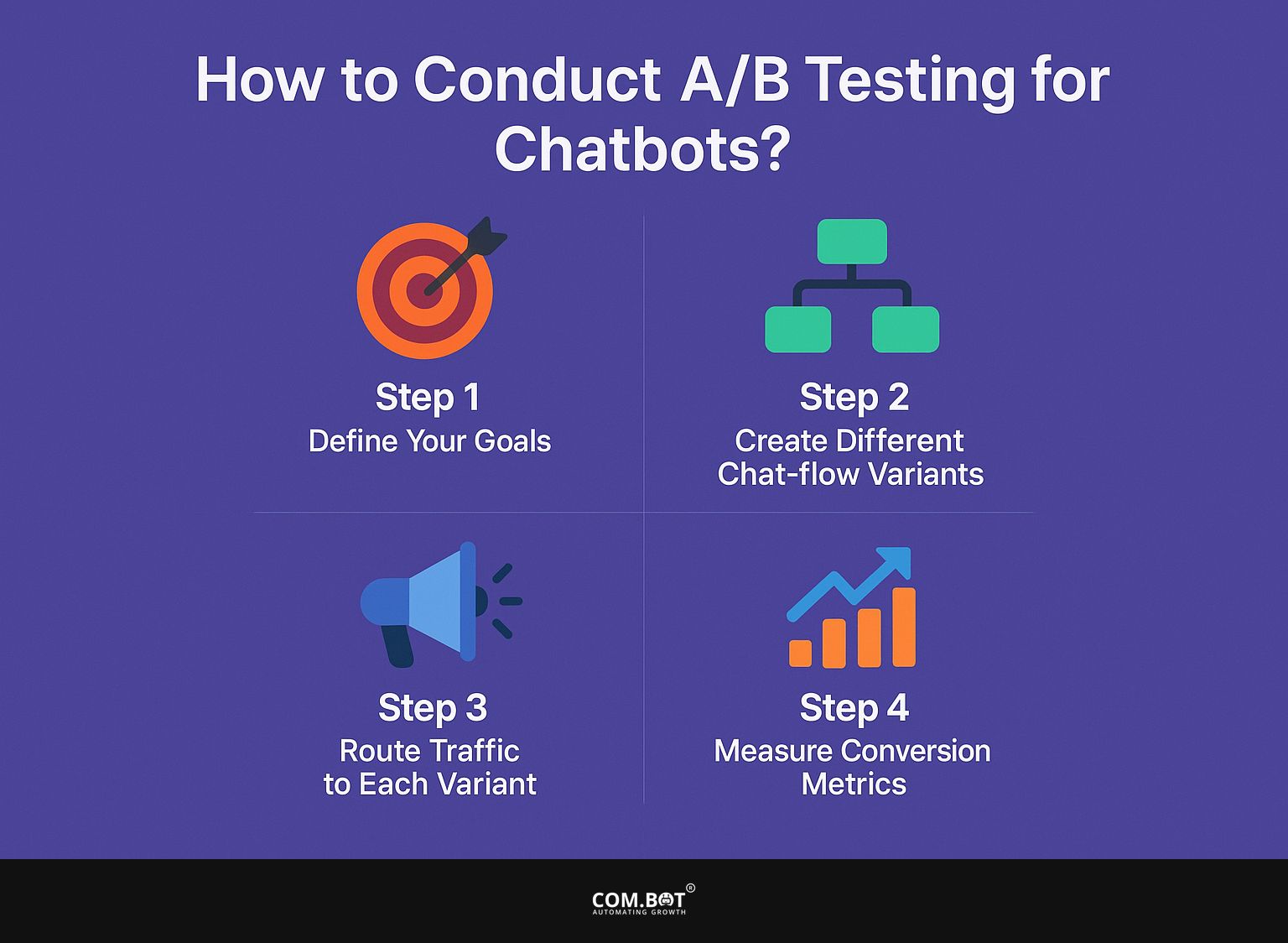

Step 1: Define Your Goals

Setting clear goals is the first and most important step in carrying out A/B testing for chatbots, as it lays the groundwork for all testing that follows. Goals might include making the user experience better, increasing conversion rates, or improving how customers communicate with the chatbot system. Setting clear, measurable goals helps developers assess how well different chat-flow versions work and confirm that the testing matches overall company plans.

When setting objectives, developers should define concrete metrics such as response time, user satisfaction scores, and drop-off rates during interactions.

These metrics show how well each chatbot version works and how users interact with it. Goals should also connect to the company’s overall plans. For instance, if the goal is to increase sales through lead generation, track how many users complete the sales process after using a specific chatbot version.

Aligning objectives with audience needs raises the likelihood of achieving significant outcomes.
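As a rough illustration, the metrics named above could be computed per variant from logged sessions. This is a minimal sketch, not a Com.bot API: the `Session` record, its field names, and the `summarize` helper are all assumptions made for the example.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Session:
    """One logged chat session (hypothetical schema for illustration)."""
    variant: str                 # which chat-flow variant served the session
    completed: bool              # did the user finish the flow (e.g. submit a lead form)?
    response_ms: float           # average bot response time in milliseconds
    satisfaction: Optional[int]  # optional 1-5 rating; None if the user skipped it


def summarize(sessions: list, variant: str) -> dict:
    """Compute goal metrics for a single variant."""
    rows = [s for s in sessions if s.variant == variant]
    if not rows:
        raise ValueError(f"no sessions recorded for variant {variant!r}")
    ratings = [s.satisfaction for s in rows if s.satisfaction is not None]
    completion_rate = sum(s.completed for s in rows) / len(rows)
    return {
        "sessions": len(rows),
        "completion_rate": completion_rate,
        "dropout_rate": 1 - completion_rate,
        "avg_response_ms": mean(s.response_ms for s in rows),
        # NaN signals "no ratings collected" rather than a score of zero
        "avg_satisfaction": mean(ratings) if ratings else float("nan"),
    }
```

Calling `summarize(sessions, "A")` and `summarize(sessions, "B")` on the same log gives two comparable metric sets, which is the shape of data the later steps work with.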

Step 2: Create Different Chat-flow Variants

Creating different chat-flow variants is what makes the comparison possible. It lets developers try out different approaches to conversation design and user interaction. Each variant should be built around user intent and should account for user sentiment and contextual cues.

Personalization matters because it lets the chatbot give specific replies that resonate with users. Using the keywords and topics users actually care about keeps exchanges relevant, clear, and short.

By using different methods in chat-flow design, businesses can successfully understand user preferences, increase interaction, and improve satisfaction and retention rates.
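One way to keep variants manageable is to describe each chat-flow as data and derive every variant from a control. The sketch below assumes a simplified, hypothetical `ChatFlow` with just a greeting and a call to action; real flows have many more fields, and the example messages are invented.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class ChatFlow:
    """A simplified chat-flow definition (hypothetical fields)."""
    name: str
    greeting: str
    call_to_action: str


def changed_fields(control: ChatFlow, variant: ChatFlow) -> list:
    """List the content fields in which a variant differs from the control."""
    return [
        f.name
        for f in fields(control)
        if f.name != "name" and getattr(control, f.name) != getattr(variant, f.name)
    ]


control = ChatFlow(
    name="control",
    greeting="Hello. How may I assist you today?",
    call_to_action="Enter your email to receive a quote.",
)

# Derive the variant from the control so that only the greeting changes.
casual_greeting = replace(
    control,
    name="casual_greeting",
    greeting="Hi there! What can I help you with?",
)

print(changed_fields(control, casual_greeting))
```

A check like `changed_fields` makes it easy to confirm, before launch, exactly what a variant changes relative to the control.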

Step 3: Route Traffic to Each Variant

Directing traffic correctly to each chat-flow version is a key part of A/B testing for chatbots. It makes sure that user interactions are spread out evenly among the different versions.

Developers can use allocation strategies that range from a fixed random split to dynamic distribution that adjusts the split as results come in. Whatever the strategy, routing should stay fast so that it does not add delay to the chatbot’s responses.

Collecting data on each interaction shows how users behave and what they prefer. That data can then guide incremental changes that make the chatbot simpler and clearer to use.

The main aim is to develop a smooth interaction process that meets users’ particular needs, increasing their happiness and involvement.
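A common way to get an even, repeatable split is to hash a stable user identifier into a bucket. The sketch below is one possible approach, not a description of how Com.bot routes traffic; `assign_variant` and its parameters are names chosen for this example.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list) -> str:
    """Deterministically map a user to one variant of an experiment.

    Hashing the experiment name together with the user ID means the same
    user always gets the same variant within an experiment, while different
    experiments split users independently of each other.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


# The same user lands in the same variant on every visit.
first = assign_variant("user-42", "greeting-test", ["A", "B"])
second = assign_variant("user-42", "greeting-test", ["A", "B"])
print(first == second)
```

Because the assignment is a pure function of the IDs, no lookup table is needed and the routing step adds almost no latency.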

Step 4: Measure Conversion Metrics

Checking conversion metrics is a key part of A/B testing for chatbots, as it shows how well each version works. By employing statistical analysis techniques, developers can evaluate how well each chatbot performs against defined goals, such as engagement rates or bounce rates. This testing stage lets us make informed choices to improve the next versions of the chatbot.

To effectively measure conversion metrics, it’s essential to track various indicators, including user interactions, session duration, and conversion rates, which reflect the percentage of visitors completing desired actions.

Analyzing drop-off points can highlight areas that need improvement. Statistical analysis then determines whether the differences between versions are statistically significant or could be due to chance.

Using methods like hypothesis testing and confidence intervals helps make sure that the results are dependable, helping chatbot developers improve user experiences and reach better engagement and conversion rates.
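For conversion rates, hypothesis testing and confidence intervals often take the form of a two-proportion z-test. The sketch below uses only the Python standard library; the function name and the returned keys are assumptions for illustration, and the normal approximation it relies on is only reasonable when each variant has a fairly large number of sessions.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_test(conv_a: int, n_a: int, conv_b: int, n_b: int,
                        confidence: float = 0.95) -> dict:
    """Compare the conversion rates of variants A and B."""
    p_a, p_b = conv_a / n_a, conv_b / n_b

    # Hypothesis test: pooled standard error under "no difference".
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se_pooled == 0:
        raise ValueError("conversion rates of exactly 0% or 100% cannot be tested")
    z = (p_b - p_a) / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided

    # Confidence interval for the difference: unpooled standard error.
    se_diff = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    margin = NormalDist().inv_cdf(1 - (1 - confidence) / 2) * se_diff

    return {
        "difference": p_b - p_a,
        "z": z,
        "p_value": p_value,
        "ci_low": (p_b - p_a) - margin,
        "ci_high": (p_b - p_a) + margin,
    }


# Made-up counts: 100 of 1,000 sessions converted on A, 130 of 1,000 on B.
result = two_proportion_test(100, 1000, 130, 1000)
print(result)
```

The p-value indicates whether the difference is likely to be real, while the interval shows how large or small it plausibly is; both are worth reading before picking a winner.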

What are the Benefits of A/B Testing for Chatbots?

A/B testing offers benefits for chatbots, enhancing user experience and increasing conversion rates.

By carefully examining different chat-flow versions, companies can find useful information that shapes their chatbot strategies, contributing to the overall success of chatbots.

A/B testing improves conversation flow by aligning it with user preferences and supports continuous improvement in customer interactions, which leads to higher satisfaction and engagement. For more on how chatbots can drive sales and improve customer interaction, see our Com.bot Conversational Commerce Bot.

1. Improves User Experience

A major advantage of A/B testing for chatbots is that it can make user interactions better by adjusting conversation paths based on actual user responses. This testing method enables developers to identify what works best for their audience, leading to more efficient interactions and higher engagement rates.

By constantly improving chatbot replies and features, companies can make users happier, which encourages them to return and use the service again.

By conducting tests in an organized way, teams can experiment with various conversation setups, timing of prompts, and types of responses, concentrating on what users like.

Each A/B test is a chance to learn how users interact with the chatbot, showing which parts work well and which need improvement. As feedback comes in, teams can quickly adjust the chatbot to match user preferences. The result is more personalized and helpful interactions, which improve user satisfaction and performance metrics.

2. Increases Conversion Rates

A/B testing helps increase conversion rates in chatbots. It helps businesses modify how they communicate to encourage users to do things like provide their contact information or purchase items.

By looking at how different chat-flow versions work, developers can find the best methods for increasing conversions and improving customer interactions. This focused method eventually results in more sales and better business results.

For example, a company that tests two chatbot scripts side by side might find that an engaging, casual tone generates 25% more leads than a formal style.

By looking at click-through rates and user engagement numbers, businesses can see which parts of their content work best with their audience. Changing visual elements such as buttons or call-to-action prompts based on A/B test results can also raise click rates, in some cases by as much as 30%, although gains vary by audience and context.

Using data to make decisions helps improve how customers feel about their interactions and encourages repeat business, which ultimately boosts earnings.
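Percentage improvements like these are usually relative lifts, which are easy to confuse with absolute changes in the conversion rate. The short sketch below shows the difference using made-up rates; the function names are chosen for this example.

```python
def absolute_lift(rate_control: float, rate_variant: float) -> float:
    """Difference in conversion rate, in percentage points (as a fraction)."""
    return rate_variant - rate_control


def relative_lift(rate_control: float, rate_variant: float) -> float:
    """Improvement relative to the control's conversion rate."""
    if rate_control <= 0:
        raise ValueError("control rate must be positive")
    return (rate_variant - rate_control) / rate_control


# Made-up rates: 4% of sessions convert on the control, 5% on the variant.
print(f"absolute: {absolute_lift(0.04, 0.05):.1%}")
print(f"relative: {relative_lift(0.04, 0.05):.1%}")
```

Here a one-point absolute gain corresponds to a 25% relative lift, so it helps to state which of the two a reported figure refers to.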

3. Provides Valuable Insights

A/B testing provides useful information that helps businesses learn about user actions and choices, enabling informed decisions about improving chatbots. By looking at how different chat-flow versions perform, developers can find patterns in how users interact and spot areas that need performance testing.

The information helps improve chatbot development and also shapes marketing plans and user experience improvements. This approach lets teams update the chatbot using information that fits users’ needs, ensuring it performs correctly and is user-friendly.

Knowing the details of how users interact helps companies improve their marketing efforts by creating messages that match what users like. By using this information to keep improving their chatbots, organizations can stand out in the online market, build stronger relationships with their audience, and increase conversion rates.

4. Saves Time and Resources

A/B testing can save businesses time and resources by allowing them to identify the most effective chatbot strategies without the need for extensive trial and error.

By using information from data to guide decisions, developers can concentrate on improving successful chat-flow versions, which helps to make performance testing and resource management more effective. This efficiency results in reducing costs and speeding up the creation of chatbots.

For instance, when launching a new customer support chatbot, teams do not have to bet on a single version being right the first time. They can quickly test variations of response templates or engagement scripts, which helps ensure that end users receive the better-performing option.

As a result, companies avoid fully rolling out untested solutions that could need costly fixes after launch.

By using A/B testing, organizations make better use of their creative and technical resources, helping them improve user satisfaction with strong, user-friendly interfaces that truly improve the customer experience.

What are the Best Practices for A/B Testing for Chatbots?

To test chatbot versions effectively and get trustworthy results, follow a few established practices.

Test one element at a time, use a large sample size, run tests for a sufficient duration, and analyze the results correctly.

These steps help developers make user interaction better and improve chatbot performance.

1. Test One Element at a Time

Testing one element at a time is a fundamental best practice in A/B testing for chatbots, as it allows developers to isolate the effects of individual changes on user experience and performance metrics.

Isolation matters because even small changes can significantly affect how users interact with the chatbot.

For example, a tweak in the way a chatbot greets users can influence initial impressions and engagement levels. Experimenting with different call-to-action phrases can reveal which wording increases conversion rates.

By separately examining each element, like the chatbot’s tone or how quickly it replies, developers can identify what works best for their users.

This focused method improves overall results, leading to better satisfaction and keeping more customers, which are important for any digital customer service plan to succeed.

2. Use a Large Sample Size

Utilizing a large sample size in A/B testing is critical for obtaining statistically significant results and ensuring that the findings are representative of the broader user base.

A larger sample allows for more reliable statistical analysis, reducing the margin of error and increasing confidence in the observed effects of different chat-flow variants on user engagement. Therefore, companies can make better choices using solid information.

The expanded data set improves the accuracy of the findings and shows more clearly how changes impact various groups within the target audience.

By capturing diverse behavior patterns and responses, organizations can identify trends that may not be evident in smaller samples. A larger participant pool mitigates the risk of anomalies skewing results, allowing for greater validity when interpreting the effects of changes implemented in chat interactions.

A carefully planned A/B test with enough participants helps businesses improve their strategies and increase customer happiness.
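How many participants count as "enough" can be estimated before the test starts. The sketch below uses a standard sample-size approximation for comparing two proportions; the default significance level (5%) and power (80%) are common conventions rather than requirements, and the baseline and effect values in the example are made up.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline: float, min_effect: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sessions needed per variant for a two-sided test.

    baseline   -- current conversion rate, e.g. 0.10 for 10%
    min_effect -- smallest absolute change worth detecting, e.g. 0.02
    """
    p1, p2 = baseline, baseline + min_effect
    if not (0 < p1 < 1 and 0 < p2 < 1) or min_effect == 0:
        raise ValueError("rates must lie strictly between 0 and 1 and differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / min_effect ** 2)


# Detecting a change from 10% to 12% needs a few thousand sessions per variant.
print(sample_size_per_variant(0.10, 0.02))
```

Halving the detectable effect roughly quadruples the required sample, which is why small expected improvements call for much larger tests.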

3. Test for a Sufficient Duration

It’s important to test long enough in A/B testing to fully understand how users behave over time. Rushing the testing process can lead to misleading results, as variations in user engagement may occur due to temporal factors such as seasonality or promotional events.

Letting tests run long enough to observe the full impact of a change gives businesses more complete data and supports better decisions. Besides external influences, internal changes such as a new marketing campaign or a website update can affect how users engage during the test, so note them when interpreting results.

A carefully organized testing phase helps organizations notice trends from various user groups, revealing behaviors that might not be obvious at first.

It’s important to examine factors such as the number of visitors, the rate of sales or sign-ups, and the characteristics of users during testing. By considering these factors, companies can better understand and change their strategies, resulting in improved performance and user satisfaction.
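A rough test duration can be derived from the required sample size and typical traffic. The sketch below is an assumption-laden estimate: it treats daily traffic as constant, and rounding up to whole weeks is a common rule of thumb for covering weekday and weekend behavior, not a strict requirement.

```python
from math import ceil


def estimate_duration_days(sample_per_variant: int, variants: int,
                           daily_sessions: int, whole_weeks: bool = True) -> int:
    """Estimate how many days a test must run to reach its sample size."""
    if daily_sessions <= 0:
        raise ValueError("daily_sessions must be positive")
    total_needed = sample_per_variant * variants
    days = ceil(total_needed / daily_sessions)
    if whole_weeks:
        # Round up so every day of the week is represented equally.
        days = ceil(days / 7) * 7
    return days


# Made-up numbers: 3,841 sessions per variant, 2 variants, 500 sessions a day.
print(estimate_duration_days(3841, 2, 500))
```

If the estimate comes out at several months, that is usually a sign to test a bolder change or a higher-traffic flow rather than to stop the test early.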

4. Analyze and Interpret Results Correctly

Understanding and reviewing results from A/B testing is important for gaining useful information and improving chatbots. For developers working on improving user interactions, it is essential to grasp the details of the collected data.

Using methods like hypothesis testing and confidence intervals gives a better view of how different versions perform, showing both if a change is helpful and how much it matters.

Techniques such as multivariate testing can offer more detailed data, allowing the analysis of multiple elements simultaneously.

Focusing on both statistical significance and effect size helps avoid premature conclusions that could hurt user engagement with the chatbot. A careful review of the details supports sound decisions and informs the next round of tests.

What are the Common Mistakes in A/B Testing for Chatbots?

While A/B testing is a great way to make chatbots better, there are some frequent mistakes that can reduce its effectiveness and produce wrong outcomes.

These errors include:

- Not setting clear objectives

- Testing too many options at once

- Not examining results correctly

- Ignoring the need for proper A/B testing tools

By recognizing these mistakes, developers can have a more successful testing process that improves user experience and achieves better outcomes.

1. Not Defining Clear Goals

One of the most significant mistakes in A/B testing for chatbots is failing to define clear goals at the outset, which can lead to a lack of focus and direction in the testing process. Without clear goals, developers might find it hard to assess success or figure out which versions are actually working well. Setting clear goals is important for directing the whole testing effort and gaining useful information from it.

When organizations do not define their goals, they might struggle to match their testing results with overall business plans, which can hurt performance improvement efforts.

To prevent this error, set goals that are specific, measurable, achievable, relevant, and time-bound, and that align with the company’s main objectives.

By defining what success looks like, such as increasing user engagement or improving conversation completion rates, teams can focus their efforts on meaningful changes that drive tangible results. Clear goals also strengthen the testing process and promote a culture of data-driven decision-making.

2. Testing Too Many Variants at Once

Testing too many variants at once is a common mistake in A/B testing for chatbots, as it can create confusion and make it difficult to determine which changes are responsible for observed user behavior.

When too many elements are modified simultaneously, isolating the impact of individual changes on user engagement becomes challenging. This can lead to inconclusive results and hinder effective performance optimization.

In fact, the absence of clarity regarding which variable is driving any shifts in user interaction can render the entire testing process ineffective. Without a focused plan that tackles one aspect at a time, teams might reach wrong decisions, leading to wasted resources and time.

This is especially important in improving chatbot performance, where small adjustments can lead to major changes. By using a careful approach to testing, you can make sure each change is thoroughly checked, leading to decisions based on data that improve user experience and engagement.
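There is also a statistical cost to comparing many variants at once: each extra comparison adds another chance of a false positive. The sketch below shows the arithmetic under the simplifying assumption that the comparisons are independent, along with the Bonferroni correction, which is one conservative way to compensate.

```python
def family_wise_error_rate(alpha: float, comparisons: int) -> float:
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** comparisons


def bonferroni_alpha(alpha: float, comparisons: int) -> float:
    """Per-comparison threshold that keeps the overall error rate near alpha."""
    if comparisons < 1:
        raise ValueError("comparisons must be at least 1")
    return alpha / comparisons


# Five variants each compared with the control at the usual 5% threshold.
print(f"{family_wise_error_rate(0.05, 5):.1%}")
print(bonferroni_alpha(0.05, 5))
```

With five comparisons, the chance of at least one spurious "winner" rises from 5% to roughly 23%, which is one more reason to keep the number of simultaneous variants small.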

3. Not Analyzing Results Properly

Not properly examining the results is a major mistake that can throw off the A/B testing process for chatbots, leading to wrong decisions and missed chances to improve performance.

Developers need to use the right statistical analysis methods to correctly understand the data collected, identify important differences between options, and evaluate what these mean for user experience. This includes tracking basic metrics like conversion rates and user interaction, and also figuring out the reasons behind the data.

Using methods like regression analysis or repeated tests can help understand how changes work over time. Common pitfalls such as ignoring external factors or failing to segment audiences can lead to skewed interpretations of the results, emphasizing the need for diligence.
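Segmenting results does not require special tooling. The sketch below groups conversion outcomes by audience segment and variant; the record layout (segment, variant, converted) and the sample values are assumptions for illustration.

```python
from collections import defaultdict


def conversion_by_segment(records) -> dict:
    """Return the conversion rate for each (segment, variant) pair.

    records -- iterable of (segment, variant, converted) tuples
    """
    totals = defaultdict(lambda: [0, 0])  # key -> [conversions, sessions]
    for segment, variant, converted in records:
        totals[(segment, variant)][0] += int(converted)
        totals[(segment, variant)][1] += 1
    return {key: conv / n for key, (conv, n) in totals.items()}


# Made-up records: the variant helps mobile users but not desktop users.
records = [
    ("mobile", "A", False), ("mobile", "A", False),
    ("mobile", "B", True), ("mobile", "B", False),
    ("desktop", "A", True), ("desktop", "A", False),
    ("desktop", "B", True), ("desktop", "B", False),
]
for key, rate in sorted(conversion_by_segment(records).items()):
    print(key, rate)
```

A variant that looks neutral overall can still help one segment and hurt another, so segment-level rates are worth checking before drawing conclusions. Each segment still needs enough sessions of its own for the comparison to mean anything.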

Keeping the analysis tied to the test’s original goals helps turn results into practical next steps.

4. Not Utilizing A/B Testing Tools

Not using the right A/B testing tools is another common mistake that can greatly reduce the success of chatbot testing. These tools provide important resources for managing tests, verifying results, and enhancing user interactions effectively.

By using the right technology, developers can make the testing process simpler and make sure they get useful results from their A/B tests. Popular A/B testing tools include:

- Optimizely

- Google Optimize (discontinued by Google in September 2023)

- VWO

These tools are known for being easy to use and having strong features.

Businesses can use these tools to test different elements of their chatbots, including how messages are phrased, where buttons are placed, and the general user experience. This helps them find out which options work best for their users.

These tools go beyond simple side-by-side comparison: they support detailed analysis and integrate with analytics platforms, which can lead to higher user retention and satisfaction. In the end, A/B testing done well can greatly improve user engagement and overall results.

Frequently Asked Questions

1. What is Com.bot Chatbot A/B Testing?

Com.bot Chatbot A/B Testing is a feature that lets you create split tests for different chat-flow variants. It automatically routes traffic between the variants and compares their conversion metrics side by side in its analytics dashboard.

2. How does Com.bot Chatbot A/B Testing work?

Com.bot Chatbot A/B Testing works by creating different versions of your chatbot’s conversation flow and randomly routing traffic to each variant. It then compares the conversion metrics of each variant in its analytics dashboard.

3. Why is A/B Testing important for chatbots?

A/B Testing helps chatbots by trying out different conversation paths to make user interactions better, increase customer happiness, and boost conversion rates.

4. Can I run multiple A/B tests simultaneously on Com.bot?

Yes, you can run multiple A/B tests simultaneously on Com.bot. It allows you to create and test different chat-flow variants for different scenarios, audiences, or goals.

5. Do I need coding skills to use Com.bot Chatbot A/B Testing?

No, you do not need coding skills to use Com.bot Chatbot A/B Testing. It is a user-friendly platform that allows you to create, test, and analyze chatbot conversation flows without any coding knowledge.

6. How can Com.bot Chatbot A/B Testing benefit my business?

Com.bot Chatbot A/B Testing can help your business refine your chatbot’s conversation style, leading to more user interaction and higher conversion rates. It provides data to help you make informed decisions about your chatbot strategy.