Feedback for AI Systems: Importance and Improvement Techniques

In the fast-changing field of artificial intelligence, good feedback is key to improving how AI systems make decisions and perform. By setting up strong monitoring systems, developers can combine user feedback with machine learning methods to refine their algorithms. This article discusses why feedback matters in AI development, how to gather it, and practical steps for continuous improvement that help your AI systems meet expectations and perform well.

Key Takeaways:

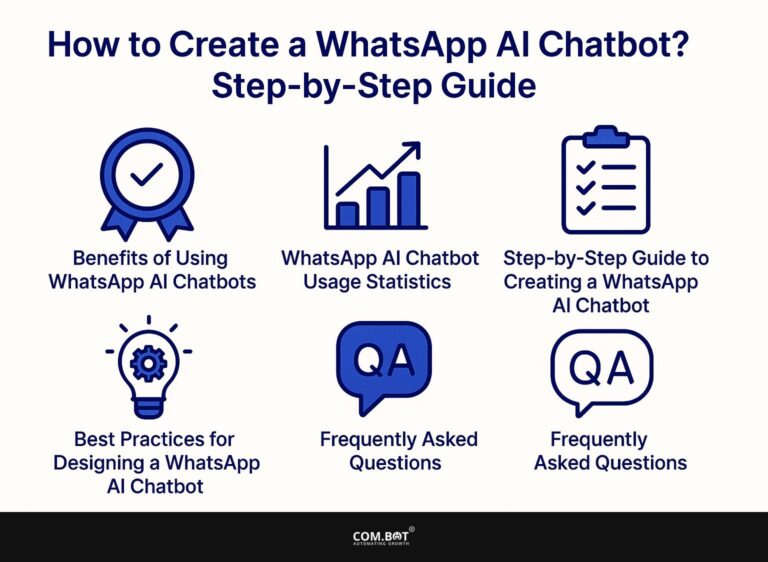

- 1 The Importance of Feedback in AI

- 2 Types of Feedback Mechanisms

- 3 AI Feedback Mechanisms Statistics

- 4 Techniques for Gathering Feedback

- 5 Implementing Feedback for Improvement

- 6 Challenges in Feedback Implementation

- 7 Future Trends in AI Feedback Systems

- 8 Frequently Asked Questions

- 8.1 What is the importance of feedback for AI systems?

- 8.2 How does feedback help improve AI systems?

- 8.3 What are some techniques for giving feedback to AI systems?

- 8.4 Why is continuous feedback important for AI systems?

- 8.5 How can feedback be used to prevent bias in AI systems?

- 8.6 Can user feedback be used to improve AI systems?

Definition and Overview

AI feedback systems encompass methods and technologies that allow AI to learn from human input and system performance, focusing on continuous improvement.

In healthcare, AI systems examine the results of treatments and how patients react, giving doctors information to tailor care to each individual.

Tools like IBM Watson have been used to suggest treatment plans based on historical data and ongoing patient feedback.

In e-commerce, platforms like Salesforce Einstein use customer interaction data to improve product recommendations, which can increase sales by better matching what consumers like.

These systems help organizations make better decisions and adapt how they deliver services.

Significance in AI Development

Feedback systems are central to AI development: they affect both a model's accuracy and its ability to adapt through ongoing changes and updates.

For instance, reinforcement learning (RL) has been applied to autonomous driving. By rewarding good outcomes, such as well-timed braking and smooth acceleration, these systems learn to make better decisions.

Some research suggests that RL models can improve driving efficiency by more than 20% after integrating user feedback. Tools like OpenAI’s Gym (now maintained as Gymnasium) let developers simulate different scenarios, helping them collect feedback and iterate on algorithms quickly.

This iterative process supports continuous learning, resulting in safer and more dependable AI systems.
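The reward-driven loop described above can be illustrated with a toy epsilon-greedy multi-armed bandit, a much simpler relative of full reinforcement learning. This is a minimal sketch, not a driving controller: the three braking strategies and their reward probabilities are hypothetical, and the "environment" is a random simulator rather than Gym or Gymnasium.

```python
import random

# Hypothetical braking strategies and the probability that each yields a
# good outcome (reward 1). The agent never reads these values directly.
TRUE_REWARD_PROB = {"brake_late": 0.2, "brake_early": 0.5, "brake_smooth": 0.8}


def run_bandit(steps: int = 5000, epsilon: float = 0.1, seed: int = 0) -> dict[str, float]:
    """Learn an estimated reward per action using epsilon-greedy exploration."""
    rng = random.Random(seed)
    actions = list(TRUE_REWARD_PROB)
    estimates = {a: 0.0 for a in actions}  # running mean reward per action
    counts = {a: 0 for a in actions}

    for _ in range(steps):
        # Explore with probability epsilon, otherwise exploit the best estimate so far.
        if rng.random() < epsilon:
            action = rng.choice(actions)
        else:
            action = max(actions, key=estimates.get)

        # Feedback signal from the simulated environment.
        reward = 1.0 if rng.random() < TRUE_REWARD_PROB[action] else 0.0

        # Incremental mean update: Q <- Q + (r - Q) / n
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates


if __name__ == "__main__":
    learned = run_bandit()
    print(max(learned, key=learned.get))
```

The small `epsilon` keeps the agent occasionally trying other strategies, so a strategy that looked bad early on can still be re-evaluated as more feedback arrives.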

The Importance of Feedback in AI

Effective feedback systems improve AI performance and user satisfaction, which in turn shapes trust and engagement.

Enhancing Accuracy and Performance

Incorporating feedback into AI systems can reportedly improve accuracy by up to 30%; Amazon’s product recommendation tools are a frequently cited example.

Amazon achieves this by utilizing user ratings and purchase patterns to fine-tune its algorithms.

For instance, when users rate products, the system collects these ratings and adjusts its recommendations accordingly. Through this feedback loop, the system learns customer preferences and makes better suggestions.
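A minimal sketch of a ratings feedback loop, assuming a simple popularity-style recommender that ranks items by their running average rating. Amazon's actual system is far more sophisticated; the class, method, and item names here are hypothetical.

```python
from collections import defaultdict


class RatingRecommender:
    """Hypothetical recommender that re-ranks items as new ratings arrive."""

    def __init__(self, min_ratings: int = 1) -> None:
        self.totals = defaultdict(float)  # sum of ratings per item
        self.counts = defaultdict(int)    # number of ratings per item
        self.min_ratings = min_ratings    # skip items with too few ratings

    def record_rating(self, item: str, rating: int) -> None:
        """Feedback step: store a 1-5 star rating for an item."""
        if not 1 <= rating <= 5:
            raise ValueError("rating must be between 1 and 5")
        self.totals[item] += rating
        self.counts[item] += 1

    def average(self, item: str) -> float:
        return self.totals[item] / self.counts[item]

    def recommend(self, k: int = 3) -> list[str]:
        """Return the k eligible items with the highest average rating."""
        eligible = [i for i, n in self.counts.items() if n >= self.min_ratings]
        return sorted(eligible, key=self.average, reverse=True)[:k]


rec = RatingRecommender()
for item, stars in [("kettle", 4), ("toaster", 2), ("blender", 5), ("toaster", 3)]:
    rec.record_rating(item, stars)
print(rec.recommend(k=2))  # ['blender', 'kettle']
```

Here a single 5-star rating puts "blender" on top, which is why real systems usually weight by rating count or use collaborative filtering rather than raw averages.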

Running A/B tests on recommendation algorithms lets Amazon compare strategies and keep the ones that perform best. These methods can improve user satisfaction, increase sales, and encourage repeat business.

User Satisfaction and Trust

Strong feedback systems can reportedly raise user satisfaction by 25%, as improved customer support systems in sectors such as finance illustrate.

For example, a financial services company might use a tool like Zendesk to track customer questions and gather useful information. By analyzing feedback trends, the company can identify common pain points and adjust its services accordingly.
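Analyzing feedback trends can start with comparing how often each issue category appears in two periods. The sketch below assumes tickets have already been tagged with a category (for example, in an export from a help-desk tool); the category names and sample data are hypothetical.

```python
from collections import Counter


def category_trends(previous: list[str], current: list[str]) -> list[tuple[str, int, int, int]]:
    """Return (category, previous_count, current_count, change), largest increase first."""
    prev_counts = Counter(previous)
    curr_counts = Counter(current)
    categories = set(prev_counts) | set(curr_counts)
    rows = [
        (cat, prev_counts[cat], curr_counts[cat], curr_counts[cat] - prev_counts[cat])
        for cat in categories
    ]
    # Sort by change (descending), then by name so the order is deterministic.
    return sorted(rows, key=lambda row: (-row[3], row[0]))


# Hypothetical tagged tickets from two consecutive months.
last_month = ["login", "fees", "login", "transfer_delay"]
this_month = ["fees", "fees", "fees", "login", "transfer_delay", "transfer_delay"]

for category, before, after, change in category_trends(last_month, this_month):
    print(f"{category:15} {before} -> {after} ({change:+d})")
```

Categories with the largest increase ("fees" in this sample) are natural candidates for a closer look.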

Regular surveys sent through platforms like SurveyMonkey can reveal customer sentiment, allowing businesses to fine-tune their support processes. This proactive approach builds trust and increases loyalty, because users see that their feedback shapes the service they receive.

Types of Feedback Mechanisms

AI systems use different mechanisms to gather feedback and improve, including direct input from users and automated performance checks. Combining these mechanisms can significantly enhance AI performance by providing insight into both user interactions and automated evaluations.

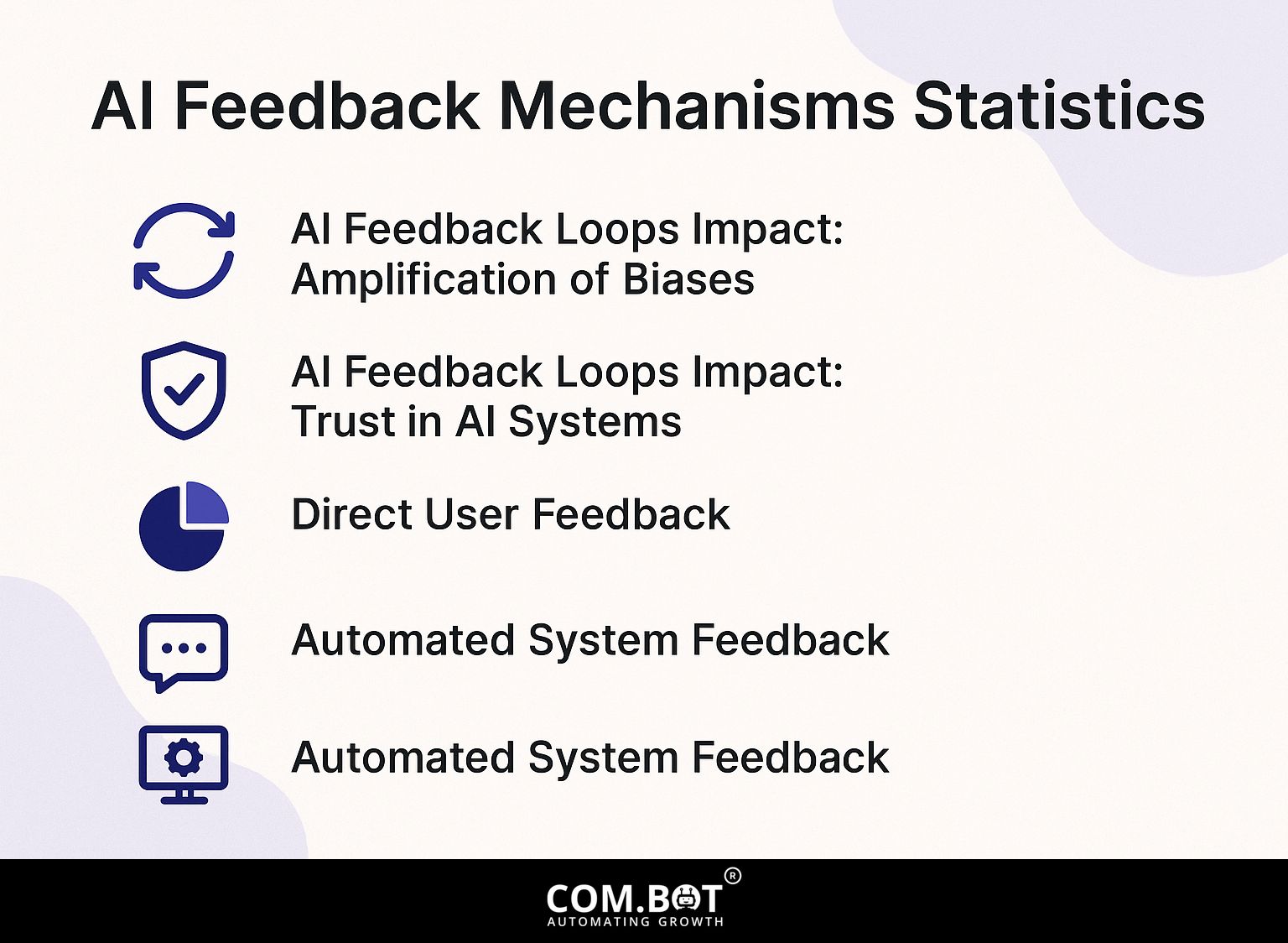

AI Feedback Mechanisms Statistics

[Figure: AI Feedback Loops Impact, with panels on Amplification of Biases, Trust in AI Systems, and Accuracy and Bias in AI Systems]

These statistics summarize how AI systems affect bias, trust, and decision-making. The data shows that AI systems can amplify biases and shape how much people trust and rely on them.

- Amplification of Biases: Human bias rises to 56.3% after interacting with AI, compared with 51.45% after interacting with other humans. AI bias in emotion classification reaches 65.33%. This suggests that AI systems used for tasks such as emotion recognition can amplify existing biases, which highlights the need for strong bias-reduction strategies in AI development.

- Trust in AI Systems: 32.72% of participants change their decisions based on AI advice, far more than the 11.27% who change them based on human advice. When the AI is presented as a human, the share drops to 16.84%. This points to a complex interaction between perceived trustworthiness and influence: participants are more inclined to follow AI advice than human advice, despite the AI's potential biases.

- Accuracy and Bias in AI Systems: AI response bias is 50.0% with noisy labels but drops to 3.0% with accurate labels, underscoring the importance of accurate data labeling for reducing bias. With participants at a baseline of 49.9% correct responses, AI systems can either substantially aid or hinder decision accuracy, depending on data quality.

These statistics reflect the critical challenge of managing bias and trust in AI systems. Dealing with these issues is important for making the most of AI’s benefits and reducing its negative effects, leading to more ethical and useful AI applications.

Direct User Feedback

Gathering input directly from users through surveys and interviews is essential for understanding what they want from an AI system.

Tools like SurveyMonkey or Google Forms facilitate this process by allowing organizations to create custom surveys that target specific user demographics.

For instance, using SurveyMonkey, a team can design a 10-question survey focusing on user satisfaction and feature requests for their AI product. By analyzing the responses, they can identify trends and areas for improvement, leading to more intuitive AI functionality.

Incorporating user feedback regularly helps the AI system improve and better meet user needs, increasing both satisfaction and usefulness.

Automated System Feedback

Automated feedback systems use performance data and error reports to make real-time adjustments to AI models, making them more responsive.

Tools like Google Analytics and Mixpanel are commonly used in this process. For instance, you can set up event tracking in Google Analytics to monitor user interactions such as clicks, alongside metrics like session duration.

This data shows which features perform well and which need adjustment. Error-reporting tools like Sentry alert developers to issues in real time so they can address them quickly.
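Automated feedback often takes the form of a monitor that watches a live quality signal and raises a flag when it degrades. The sketch below assumes each prediction is eventually marked correct or incorrect (for example, from user corrections or error reports); the class name, window size, and threshold are hypothetical, and in practice the flag might open an alert or trigger retraining.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent predictions and flag degradation."""

    def __init__(self, window: int = 100, threshold: float = 0.9) -> None:
        self.outcomes = deque(maxlen=window)  # True = correct, False = incorrect
        self.threshold = threshold

    def record(self, correct: bool) -> None:
        self.outcomes.append(correct)

    def accuracy(self) -> float:
        if not self.outcomes:
            return 1.0  # no evidence of a problem yet
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self) -> bool:
        # Only judge once the window is full, to avoid noisy early alerts.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy() < self.threshold


monitor = AccuracyMonitor(window=10, threshold=0.8)
for correct in [True] * 8 + [False] * 2:
    monitor.record(correct)
print(monitor.accuracy(), monitor.needs_attention())  # 0.8 False

monitor.record(False)  # the oldest True drops out of the window
print(monitor.accuracy(), monitor.needs_attention())  # 0.7 True
```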

When these systems are combined, companies can keep improving their AI models by learning from actual user behavior, which improves both model performance and user satisfaction.

Techniques for Gathering Feedback

Gathering feedback through surveys and by observing how people interact with the system is essential for improving AI systems and meeting user requirements.

Surveys and Questionnaires

Surveys built with tools like Typeform or Google Forms can reportedly reach more than 80% of users, giving teams broad input for measuring AI performance.

To get useful feedback, ask questions that elicit specific details. For example, ask users to rate your product on a scale from 1 to 5, and include open-ended questions such as ‘What features do you find most useful?’ or ‘What improvements would you suggest?’

After collecting responses, group the answers to identify common themes. Spreadsheet programs like Excel or Google Sheets can help you examine the data, spot patterns, and prioritize the changes users suggest.
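Once responses are exported from the survey tool (for example as CSV), the 1 to 5 ratings can also be summarized in a few lines of code. A minimal sketch using only the standard library; the sample ratings are made up, and treating scores of 2 or below as "low" is an assumption.

```python
from collections import Counter
from statistics import mean


def summarize_ratings(ratings: list[int], low_cutoff: int = 2) -> dict:
    """Summarize 1-5 survey ratings: mean, distribution, and share of low scores."""
    if not ratings:
        raise ValueError("no ratings to summarize")
    distribution = Counter(ratings)
    return {
        "responses": len(ratings),
        "mean": round(mean(ratings), 2),
        # Include every scale point, even ones nobody chose.
        "distribution": {score: distribution[score] for score in range(1, 6)},
        "share_low": round(sum(r <= low_cutoff for r in ratings) / len(ratings), 2),
    }


summary = summarize_ratings([5, 4, 4, 2, 1, 5, 3, 4])
print(summary)
```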

Usage Analytics

Tools like Mixpanel or Hotjar track user actions as they happen, providing usage data that can be used to improve AI models.

By tracking specific metrics such as user engagement, session length, and conversion rates, you can understand how users behave.

For example, if you notice a high drop-off rate on a particular page, this signals potential issues with content or design.

Monitoring how features are used shows which ones are most popular, which helps prioritize later improvements.
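The drop-off rate mentioned above can be computed from step counts exported from an analytics tool. This sketch assumes you already have the number of users who reached each step of a funnel; the step names and numbers are hypothetical and do not follow any specific tool's export format.

```python
def funnel_dropoff(steps: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """For each step after the first, return the share of users lost since the previous step."""
    results = []
    for (prev_name, prev_users), (name, users) in zip(steps, steps[1:]):
        rate = 0.0 if prev_users == 0 else 1 - users / prev_users
        results.append((f"{prev_name} -> {name}", round(rate, 3)))
    return results


# Hypothetical funnel: users who reached each step.
funnel = [("landing", 1000), ("chat_opened", 600), ("answer_rated", 150)]
for transition, rate in funnel_dropoff(funnel):
    print(f"{transition}: {rate:.0%} drop-off")
# landing -> chat_opened: 40% drop-off
# chat_opened -> answer_rated: 75% drop-off
```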

Use A/B testing on the collected data to confirm that interface changes actually increase engagement and satisfaction.
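To check that a change really increased engagement rather than reflecting random noise, the conversion rates of the two variants can be compared with a two-proportion z-test. This is one standard way to analyze an A/B test, not the only one; the counts below are hypothetical, and the normal approximation assumes reasonably large samples.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both variants convert at the same rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF, written in terms of erf.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical results: variant A is the current interface, B the redesign.
z, p = two_proportion_z_test(conv_a=200, n_a=2000, conv_b=260, n_b=2000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below a threshold chosen in advance (0.05 is common) suggests the difference is unlikely to be chance alone; fixing the sample size before the test starts avoids inflating false positives by stopping early.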

Implementing Feedback for Improvement

Improving AI models involves repeatedly refining them using collected data.

Iterative Development Processes

When AI systems are refined consistently and carefully, performance often improves with each iteration; some sources cite gains of around 20% per cycle, though results vary.

To use user feedback effectively for model updates, start by setting up a structured way to collect it. Tools like Google Forms or Typeform can gather feedback after users interact with the AI.

Analyze this data to identify common issues and feature requests. Then rank the resulting tasks by criteria such as their impact on users and the effort required to implement them.
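Ranking tasks by impact and effort can be made explicit with a simple score. The formula below (mentions times impact, divided by effort) is a hypothetical scheme loosely in the spirit of prioritization frameworks such as RICE, not a standard; the backlog items and their ratings are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class FeedbackItem:
    title: str
    mentions: int  # how many users raised this issue
    impact: int    # estimated effect on users, 1 (low) to 5 (high)
    effort: int    # estimated implementation effort, 1 (easy) to 5 (hard)

    def score(self) -> float:
        # Hypothetical scoring: favor frequent, high-impact, low-effort items.
        return self.mentions * self.impact / self.effort


backlog = [
    FeedbackItem("Misunderstands refund questions", mentions=40, impact=4, effort=3),
    FeedbackItem("Add dark mode", mentions=25, impact=2, effort=1),
    FeedbackItem("Support Spanish", mentions=15, impact=5, effort=5),
]

for item in sorted(backlog, key=FeedbackItem.score, reverse=True):
    print(f"{item.score():6.1f}  {item.title}")
```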

Create a feedback loop by updating users on changes made based on their suggestions, reinforcing their engagement and trust in the development process. This method allows for organized improvements with a focus on user needs.

Feedback Loops in AI Training

By using real-time data, feedback loops in AI training can adjust models continuously; some reports cite accuracy gains of around 15%.

For instance, consider a chatbot designed for customer service. Initially, it may struggle to understand certain queries.

As users interact with it, unrecognized phrases are flagged for further training. By analyzing these interactions, the AI can learn to recognize variations of the same question, steadily improving its response accuracy. This iterative process improves the chatbot because it adapts to how users actually phrase their requests.

To set up this feedback process, log interactions and update the training datasets frequently so they keep pace with changing language and customer needs.
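The flag-label-retrain loop above can be sketched with a deliberately simple intent matcher: queries whose best match falls below a confidence threshold are flagged, a reviewer labels them, and the labeled phrases join the training examples. Token-overlap (Jaccard) matching stands in for a real language model here; the intents, threshold, and function names are hypothetical.

```python
import re
from typing import Optional

# Hypothetical training data: intent -> example phrases.
training = {
    "track_order": ["where is my order", "track my order"],
    "refund": ["i want a refund", "how do i get my money back"],
}
flagged: list[str] = []  # queries awaiting human review


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z']+", text.lower()))


def classify(query: str, threshold: float = 0.5) -> Optional[str]:
    """Return the best-matching intent, or flag the query if confidence is low."""
    q = tokens(query)
    best_intent, best_score = None, 0.0
    for intent, examples in training.items():
        for example in examples:
            e = tokens(example)
            union = q | e
            score = len(q & e) / len(union) if union else 0.0  # Jaccard similarity
            if score > best_score:
                best_intent, best_score = intent, score
    if best_score < threshold:
        flagged.append(query)
        return None
    return best_intent


def add_labeled_example(query: str, intent: str) -> None:
    """Feedback step: a reviewer labels a flagged query and it joins the training data."""
    training.setdefault(intent, []).append(query)
    if query in flagged:
        flagged.remove(query)


print(classify("Where's my parcel?"))  # None (flagged for review)
add_labeled_example("Where's my parcel?", "track_order")
print(classify("where's my parcel"))   # track_order
```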

Challenges in Feedback Implementation

Setting up systems to gather feedback is important, but challenges such as protecting private information and interpreting feedback correctly can slow implementation.

Data Privacy Concerns

Data privacy concerns are paramount: by some estimates, 60% of users hesitate to provide feedback because they fear their information could be misused.

To address these concerns, prioritize transparency by clearly communicating how feedback will be used.

Use tools like TrustArc or OneTrust to help comply with regulations such as GDPR.

Give users the option to opt out or to anonymize their feedback. Regularly review and update your privacy policy to reflect current practices, and consider using double opt-in for feedback requests.
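One way to support anonymization is to pseudonymize user identifiers and redact obvious personal data before feedback is stored. This is a minimal sketch, assuming a secret key kept outside the feedback database; pseudonymized data can still count as personal data under GDPR, and the simple regular expressions below will miss many forms of personal information. The function names are hypothetical.

```python
import hashlib
import hmac
import re

# Assumption: in production this key would come from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-secret-key"

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def pseudonymize(user_id: str) -> str:
    """Replace a user ID with a keyed hash so records can be linked but not trivially reversed."""
    return hmac.new(SECRET_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def redact(text: str) -> str:
    """Mask email addresses and phone-number-like digit runs in free-text feedback."""
    text = EMAIL_RE.sub("[email]", text)
    return PHONE_RE.sub("[phone]", text)


def prepare_feedback(user_id: str, comment: str, anonymous: bool) -> dict:
    record = {"comment": redact(comment)}
    # Users who choose anonymity get no identifier at all.
    if not anonymous:
        record["user"] = pseudonymize(user_id)
    return record


print(prepare_feedback("user-42", "Call me at +1 555 123 4567 or mail a@b.com", anonymous=False))
```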

This method builds trust, encouraging users to share their thoughts while following important data protection laws.

Interpreting Feedback Effectively

Interpreting feedback correctly is essential, yet some studies suggest that around 30% of businesses misinterpret it and act on it wrongly.

To avoid common pitfalls, start by categorizing feedback into themes or topics.

Use data visualization tools like Tableau or Microsoft Power BI to create charts that highlight trends. For example, if customers frequently mention difficulty navigating the website, focus on improving navigation.
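Categorizing open-ended feedback into themes before charting it can start with simple keyword rules. This sketch assumes a hand-written, hypothetical keyword map; teams often move on to topic modeling or a trained classifier, but counts like these are already in a form a BI tool can plot.

```python
from collections import Counter

# Hypothetical theme -> keywords mapping, maintained by the team.
THEMES = {
    "navigation": ["menu", "find", "navigate", "lost"],
    "speed": ["slow", "lag", "loading"],
    "accuracy": ["wrong", "incorrect", "irrelevant"],
}


def tag_themes(comment: str) -> list[str]:
    """Return every theme whose keywords appear in the comment, or ['other']."""
    words = [word.strip(".,!?") for word in comment.lower().split()]
    matched = [theme for theme, keywords in THEMES.items()
               if any(word in keywords for word in words)]
    return matched or ["other"]


def theme_counts(comments: list[str]) -> Counter:
    counts = Counter()
    for comment in comments:
        counts.update(tag_themes(comment))
    return counts


comments = [
    "I can't find the settings menu",
    "Answers are slow and sometimes wrong",
    "Got lost trying to navigate to billing",
    "Love it!",
]
print(theme_counts(comments).most_common())
```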

Hold regular team meetings to discuss feedback, so that different perspectives inform how it is interpreted.

Closing the loop by sharing updates with users shows that their suggestions are valued and encourages continued participation.

Future Trends in AI Feedback Systems

Upcoming advances in AI feedback systems are likely to include better AI-driven analysis of feedback and more personalized experiences.

Integration of AI in Feedback Analysis

Using AI to analyze feedback can reportedly speed up decision-making by up to 40%, allowing organizations to respond quickly to user needs.

For example, companies such as Zendesk and HubSpot use machine learning algorithms to quickly analyze customer feedback.

Zendesk’s AI-powered interface sorts through support tickets, identifying recurring issues and prioritizing them for quicker resolution.
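Zendesk's machine-learning triage is proprietary, so the sketch below substitutes a transparent rule-based stand-in to illustrate the idea of surfacing recurring and urgent issues first: each ticket's priority combines how often its issue recurs with an urgency flag. The issue labels, urgency keywords, and weighting are all hypothetical.

```python
import heapq
from collections import Counter

# Hypothetical words that mark a ticket as urgent.
URGENT_WORDS = {"outage", "down", "charged", "locked"}


def triage(tickets: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Order (issue, text) tickets so urgent and frequently recurring issues come first."""
    recurrence = Counter(issue for issue, _ in tickets)
    heap = []
    for position, (issue, text) in enumerate(tickets):
        words = {w.strip(".,!?") for w in text.lower().split()}
        urgent = bool(words & URGENT_WORDS)
        # Hypothetical weighting: urgency outweighs recurrence.
        priority = recurrence[issue] + (10 if urgent else 0)
        # heapq is a min-heap, so store the negated priority; position keeps ties in arrival order.
        heapq.heappush(heap, (-priority, position, issue, text))
    ordered = []
    while heap:
        _, _, issue, text = heapq.heappop(heap)
        ordered.append((issue, text))
    return ordered


tickets = [
    ("login", "Password reset email never arrives"),
    ("billing", "I was charged twice"),
    ("login", "Cannot log in on mobile"),
    ("login", "Login page keeps reloading"),
    ("export", "CSV export is missing a column"),
]
for issue, text in triage(tickets):
    print(f"{issue:8} {text}")
```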

HubSpot’s feedback tools collect information from user interactions, which can quickly inform customized marketing plans.

By using these technologies, businesses can improve how quickly they respond and learn more about what customers like, helping them offer better services and develop products more effectively.

Personalization and Adaptation

Using AI to tailor experiences to individual feedback can reportedly increase user engagement by more than 35%.

To personalize effectively, collect information through user surveys or feedback forms. Qualtrics supports sophisticated surveys, while Google Forms is a free option for basic data collection.

Use platforms like Segment to consolidate user data, allowing AI algorithms to learn patterns and preferences. Review feedback regularly and adjust content suggestions to match what users like, improving their experience.
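A minimal sketch of adapting content to individual feedback: each user's affinity for a content category is an exponential moving average of their thumbs-up (1) and thumbs-down (0) reactions, and candidate items are ranked by that affinity. The category names, the neutral starting value of 0.5, and the learning rate are assumptions for illustration, not how Segment or any particular platform works.

```python
from collections import defaultdict


class PreferenceProfile:
    """Hypothetical per-user category affinities updated from explicit feedback."""

    def __init__(self, learning_rate: float = 0.3, prior: float = 0.5) -> None:
        self.learning_rate = learning_rate
        # Unseen categories start at a neutral prior.
        self.affinity = defaultdict(lambda: prior)

    def update(self, category: str, liked: bool) -> None:
        # Exponential moving average: recent feedback counts more than older feedback.
        target = 1.0 if liked else 0.0
        current = self.affinity[category]
        self.affinity[category] = current + self.learning_rate * (target - current)

    def rank(self, items: list[tuple[str, str]]) -> list[str]:
        """Order (title, category) items by the user's affinity for each category."""
        ordered = sorted(items, key=lambda item: self.affinity[item[1]], reverse=True)
        return [title for title, _ in ordered]


profile = PreferenceProfile()
profile.update("tutorials", liked=True)
profile.update("news", liked=False)
print(profile.rank([("Weekly roundup", "news"), ("Getting started", "tutorials"), ("Case study", "stories")]))
```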

Frequently Asked Questions

What is the importance of feedback for AI systems?

Feedback is important for AI systems because it helps them learn and improve. Without feedback, AI systems would have no signal for learning from new information or correcting errors.

How does feedback help improve AI systems?

Feedback helps improve AI systems by giving them the information they need to update their algorithms and make better predictions. It helps them find and correct errors, leading to more accurate results over time.

What are some techniques for giving feedback to AI systems?

Techniques for giving feedback to AI systems include providing labeled data, using reinforcement learning, and adding human-in-the-loop review. These techniques allow for continuous learning and improvement of AI systems.

Why is continuous feedback important for AI systems?

Continuous feedback is important for AI systems because it helps them adapt to new situations and improve over time. It also helps keep bias and errors from accumulating in the system.

How can feedback be used to prevent bias in AI systems?

Feedback can help prevent bias in AI systems by ensuring diverse, representative training data, applying fairness metrics, and regularly re-evaluating and updating algorithms based on new feedback. This helps create more ethical and inclusive AI systems.
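Fairness metrics turn a bias check into a number that can be tracked as feedback arrives. One common example is the demographic parity difference: the gap between groups in the rate of positive predictions. A minimal sketch with hypothetical groups and predictions; which metric is appropriate depends on the application, and libraries such as Fairlearn provide tested implementations.

```python
from collections import defaultdict


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0 means parity)."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred  # predictions are 0 or 1
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(demographic_parity_difference(preds, groups))  # 0.5
```

Tracking this value after each model update shows whether feedback-driven retraining is narrowing or widening the gap between groups.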

Can user feedback be used to improve AI systems?

Yes, user feedback can be used to improve AI systems. Feedback from users can show how well the system works and what needs to be better. This can lead to more user-friendly and efficient AI systems.