Feedback for AI Systems: Importance and Improvement Techniques

In the fast-changing field of AI, effective feedback mechanisms are key to improving how well machine learning models perform. By learning from user interactions, for example through reinforcement learning, developers can refine algorithms so that these systems adapt and improve over time. This article explains why feedback matters in AI, how to evaluate whether it works, and how to improve the way users interact with these systems, leading to more intelligent and responsive AI.

Contents:

- 1 Types of Feedback Mechanisms

- 2 Importance of Feedback for AI Improvement

- 3 AI Feedback System and Bias Amplification Study

- 4 Techniques for Gathering Feedback

- 5 Implementing Feedback Loops

- 6 Challenges in Feedback Implementation

- 7 Frequently Asked Questions

- 7.1 What is the importance of feedback for AI systems?

- 7.2 How does feedback help improve AI systems?

- 7.3 What are some techniques for effectively providing feedback to AI systems?

- 7.4 Why is it important to continuously provide feedback to AI systems?

- 7.5 What are some challenges in providing feedback for AI systems?

- 7.6 How can AI systems use feedback to make better decisions?

Definition and Scope

In AI, feedback is the information and evaluations used to improve how models perform and make decisions.

This feedback can be categorized into two main types: supervised and unsupervised feedback.

In supervised feedback, developers provide correctly labeled data and detailed annotations that guide the model’s outputs. This approach is important for improving systems such as facial recognition, where labeled examples show the model which outputs are correct.

Unsupervised feedback, by contrast, lets the model learn patterns from data without explicit labels. Frameworks such as TensorFlow and PyTorch support both approaches, so teams can build feedback loops into their training pipelines.

By applying these strategies, organizations can make their operations considerably more efficient, because their AI models keep learning from updated data.

Significance of Feedback

Effective feedback systems are essential for improving AI performance, promoting ongoing progress, and increasing user satisfaction.

For example, Amazon examines customer reviews and buying habits to improve its recommendation system. The company actively solicits user feedback on product recommendations, ensuring that the AI learns from user interactions.

Companies like Google employ user feedback to tweak their search algorithms, enhancing relevance and accuracy. By regularly refreshing their models based on how users respond, these organizations offer a more user-friendly experience, leading to increased trust and loyalty.

Similar feedback systems can help businesses adapt their AI tools as customer needs evolve.
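As a rough illustration of how explicit feedback can steer recommendations, the sketch below re-ranks candidate items by a smoothed approval rate computed from thumbs-up and thumbs-down votes. The item IDs, the vote format, and the prior strength are hypothetical, and this is not a description of how Amazon or Google actually implement their systems.

```python
from dataclasses import dataclass


@dataclass
class Votes:
    up: int = 0
    down: int = 0


def smoothed_approval(v: Votes, prior_mean: float = 0.5, prior_weight: float = 10.0) -> float:
    """Approval rate shrunk toward prior_mean so items with few votes are not over-ranked.

    This is the posterior mean of a Beta(prior_mean * prior_weight,
    (1 - prior_mean) * prior_weight) prior updated with the observed votes.
    """
    return (v.up + prior_mean * prior_weight) / (v.up + v.down + prior_weight)


def rerank(votes: dict[str, Votes]) -> list[str]:
    """Return item IDs ordered from most to least approved."""
    return sorted(votes, key=lambda item: smoothed_approval(votes[item]), reverse=True)


feedback = {
    "item-a": Votes(up=3, down=0),    # few votes, all positive
    "item-b": Votes(up=80, down=20),  # many votes, mostly positive
    "item-c": Votes(up=5, down=15),   # mostly negative
}
print(rerank(feedback))  # ['item-b', 'item-a', 'item-c']
```

Without smoothing, item-a's raw 100% approval would rank it first on only three votes; the prior keeps it below item-b until more feedback arrives.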

Types of Feedback Mechanisms

Feedback can be divided into two main types: feedback from people and feedback from machines, each playing a different part in developing AI.

Human Feedback

Human feedback draws on people’s interactions and experiences to make AI models more accurate and useful.

To gather this feedback effectively, use platforms like Label Studio, which allows you to create customizable feedback forms and visualize the data.

After users have tried your AI, survey them on how helpful and relevant they found it. These insights help you refine how you collect user input.

Use direct interactions such as interviews or focus groups to gather qualitative data. These methods strengthen the feedback process and ground model improvements in real user experiences, producing more tailored results.

Automated Feedback

Automated feedback applies data analysis and performance metrics to continuously update AI systems.

These systems enable real-time tracking and targeted adjustments.

For example, tools like Google Analytics monitor how users engage, showing which content connects best with viewers. By looking at data like bounce rates and how long users stay engaged, AI systems can change their algorithms to promote content that performs well.
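To make this concrete, here is a minimal sketch that computes bounce rate and average session duration per content item from raw session records, then ranks items by engagement. The record format, the simplified single-page definition of a bounce, and the ranking rule (lowest bounce rate first, ties broken by longer sessions) are assumptions for illustration; Google Analytics uses its own definitions, which differ between versions.

```python
from collections import defaultdict
from typing import NamedTuple


class Session(NamedTuple):
    content_id: str    # content the session landed on
    pages_viewed: int  # pages viewed in the session
    seconds: float     # session duration


def engagement_report(sessions: list[Session]) -> dict[str, dict[str, float]]:
    """Aggregate bounce rate (single-page sessions) and mean duration per content item."""
    grouped: dict[str, list[Session]] = defaultdict(list)
    for s in sessions:
        grouped[s.content_id].append(s)
    report = {}
    for cid, items in grouped.items():
        bounces = sum(1 for s in items if s.pages_viewed == 1)
        report[cid] = {
            "bounce_rate": bounces / len(items),
            "avg_seconds": sum(s.seconds for s in items) / len(items),
        }
    return report


def rank_content(report: dict[str, dict[str, float]]) -> list[str]:
    """Lowest bounce rate first; longer average sessions break ties."""
    return sorted(report, key=lambda c: (report[c]["bounce_rate"], -report[c]["avg_seconds"]))


sessions = [
    Session("guide", 3, 240.0), Session("guide", 1, 30.0),
    Session("news", 1, 10.0), Session("news", 1, 15.0),
    Session("faq", 2, 90.0), Session("faq", 4, 200.0),
]
report = engagement_report(sessions)
print(rank_content(report))  # ['faq', 'guide', 'news']
```

A ranking like this could feed back into which content the system promotes.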

Implementing tools like Hotjar can provide heatmaps, showing where users engage the most. This data is important for improving content plans and making sure AI systems work well and stay helpful as technology changes.

Importance of Feedback for AI Improvement

Feedback is essential for improving AI: it raises model performance and, when collected and applied carefully, helps reduce bias in models.

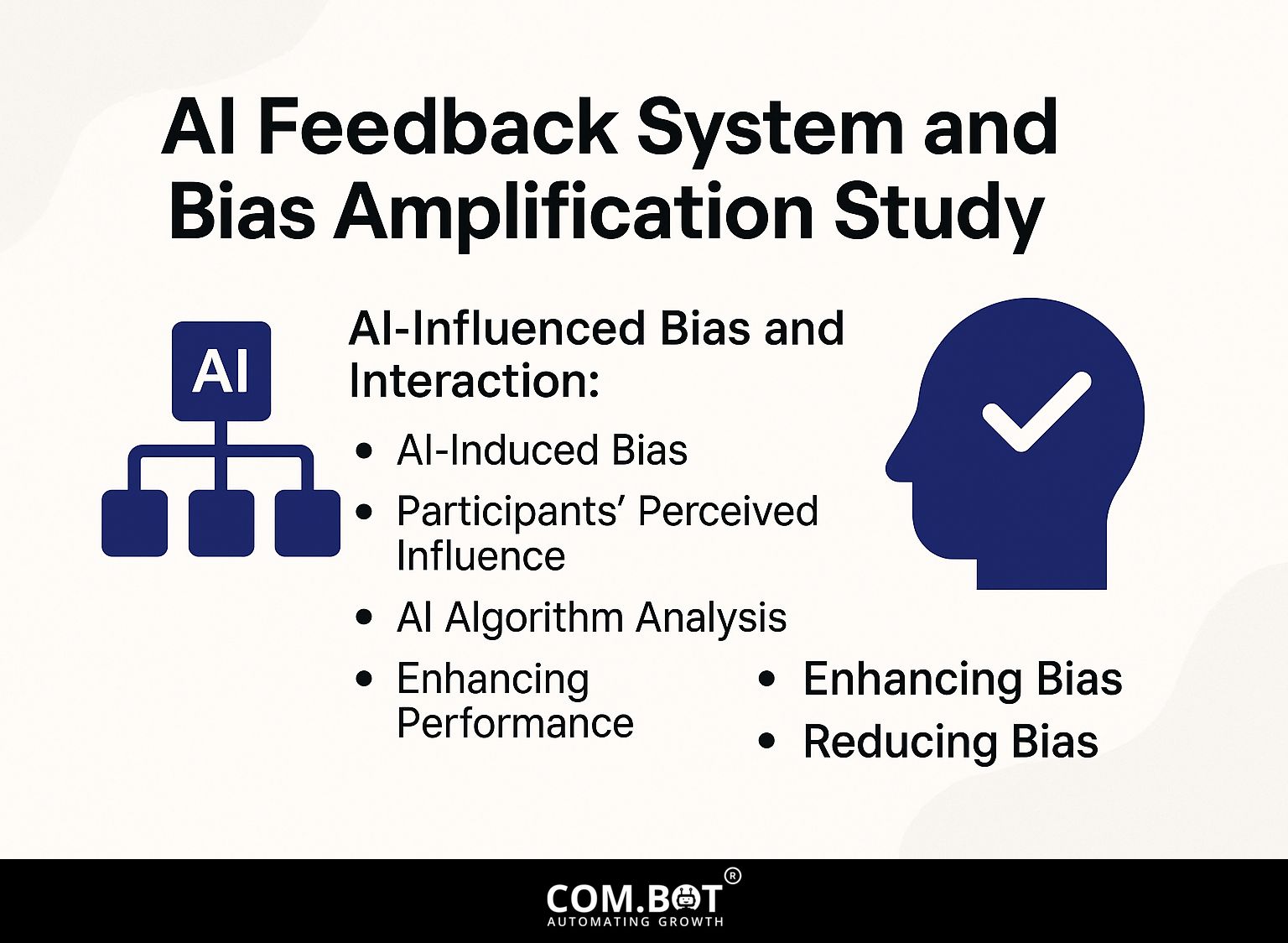

AI Feedback System and Bias Amplification Study

(Infographic panels, under "AI-Influenced Bias and Interaction": AI-Induced Bias; Participants’ Perceived Influence; AI Algorithm Analysis)

The AI Feedback System and Bias Amplification Study shows in detail how AI systems can influence, and even amplify, human biases during interaction. This matters for understanding the ethical issues of using AI in sensitive tasks such as emotion recognition.

The AI-Influenced Bias and Interaction data show that the initial human bias in an emotion-aggregation task was 53.08%, meaning participants leaned toward judging faces as sad somewhat more often than the 50% an unbiased judge would give. When an AI system sorts the same emotions, the bias grows to 65.33%: AI can reproduce existing biases and make them worse. After interacting with the AI, participants’ own bias rose to 56.3% as they classified emotions as sad more often, a direct influence of the AI’s bias on human perception.

- When the AI disagreed with participants, they changed their response 32.72% of the time; when it agreed, they changed it only 0.3% of the time. Disagreement is therefore how the AI pulls human judgments toward its own answers, while agreement leaves existing judgments, and the biases in them, in place.

The Participants’ Perceived Influence section adds more detail. Bias reached 56.3% after interaction with the AI, compared with 49.9% without AI interaction and 51.45% after interaction with other humans alone. AI thus amplified bias beyond the levels seen in human-only interaction.

The AI Algorithm Analysis covers the technical side of this bias. The CNN reaches a high baseline accuracy of 96.0% on non-biased data. Trained on biased human data, however, its accuracy falls to 65.33%, the same figure reported above for AI-amplified bias, with an amplification rate of 12.33%. AI systems that perform well under controlled conditions can therefore struggle with, and even exacerbate, the biases in their training data.
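The percentages reported in this section can be compared directly. The short script below takes the figures as stated and computes percentage-point gaps between them, plus how far each bias level sits from 50%, which is assumed here to be the unbiased reference for a two-choice sad/happy judgment. The study’s 12.33% amplification rate may be defined differently from the simple AI-minus-human difference computed here.

```python
# Bias levels (%) as reported in this section.
HUMAN_BASELINE = 53.08  # initial human bias in the emotion-aggregation task
AI_BIAS = 65.33         # bias of the AI system on the same task
AFTER_AI = 56.3         # bias reported after AI interaction
WITHOUT_AI = 49.9       # bias reported without AI interaction
AFTER_HUMANS = 51.45    # bias reported after human-only interaction
CHANCE = 50.0           # assumption: an unbiased judge picks "sad" half the time


def gap(a: float, b: float) -> float:
    """Percentage-point difference a - b, rounded to two decimals."""
    return round(a - b, 2)


ai_over_human = gap(AI_BIAS, HUMAN_BASELINE)           # how far the AI exceeds human bias
ai_interaction_shift = gap(AFTER_AI, WITHOUT_AI)       # with vs without AI interaction
ai_vs_human_interaction = gap(AFTER_AI, AFTER_HUMANS)  # AI vs human-only interaction

# How many times further from the unbiased 50% the AI sits than the human baseline.
excess_ratio = round(gap(AI_BIAS, CHANCE) / gap(HUMAN_BASELINE, CHANCE), 2)

print(ai_over_human, ai_interaction_shift, ai_vs_human_interaction, excess_ratio)
# 12.25 6.4 4.85 4.98
```

Under the 50% assumption, the AI’s deviation from an unbiased judgment is roughly five times the human baseline’s, which is what "amplification" means in practice.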

In short, this study highlights the importance of addressing and reducing bias in AI systems, especially in areas where human judgment and AI work together. Bias amplification by AI can have far-reaching consequences, necessitating strategies to minimize such effects and promote fairness and accuracy in AI applications.

Enhancing Performance

Using feedback can make AI work better and often speed up operations.

For instance, organizations implementing predictive monitoring systems can assess how AI models react to real-time data.

Tools like Amazon SageMaker provide built-in features for continuous monitoring, allowing teams to regularly evaluate algorithm performance. By setting up alerts based on performance metrics, businesses can quickly spot and fix problems, leading to faster updates in AI decision-making.
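The alerting idea can be sketched without any particular platform. The class below keeps a rolling window of prediction outcomes and flags an alert when windowed accuracy drops below a threshold. The class, window size, threshold, and simulated labels are hypothetical illustrations, not Amazon SageMaker’s API; SageMaker Model Monitor provides its own managed model-quality monitoring.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent `window` predictions and flags drops."""

    def __init__(self, window: int = 100, threshold: float = 0.9, min_samples: int = 20):
        self.outcomes: deque[bool] = deque(maxlen=window)  # True = prediction was correct
        self.threshold = threshold
        self.min_samples = min_samples  # avoid alerting on too little data

    def record(self, prediction, actual) -> None:
        """Store whether a prediction matched the ground truth from feedback."""
        self.outcomes.append(prediction == actual)

    def accuracy(self) -> float:
        """Rolling accuracy; 1.0 when nothing has been recorded yet."""
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0

    def should_alert(self) -> bool:
        return len(self.outcomes) >= self.min_samples and self.accuracy() < self.threshold


monitor = AccuracyMonitor(window=50, threshold=0.8, min_samples=10)
for i in range(40):
    # Simulated feedback: the model is right for the first 30 samples, then wrong.
    monitor.record(prediction="spam", actual="spam" if i < 30 else "ham")
    if monitor.should_alert():
        print(f"ALERT at sample {i}: rolling accuracy {monitor.accuracy():.2f}")
        break
```

Here the alert fires at sample 37, when 30 correct out of 38 drops the rolling accuracy to about 0.79.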

Think about connecting these systems with data visualization tools like Tableau or Power BI to better understand feedback patterns and make quick decisions.

Reducing Bias

Regular, well-designed feedback can substantially reduce bias in AI models and support their ethical use in deployment.

To make your system fair, use training data that includes a wide range of people and viewpoints. For instance, when training a language model, include texts from multiple cultures and socioeconomic backgrounds.

Use surveys or focus groups to collect feedback on how well the model works and on any biases people notice. Review this feedback often to find recurring themes, and improve the model iteratively.

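Audits such as the Gender Shades project, which compared the accuracy of commercial facial-analysis systems across gender and skin-type subgroups, show how to measure bias in AI outputs and can guide further adjustments to your model. Applying these methods helps you build fairer AI systems.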
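A subgroup audit in the spirit of Gender Shades can be sketched in a few lines: compute accuracy separately for each demographic group and report the largest gap. The record layout, group names, and labels are hypothetical; a real audit also needs enough samples per group for the gaps to be meaningful.

```python
from collections import defaultdict


def accuracy_by_group(records: list[tuple[str, str, str]]) -> dict[str, float]:
    """records: (group, predicted_label, true_label). Returns accuracy per group."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += predicted == actual  # bool counts as 0 or 1
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group: dict[str, float]) -> float:
    """Difference between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())


records = [
    ("group_a", "match", "match"), ("group_a", "match", "match"),
    ("group_a", "match", "match"), ("group_a", "no_match", "match"),
    ("group_b", "match", "match"), ("group_b", "no_match", "match"),
    ("group_b", "no_match", "match"), ("group_b", "match", "match"),
]
per_group = accuracy_by_group(records)
print(per_group, round(max_accuracy_gap(per_group), 2))
# {'group_a': 0.75, 'group_b': 0.5} 0.25
```

Tracking this gap before and after each model update shows whether feedback-driven changes are making the system fairer or not.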

Techniques for Gathering Feedback

You can collect feedback in different ways, such as through user surveys and watching how users interact, to help improve AI systems.

User Surveys and Interviews

User surveys and interviews gather detailed feedback directly from users and give insight into their experiences.

To design effective user surveys, start by defining your objectives clearly. Combine open-ended questions, which yield detailed responses, with closed-ended questions for quantitative analysis.

For instance, ask ‘What features do you find most useful?’ alongside a 1-to-5 rating of overall satisfaction.

Use tools like SurveyMonkey or Google Forms to create and distribute your survey. Once responses are in, group the open-ended feedback into themes and count how often each theme appears. This structured approach turns raw responses into information that can lead to better products.
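The analysis step can start very simply. This sketch averages the 1-to-5 satisfaction scores and tags open-ended answers with themes by keyword matching. The theme names and keywords are hypothetical placeholders, and substring matching is a crude stand-in for manual coding or a proper text classifier (for example, "lag" would also match "flag").

```python
from collections import Counter
from statistics import mean

# Hypothetical theme -> keywords mapping; adjust to your product's vocabulary.
THEMES = {
    "speed": ("slow", "fast", "lag"),
    "accuracy": ("wrong", "accurate", "mistake"),
    "usability": ("confusing", "easy", "interface"),
}


def tag_themes(answer: str) -> set[str]:
    """Return every theme whose keywords appear in the answer (case-insensitive)."""
    text = answer.lower()
    return {theme for theme, words in THEMES.items() if any(w in text for w in words)}


def summarize(responses: list[tuple[int, str]]) -> tuple[float, Counter]:
    """responses: (satisfaction score 1-5, open-ended answer)."""
    scores = [score for score, _ in responses]
    theme_counts = Counter(t for _, answer in responses for t in tag_themes(answer))
    return mean(scores), theme_counts


responses = [
    (4, "Easy to use, but sometimes slow."),
    (2, "Answers are often wrong."),
    (5, "Fast and accurate."),
    (3, "Noticeable lag on long questions."),
]
avg, themes = summarize(responses)
print(avg, themes.most_common())
# 3.5 [('speed', 3), ('accuracy', 2), ('usability', 1)]
```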

Monitoring User Interactions

Watching how users interact helps gather immediate feedback, offering useful information about how users behave and how the system works.

Tools like Hotjar and Google Analytics enable detailed tracking of user actions. Hotjar offers heatmaps to visualize where users click, scroll, and hover, which helps identify popular areas and potential issues on your site.

Session recordings show how individual users move through your site, making their actions easier to understand. To make the most of this data, run A/B tests on page variants designed from heatmap insights, and keep improving the user experience based on the results.
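Deciding whether a variant really improved the user experience is a statistical question. A common choice for comparing two conversion rates is a two-proportion z-test, sketched below with only the standard library. The visitor and conversion counts are made up, and the 0.05 significance level is a conventional assumption rather than a requirement.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both variants share one conversion rate.

    Assumes the pooled rate is strictly between 0 and 1 (otherwise the standard error is 0).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate under the null hypothesis
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function: Phi(x) = (1 + erf(x / sqrt(2))) / 2
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - phi)
    return z, p_value


# Variant A: original layout; variant B: layout redesigned from heatmap insights.
z, p = two_proportion_z_test(conv_a=120, n_a=2400, conv_b=165, n_b=2400)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 0.05: {p < 0.05}")
```

With these made-up numbers, B converts at 6.9% versus 5.0% for A, and the test indicates the difference is unlikely to be noise.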

Review this process every month and improve your strategies using user feedback.

Implementing Feedback Loops

Setting up feedback loops is important for ongoing learning in AI systems and improving their ability to adjust.

Continuous Learning Models

Continuous learning models depend on regular feedback to improve AI performance in changing settings.

Businesses such as Google and Netflix use continuous learning techniques to make user interactions better and keep their systems running smoothly.

For example, Google’s search systems frequently change based on how people use them, which helps provide more useful results. Similarly, Netflix uses these models to analyze viewing patterns and adjust its recommendation engine in real time.

Tools such as TensorFlow and Amazon SageMaker help teams retrain and deploy updated models quickly, keeping content personalized and engaging. This feedback-driven approach significantly improves system effectiveness and user satisfaction.
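At its core, a continuous learning loop updates a model incrementally as each new piece of labeled feedback arrives, instead of retraining from scratch. The sketch below does this with online logistic regression trained by stochastic gradient descent in plain Python. The features, learning rate, and feedback stream are illustrative assumptions; production pipelines typically add validation and staged rollout before an updated model replaces the old one.

```python
from math import exp


class OnlineLogisticRegression:
    """Binary classifier updated one feedback example at a time (SGD on log loss)."""

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.lr = learning_rate

    def predict_proba(self, x: list[float]) -> float:
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return 1.0 / (1.0 + exp(-z))

    def update(self, x: list[float], label: int) -> None:
        """Apply one gradient step using a user-provided label (1 = liked, 0 = not)."""
        error = self.predict_proba(x) - label  # gradient of log loss w.r.t. z
        self.weights = [w - self.lr * error * xi for w, xi in zip(self.weights, x)]
        self.bias -= self.lr * error


# Hypothetical features for a recommended title: [matches_favorite_genre, is_new_release]
model = OnlineLogisticRegression(n_features=2, learning_rate=0.5)
stream = [([1.0, 0.0], 1), ([0.0, 1.0], 0), ([1.0, 1.0], 1), ([0.0, 0.0], 0)] * 25

before = model.predict_proba([1.0, 0.0])
for features, liked in stream:  # each item is feedback arriving over time
    model.update(features, liked)
after = model.predict_proba([1.0, 0.0])
print(f"P(like | genre match) before: {before:.2f}, after: {after:.2f}")
```

Because each update touches only one example, the model can keep adapting between full retraining runs.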

Feedback Integration Strategies

Integrating feedback effectively depends on handling the data properly and on improving the user experience.

One useful approach involves using APIs to include live feedback in your AI processes. For instance, integrating the Google Cloud Natural Language API can analyze user comments and categorize sentiment instantly.
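The categorization step can be kept independent of any particular provider. The sketch below buckets comments by a sentiment score in the range -1.0 to 1.0, the range the Google Cloud Natural Language API uses for its document sentiment score. The ±0.25 threshold and the `score_fn` parameter are assumptions: in production `score_fn` would wrap a call to the API, while here a hypothetical keyword scorer stands in so the example runs offline.

```python
from typing import Callable


def categorize(comments: list[str], score_fn: Callable[[str], float],
               threshold: float = 0.25) -> dict[str, list[str]]:
    """Group comments into positive / neutral / negative using a score in [-1.0, 1.0]."""
    buckets: dict[str, list[str]] = {"positive": [], "neutral": [], "negative": []}
    for comment in comments:
        score = score_fn(comment)
        if score >= threshold:
            buckets["positive"].append(comment)
        elif score <= -threshold:
            buckets["negative"].append(comment)
        else:
            buckets["neutral"].append(comment)
    return buckets


def toy_score(text: str) -> float:
    """Offline stand-in for a real sentiment service (hypothetical word lists)."""
    words = [w.strip(".,!") for w in text.lower().split()]
    pos = sum(w in {"great", "helpful", "love"} for w in words)
    neg = sum(w in {"bad", "useless", "slow"} for w in words)
    total = pos + neg
    return 0.0 if total == 0 else (pos - neg) / total


comments = ["Great answers, very helpful!", "The app is slow.", "It works."]
print(categorize(comments, toy_score))
```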

Run A/B tests by randomly showing users one of two versions of a feedback request and measuring which one gets more responses. Tools like Optimizely make this simple to set up.

Check feedback data each month with Excel or Google Sheets to track changes over time and make your AI algorithms better based on user suggestions.
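When the feedback log outgrows a spreadsheet, the same monthly roll-up takes a few lines of code. This sketch groups hypothetical (date, rating) records by calendar month and reports the count and average rating, which is enough to spot a trend.

```python
from collections import defaultdict
from datetime import date


def monthly_summary(records: list[tuple[date, int]]) -> dict[str, tuple[int, float]]:
    """Map 'YYYY-MM' -> (number of ratings, average rating), in chronological order."""
    by_month: dict[str, list[int]] = defaultdict(list)
    for day, rating in records:
        by_month[day.strftime("%Y-%m")].append(rating)
    return {
        month: (len(r), round(sum(r) / len(r), 2))
        for month, r in sorted(by_month.items())
    }


records = [
    (date(2024, 1, 5), 3), (date(2024, 1, 20), 4),
    (date(2024, 2, 2), 4), (date(2024, 2, 14), 5), (date(2024, 2, 28), 4),
]
for month, (count, avg) in monthly_summary(records).items():
    print(month, count, avg)
# 2024-01 2 3.5
# 2024-02 3 4.33
```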

Challenges in Feedback Implementation

Implementing feedback systems in AI raises difficulties such as safeguarding data privacy and interpreting the feedback accurately.

Data Privacy Concerns

Data privacy concerns are paramount when gathering user feedback, necessitating stringent ethical considerations in AI development.

To comply with regulations such as the GDPR when collecting feedback, follow these steps:

- First, always obtain explicit consent from users before collecting any data.

- Publish clear privacy policies that explain how their feedback will be used.

- Use anonymization or pseudonymization tools to remove or mask personal identifiers before processing feedback.

- Regularly review your data-collection practices to stay compliant and transparent.
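Two of these steps, honoring consent and masking identifiers, can be enforced in the processing code itself. The sketch below drops feedback from users who did not consent and replaces user IDs with a keyed HMAC-SHA256 digest, so records from the same user can still be linked without exposing the raw ID. The record fields and key handling are assumptions. Under the GDPR, pseudonymized data generally still counts as personal data, so this lowers risk rather than removing obligations.

```python
import hashlib
import hmac
import os
from dataclasses import dataclass


@dataclass
class Feedback:
    user_id: str
    consented: bool
    text: str


def pseudonymize(user_id: str, key: bytes) -> str:
    """Stable token per user; without the key the raw ID cannot be recovered from it."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_analysis(items: list[Feedback], key: bytes) -> list[dict[str, str]]:
    """Keep only consented feedback and replace the raw user ID with a token."""
    return [
        {"user": pseudonymize(f.user_id, key), "text": f.text}
        for f in items
        if f.consented
    ]


# In practice the key would come from a secrets manager; here it is random per run.
key = os.urandom(32)
items = [
    Feedback("alice@example.com", True, "Helpful answers."),
    Feedback("bob@example.com", False, "Too slow."),
]
for row in prepare_for_analysis(items, key):
    print(row["user"][:12], row["text"])
```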

By prioritizing these steps, organizations can build trust and avoid costly legal ramifications.

Interpreting Feedback Effectively

Interpreting feedback correctly is essential for making informed decisions about AI systems and assessing their performance accurately.

To analyze feedback data correctly, start by defining specific performance measures, such as accuracy, true positive rate (recall), and user satisfaction.

Use tools like Tableau to build dashboards that highlight patterns and trends in real time.

Text-analysis tools such as MonkeyLearn can classify the sentiment of written feedback, helping you understand how users feel.

Regularly check this data and update your AI models based on the results to keep improving performance numbers.
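As a starting point for the performance measures named in this section, the sketch below computes accuracy and true positive rate (recall) from predicted and actual labels, plus average satisfaction from 1-to-5 ratings. The data is hypothetical, and treating label 1 as the "positive" class is an assumption you would set per use case.

```python
from statistics import mean


def accuracy(predicted: list[int], actual: list[int]) -> float:
    """Share of predictions that match the true label."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def true_positive_rate(predicted: list[int], actual: list[int], positive: int = 1) -> float:
    """Recall: of the truly positive cases, the share the model flagged as positive."""
    flagged = [p for p, a in zip(predicted, actual) if a == positive]
    return sum(p == positive for p in flagged) / len(flagged) if flagged else 0.0


predicted = [1, 0, 1, 1, 0, 0, 1, 0]
actual = [1, 0, 0, 1, 1, 1, 1, 0]
satisfaction = [4, 5, 3, 4, 2]

print(f"accuracy={accuracy(predicted, actual):.3f}",
      f"TPR={true_positive_rate(predicted, actual):.3f}",
      f"satisfaction={mean(satisfaction):.2f}")
# accuracy=0.625 TPR=0.600 satisfaction=3.60
```

Recomputing these numbers after each model update gives the regular check described above a concrete baseline to compare against.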

Frequently Asked Questions

What is the importance of feedback for AI systems?

Feedback is essential for AI systems because it helps them learn and improve over time. Without it, a system has no signal for correcting its mistakes or adapting to new conditions.

How does feedback help improve AI systems?

Feedback assists AI systems in finding and fixing mistakes, improving their algorithms, and making their predictions more accurate. This results in better and faster performance.

What are some techniques for effectively providing feedback to AI systems?

Some techniques for providing effective feedback to AI systems include providing specific and actionable feedback, using a variety of data sources, and incorporating human input into the learning process.

Why is it important to continuously provide feedback to AI systems?

Regular feedback is necessary because data and user behavior change over time, so a model’s performance can drift. Without continuous feedback, AI systems may become outdated and less effective.

What are some challenges in providing feedback for AI systems?

Common challenges include biased data, insufficient data, and difficulty explaining and understanding the decisions the system makes.

How can AI systems use feedback to make better decisions?

AI systems can improve their decision-making by analyzing feedback. They identify patterns and trends in feedback, look for ways to improve, and frequently update their algorithms to make more accurate and informed choices.