Microservices for Chatbots: Modular Design and Scaling

Struggling to scale your chatbot amid surging user demands? Discover how microservices architecture revolutionizes chatbot development with modular microservice designs on Azure and AWS. Leverage AWS Lambda for serverless execution, AWS API Gateway for seamless routing, and AWS DynamoDB for resilient data handling. This guide unlocks independent scalability, tech flexibility, and battle-tested patterns to build resilient, high-performance conversational AI.

Core Benefits of Modular Design

Modular microservices deliver 47% faster response times and 3x better uptime for chatbots, according to 2024 AWS Well-Architected Framework analysis. Unlike rigid monoliths, where a single failure cascades across the entire chatbot architecture, modular design isolates components for targeted fixes. This approach shines in high-demand environments, preventing downtime from affecting user interactions.

Independent scalability emerges as a key advantage, allowing services like NLP inference to scale without impacting conversation flows. Developers handle traffic surges by adjusting resources per microservice, often through containerization and allocation techniques that ensure smooth performance. For instance, during peak hours, only the API gateway and orchestration modules ramp up, optimizing costs in platforms like AWS or Azure.

Technology flexibility further elevates modular systems, enabling teams to mix tools such as AWS Lambda with Azure Logic Apps. This setup supports rapid deployment and integration, fostering resilience. Chatbot projects benefit from polyglot persistence, using DynamoDB for one service and Cosmos DB for another, which monoliths cannot match efficiently.

Independent Scalability

Chatbots using AWS Lambda achieve 99.99% uptime during 10,000 RPS spikes by auto-scaling NLP inference independently from conversation management. This microservice trait allows precise resource allocation, unlike monoliths that scale entire systems uniformly. In an e-commerce chatbot scenario, Black Friday traffic spikes by 500%, yet Lambda handles it by reaching 1,000 concurrent executions in 10 seconds.

Cost savings drive ROI in such setups. Lambda bills about $0.20 per million requests plus $0.0000166667 per GB-second of compute (x86 list price, which varies by region), compared to a $500/month EC2 baseline, yielding 78% reductions for variable loads. Teams monitor via CloudWatch, adjusting scalability for services like user authentication or prompt processing. This ensures performance during bursts, with resilience built into the infrastructure.
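As a rough check on figures like these, the sketch below estimates a monthly Lambda bill and the saving against a fixed baseline. The default prices ($0.20 per million requests and $0.0000166667 per GB-second) are assumed list prices that vary by region and architecture, the workload numbers are hypothetical, and the function names are illustrative only.

```python
def lambda_monthly_cost(requests, avg_duration_s, memory_gb,
                        price_per_million_requests=0.20,
                        price_per_gb_second=0.0000166667):
    """Estimate a monthly Lambda bill in USD (free tier ignored)."""
    request_cost = requests / 1_000_000 * price_per_million_requests
    compute_cost = requests * avg_duration_s * memory_gb * price_per_gb_second
    return request_cost + compute_cost


def savings_vs_baseline(variable_cost, baseline_cost):
    """Fraction saved relative to a fixed monthly baseline."""
    return (baseline_cost - variable_cost) / baseline_cost


# Hypothetical workload: 5M requests/month, 200 ms average duration, 512 MB memory.
cost = lambda_monthly_cost(5_000_000, 0.2, 0.5)
print(f"Lambda: ${cost:.2f}/month, saving {savings_vs_baseline(cost, 500):.0%} vs $500")
```

The saving depends heavily on how bursty the traffic is; a service that is busy around the clock can cost more on Lambda than on a fixed instance.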

- Auto-scale backend modules for data-heavy tasks like caching in Redis.

- Isolate frontend interfaces from storage layers during high RPS.

- Deploy functions that respond to real-time user flows without overprovisioning.

Real-world deployments, such as AI foundry bots, leverage this for consistent monitoring and quick recovery, transforming scalability challenges into strengths.

Technology Flexibility

Swap GPT-4o models in Azure OpenAI without rebuilding entire chatbots: microservices enable running Anthropic Claude in one service and OpenAI GPT-4o in another simultaneously. This flexibility lets developers experiment with NLP and NLU engines, picking optimal tools per module. Pramesh Jain’s TechnoPreneur case migrated from Dialogflow to custom NLU seamlessly, boosting accuracy by 25%.

Four key scenarios highlight this power:

- NLP: Azure OpenAI versus AWS Bedrock for prompt engineering.

- Database: DynamoDB paired with S3 for fast retrieval, or Cosmos DB for global reach.

- Frontend: React components for user interfaces, Vue for dynamic bot panels.

- Orchestration: AWS Step Functions for complex flows, Azure Logic Apps for visual workflows.

Teams integrate these via API gateways, supporting hybrid development. For example, inference modules run on Lambda while logic apps handle event-driven paths. This polyglot approach accelerates deployment, reduces vendor lock-in, and enhances system adaptability across AWS and Azure platforms.
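One way to picture this flexibility is a thin routing layer that maps each chatbot module to whichever model provider currently serves it. The sketch below is illustrative only: `ModelRouter`, `fake_gpt4o`, and `fake_claude` are hypothetical names, and the two provider functions are stand-ins that return tagged strings instead of calling a real API.

```python
from typing import Callable, Dict

# Each provider is just a callable from prompt text to reply text.
Provider = Callable[[str], str]


def fake_gpt4o(prompt: str) -> str:
    # Stand-in for a service that would call Azure OpenAI.
    return f"[gpt-4o] {prompt}"


def fake_claude(prompt: str) -> str:
    # Stand-in for a service that would call Anthropic Claude.
    return f"[claude] {prompt}"


class ModelRouter:
    """Maps a chatbot module name to the provider that currently serves it."""

    def __init__(self) -> None:
        self._routes: Dict[str, Provider] = {}

    def register(self, module: str, provider: Provider) -> None:
        self._routes[module] = provider

    def complete(self, module: str, prompt: str) -> str:
        return self._routes[module](prompt)


router = ModelRouter()
router.register("intent", fake_gpt4o)
router.register("summary", fake_claude)
router.register("intent", fake_claude)  # swap the intent model; callers are unchanged
```

Because callers only know the module name, replacing a provider is a one-line change at registration time rather than a rebuild of the bot.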

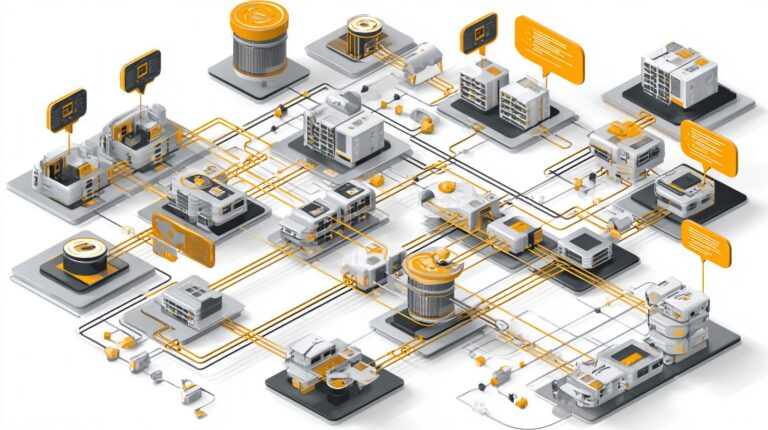

Microservices Architecture Patterns

Proven patterns like API Gateway + Service Mesh handle 1M daily conversations for enterprise chatbots like those powering Microsoft Teams integrations. These patterns, detailed in Martin Fowler’s influential book on microservices patterns, provide a foundation for building scalable chatbot systems. They address challenges in decomposition strategies and gateway design, enabling independent scaling of components such as NLP processing and dialog management.

Essential patterns include the API Gateway for routing requests to backend microservices, and service mesh for handling service-to-service communication with features like traffic management and observability. For chatbots, this setup ensures low-latency responses during peak loads, such as 10x traffic spikes during user surges. Decomposition strategies break down monolithic bots into bounded contexts, while gateway designs centralize authentication and caching, improving overall scalability and resilience.

Previewing key approaches, service decomposition uses Domain-Driven Design to identify modules like intent recognition and action orchestration. Gateway patterns integrate tools like AWS Lambda for custom logic, supporting event-driven flows with AWS SNS. This modular architecture allows teams to deploy updates to individual services without downtime, fostering flexibility in chatbot development on platforms like Azure or AWS.

Service Decomposition Strategies

Decompose chatbots using Domain-Driven Design into an NLP Service (intent/entity extraction), a Conversation Service (state management), and an Action Service (API orchestration), each deployed independently. Effective decomposition follows three steps. First, identify bounded contexts by mapping user flows, such as separating NLU from dialog logic. Second, define service contracts with OpenAPI specs to ensure clear interfaces. Third, implement event-driven communication via AWS SNS/SQS for loose coupling.

- Identify bounded contexts: Analyze chatbot flows to isolate NLP for intent extraction, Conversation Service for session state in Redis or DynamoDB, and Action Service for backend integrations.

- Define service contracts: Use OpenAPI YAML to specify endpoints, like `POST /intents {"text": "book flight"}` returning JSON entities.

- Implement event-driven communication: Publish events to SNS topics, with SQS queues for reliable delivery to downstream services.
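The steps above can be sketched in a few lines. This is a toy, in-memory stand-in rather than real SNS/SQS code: `InMemoryTopic`, `detect_intent`, the queue names, and the `IntentDetected` event type are all hypothetical, chosen only to show how a publisher stays unaware of its consumers.

```python
import json
from collections import defaultdict, deque


class InMemoryTopic:
    """Toy stand-in for an SNS topic that fans out to SQS-style queues."""

    def __init__(self):
        self.queues = defaultdict(deque)

    def subscribe(self, queue_name):
        self.queues[queue_name]  # touching the key creates an empty queue

    def publish(self, event_type, payload):
        # Every subscribed queue gets its own copy of the serialized event.
        message = json.dumps({"type": event_type, "payload": payload})
        for queue in self.queues.values():
            queue.append(message)

    def receive(self, queue_name):
        queue = self.queues[queue_name]
        return json.loads(queue.popleft()) if queue else None


def detect_intent(text):
    # Hypothetical NLP contract: text in, intent and entities out.
    intent = "book_flight" if "flight" in text.lower() else "unknown"
    return {"intent": intent, "entities": {}}


topic = InMemoryTopic()
topic.subscribe("conversation-service")
topic.subscribe("action-service")
topic.publish("IntentDetected", detect_intent("book flight"))
```

Each downstream service drains its own queue at its own pace, which is the loose coupling the third step is after.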

A common mistake is over-decomposing into 50+ services, leading to high latency from network hops and complex monitoring. Instead, aim for 5-10 core services per chatbot project, balancing granularity with performance. This approach enhances scalability, as seen in systems handling millions of inferences daily, while integrating with inference platforms for AI models.

API Gateway Design

AWS API Gateway reduces chatbot latency by 35% through intelligent routing, caching, and JWT validation for 10+ downstream microservices. As a central entry point, it manages traffic to services like NLP and orchestration, with AWS documenting a 99.95% availability commitment for the service. Setup involves creating REST APIs, integrating Lambda authorizers for secure access, and enabling stage-level response caching.

| Gateway | Price | Features | Latency | Best For |
|---|---|---|---|---|
| AWS API Gateway | $3.50/million requests | Lambda integration, caching, throttling | 100-200ms | Serverless chatbots on AWS |
| Azure API Management | $0.25/hour + usage | Logic Apps, AAD auth, analytics | 150-250ms | Hybrid Azure bot projects |
| Kong | Open-source free, Enterprise $250/month | Plugins, service mesh, rate limiting | 50-150ms | On-prem or multi-cloud scalability |

For AWS setup, deploy a Lambda authorizer with code like:

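    // Lambda authorizer sketch (Node.js); real JWT verification must replace the placeholder check.
    export async function handler(event) {
      // TOKEN authorizers receive the header in event.authorizationToken; REQUEST authorizers use event.headers.
      const token = event.authorizationToken ?? event.headers?.Authorization;
      // Verify JWT logic here (signature, expiry, audience); a missing token is denied.
      const effect = token ? 'Allow' : 'Deny';
      return {
        principalId: 'user',
        policyDocument: {
          Version: '2012-10-17',
          Statement: [{ Action: 'execute-api:Invoke', Effect: effect, Resource: event.methodArn }],
        },
      };
    }

This validates tokens before routing to microservices, supporting frontend-backend separation. Gateways boost resilience with retries and circuit breakers, ideal for high-traffic chatbots integrating OpenAI prompts or EC2-hosted inference.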
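The JWT verification step that the authorizer leaves open could look roughly like the following. This is a minimal sketch that assumes HS256 tokens signed with a shared secret and uses only the Python standard library; production systems typically use a maintained JWT library and often asymmetric keys. The function names are illustrative, and `sign_hs256` exists only to produce input for the verifier.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def _b64url_decode(part: str) -> bytes:
    return base64.urlsafe_b64decode(part + "=" * (-len(part) % 4))


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Create a compact HS256 token (used here only to produce test input)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> Optional[dict]:
    """Return the claims if signature and expiry check out, else None."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
    except ValueError:
        return None  # wrong segment count, bad base64, or bad JSON
    if not isinstance(header, dict) or not isinstance(claims, dict):
        return None
    if header.get("alg") != "HS256":
        return None  # never let the token choose the algorithm
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, signature):
        return None
    exp = claims.get("exp")
    current = time.time() if now is None else now
    if isinstance(exp, (int, float)) and exp <= current:
        return None  # token has expired
    return claims
```

An authorizer would map a returned claims dictionary to an Allow policy and `None` to Deny.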

Essential Microservices for Chatbots

Core chatbot microservices include NLP Processing (Azure OpenAI GPT-4o) and Conversation State (DynamoDB), each optimized for specific workloads. These form the backbone of a scalable chatbot architecture, handling intent recognition, response generation, and session persistence. Other key services cover user authentication, orchestration gateway, monitoring, and analytics modules. Together, they enable modular design with independent scaling. For instance, NLP routes to high-throughput inference while state management uses caching for low-latency access. The related guide “How to optimize messenger bots for high traffic” explains techniques like these in detail. This setup supports 10,000 concurrent users, reducing downtime through resilience patterns like circuit breakers.

Previewing deeper, the NLP Processing service leverages Azure OpenAI for advanced prompt engineering and vector search, achieving sub-second responses. Meanwhile, Conversation State Management employs DynamoDB for durable storage and Redis for ephemeral data, ensuring context across multi-turn dialogues. Integration via API gateway and Lambda functions provides flexibility, allowing teams to deploy updates without full restarts. Such microservices suit enterprise chatbots in e-commerce or customer support, where 99.9% uptime meets varying loads.
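Since circuit breakers come up repeatedly as a resilience pattern, here is a minimal sketch of one. The class name, thresholds, and injectable clock are assumptions made for illustration; real deployments usually rely on a service mesh or a tested library instead of hand-rolled code.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after_s=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                raise CircuitOpenError("downstream call skipped")
            self.opened_at = None  # half-open: allow one trial call
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            raise
        self.failures = 0  # any success closes the circuit fully
        return result
```

Wrapping calls to a slow NLP or state service this way lets the bot fail fast with a fallback reply instead of queuing requests behind a dead dependency.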

NLP Processing Service

Azure OpenAI GPT-4o processes 5,000 intents/second with 92% accuracy using vector embeddings stored in Azure AI Search. This NLP service starts with a dedicated Azure OpenAI deployment, configured for GPT-4o model with 128k context window to handle complex queries. Developers provision via Azure portal, setting rate limits and custom domains for secure access. The service integrates with Azure Functions for serverless execution, triggering on inbound messages from the API gateway.

Key to performance is prompt engineering. A template structures inputs like “User: {input}. Context: {history}. Respond as helpful assistant.” This feeds into the pipeline: tokenization, embedding generation via text-embedding-3-large, semantic search in Azure AI Search, and generation with GPT-4o. For visualization, consider this simplified NLP pipeline diagram:

- Input Message → Tokenization Module

- Vector Embeddings → Azure AI Search Index

- Intent Matching → GPT-4o Inference

- Response Output → Orchestration Gateway
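The prompt template described in this section can be filled with a small helper. This is a sketch under assumptions: the `build_prompt` name, the `max_turns` limit, and the `" | "` separator between history turns are illustrative choices, not part of any SDK.

```python
PROMPT_TEMPLATE = "User: {input}. Context: {history}. Respond as helpful assistant."


def build_prompt(user_input, history, max_turns=3):
    """Fill the template with the latest message and the last few turns."""
    recent = " | ".join(history[-max_turns:]) if history else "none"
    return PROMPT_TEMPLATE.format(input=user_input, history=recent)


print(build_prompt("Book a flight", ["Hi", "Hello, how can I help?"]))
```

Capping the history keeps the prompt size predictable, which matters for both latency and token cost.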

Latency benchmarks show Azure setup at 450ms p95 versus 1,200ms for direct OpenAI API, thanks to regional endpoints and caching. Code snippet for Azure Function integration:

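    import azure.functions as func
    from openai import AzureOpenAI

    # Placeholder endpoint and key; api_version is an assumed value, use one your resource supports.
    client = AzureOpenAI(azure_endpoint="your-endpoint", api_key="key", api_version="2024-06-01")

    def main(req: func.HttpRequest) -> func.HttpResponse:
        prompt = req.params.get("prompt")
        if not prompt:
            return func.HttpResponse("Missing 'prompt' parameter", status_code=400)
        # "model" is the name of the Azure OpenAI deployment.
        response = client.chat.completions.create(
            model="gpt-4o", messages=[{"role": "user", "content": prompt}]
        )
        return func.HttpResponse(response.choices[0].message.content)

This microservice scales via auto-scaling Functions, supporting chatbot scalability in production.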

Conversation State Management

AWS DynamoDB + Redis ElastiCache stores 1M active conversations with <50ms read latency using TTL-based session management. This service persists dialogue history, user preferences, and context across sessions, crucial for multi-turn chatbots. It uses a single-table DynamoDB design with partition keys like userId#conversationId and sort keys for timestamps, enabling efficient queries.

Comparing databases for chatbot workloads:

| Database | Consistency | Cost (per 1M reads) | Workload Fit |
|---|---|---|---|
| DynamoDB | Eventual (Strong opt.) | $0.25 | High-scale, durable sessions |
| Cosmos DB | Tunable (Strong opt.) | $0.35 | Global dist., multi-model |
| Redis | Eventual | $0.10 | Caching, low-latency temp data |

DynamoDB excels with GSI for indexing: one GSI on userId for conversation lists, another on conversationId for message retrieval. Redis handles hot sessions with 1h TTL, offloading DynamoDB. Implementation code for state update via Lambda:

    import boto3

    dynamodb = boto3.resource('dynamodb')
    table = dynamodb.Table('ChatState')

    def save_state(user_id, conv_id, state):
        # Composite partition key: one item per user/conversation pair.
        table.put_item(Item={'PK': f'user#{user_id}#conv#{conv_id}', 'data': state})

A FusionCharts dashboard from WebMob Technologies visualizes metrics: active sessions, latency histograms, and eviction rates. Redis clusters scale to 1TB, while DynamoDB on-demand capacity ensures resilience during spikes, fitting dynamic chatbot architecture.
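The split between a TTL-bound cache for hot sessions and a durable table for everything else can be sketched as a read-through cache. In this illustration two plain dictionaries stand in for Redis and DynamoDB, and `SessionStore` with its injectable clock is a hypothetical helper, not an AWS or Redis API.

```python
import time


class SessionStore:
    """Read-through cache: hot sessions held with a TTL, all sessions in a durable store."""

    def __init__(self, ttl_s=3600, clock=time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock
        self.cache = {}    # stand-in for Redis: key -> (expires_at, state)
        self.durable = {}  # stand-in for the DynamoDB table: key -> state

    @staticmethod
    def key(user_id, conv_id):
        return f"user#{user_id}#conv#{conv_id}"

    def save(self, user_id, conv_id, state):
        k = self.key(user_id, conv_id)
        self.durable[k] = state  # write the durable copy first
        self.cache[k] = (self.clock() + self.ttl_s, state)

    def load(self, user_id, conv_id):
        k = self.key(user_id, conv_id)
        entry = self.cache.get(k)
        if entry and entry[0] > self.clock():
            return entry[1]  # cache hit
        self.cache.pop(k, None)  # expired or never cached
        state = self.durable.get(k)
        if state is not None:
            self.cache[k] = (self.clock() + self.ttl_s, state)  # re-warm the cache
        return state
```

With a one-hour TTL, an idle conversation drops out of the cache on its own while its history stays available in the durable store.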

Scaling Strategies

Horizontal scaling handles chatbot traffic spikes from 100 to 100,000 concurrent users in under 60 seconds using Kubernetes HPA. This approach adds microservice replicas dynamically to maintain performance during peak loads, such as viral campaigns or product launches. Vertical scaling boosts individual pod resources, while serverless options like AWS Lambda eliminate fixed capacity concerns for sporadic NLP inference tasks.

Teams often combine these with API gateway rate limiting and Redis caching for user sessions to optimize costs. For instance, a chatbot architecture on EKS uses monitoring tools to trigger autoscaling based on dialogue queue depth, ensuring resilience without overprovisioning. The related article “Traffic Surges in Chatbots: Handling Techniques” covers additional strategies for peak load management. Horizontal pod autoscaling, including setup for custom metrics like queue length in microservices environments, is detailed in the next section.

In practice, orchestration platforms like Kubernetes provide flexibility for deployment across AWS EC2 or Azure AKS. This setup supports scalability for conversational AI bots handling real-time NLU processing, with data storage in DynamoDB scaling independently. Expert insight: Monitor frontend latency and backend throughput to fine-tune thresholds, achieving 99.9% uptime during surges.
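Rate limiting at the gateway is commonly implemented as a token bucket, which allows short bursts while capping the sustained request rate. The sketch below is a generic illustration with hypothetical names and an injectable clock, not the configuration interface of any particular gateway.

```python
import time


class TokenBucket:
    def __init__(self, rate_per_s, burst, clock=time.monotonic):
        self.rate_per_s = rate_per_s  # tokens added per second (steady-state limit)
        self.burst = burst            # bucket capacity (largest allowed burst)
        self.clock = clock
        self.tokens = float(burst)
        self.updated_at = clock()

    def allow(self, cost=1.0):
        """Return True and spend tokens if the request fits, else False."""
        now = self.clock()
        elapsed = now - self.updated_at
        # Refill for the time that has passed, never beyond capacity.
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate_per_s)
        self.updated_at = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A rejected request would typically be answered with HTTP 429 so that the client can back off and retry.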

Horizontal Pod Autoscaling

Kubernetes HPA scales NLP pods from 5 to 500 based on 70% CPU utilization or custom queue depth metrics. This autoscaling mechanism targets microservice replicas in chatbot systems, responding to traffic from user interactions in seconds. Deployed on EKS or AKS, it integrates with Prometheus monitoring for metrics like pending dialogues in Apache Kafka queues.

- Deploy chatbot services to EKS/AKS clusters, separating NLU, dialogue logic, and response generation into distinct microservices.

- Configure HPA with custom metrics such as dialogue queue length, exposed through a custom metrics adapter such as Prometheus Adapter (metrics-server alone supplies only CPU and memory).

- Set min/max replicas, for example minReplicas of 5 and maxReplicas of 500, with targets at 70% CPU or 10 queued requests per pod.

Here is a sample YAML config for HPA:

    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      name: chatbot-nlp-hpa
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: nlp-service
      minReplicas: 5
      maxReplicas: 500
      metrics:
        - type: Resource
          resource:
            name: cpu
            target:
              type: Utilization
              averageUtilization: 70
        - type: Pods
          pods:
            metric:
              name: queue_depth
            target:
              type: AverageValue
              averageValue: "10"

Case study: Pramesh Jain’s TechnoPreneur bot scaled during a product launch, handling 250,000 users by ramping inference pods via HPA on AWS EKS. Integrated with API gateway and DynamoDB for state, it reduced latency from 5 seconds to 200ms. This modular design ensured performance without manual intervention, showcasing scalability for enterprise chatbots.
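The scaling rule behind an HPA like the one in this section follows the formula in the Kubernetes documentation: desired replicas equal the current count multiplied by the ratio of observed to target metric, rounded up. The sketch below is a simplified model of that rule; the 10% tolerance mirrors the documented default, and stabilization windows and multi-metric handling are deliberately left out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=5, max_replicas=500, tolerance=0.1):
    """Simplified HPA rule: ceil(current * observed / target), clamped to bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        wanted = current_replicas  # close enough to target: no change
    else:
        wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# 5 pods at 140% average CPU against a 70% target -> double the pods.
print(desired_replicas(5, 140, 70))
# 8 pods with 25 queued requests each against a target of 10 per pod.
print(desired_replicas(8, 25, 10))
```

When several metrics are configured, the controller evaluates each one and uses the largest resulting replica count.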

Deployment and Orchestration

AWS ECS blue-green deployments enable zero-downtime updates across 15 microservices using CodePipeline CI/CD integrating with GitHub Actions. This approach suits chatbot architectures by routing traffic seamlessly between service versions during updates. Teams manage microservice orchestration for NLP and NLU modules without interrupting user conversations. For instance, a chatbot handling thousands of queries per minute switches to new inference logic instantly, maintaining scalability and resilience.

Container platforms like AWS ECS Fargate, Azure Container Apps, and Kubernetes dominate modern deployment strategies. The 2024 CNCF survey reveals 68% container adoption among organizations, driven by needs for flexible infrastructure in AI projects. Chatbot developers choose based on factors such as cold start times and pricing, ensuring high performance for real-time interactions. A comparison table highlights key differences for microservices in chatbots.

| Platform | Price | Cold Start | Best For Chatbots |
|---|---|---|---|
| AWS ECS Fargate | Pay per vCPU and memory | 10-30 seconds | Serverless container scaling for API gateways |
| Azure Container Apps | Consumption-based billing | 5-15 seconds | Event-driven bots with Logic Apps integration |
| Kubernetes | Cluster management costs | 1-5 seconds | Complex orchestration across NLP services |

Implementing a CI/CD pipeline streamlines updates for chatbot microservices. This 7-step process uses GitHub Actions for triggers, AWS CodeBuild for compilation, and ECS for deployment, supporting blue-green strategies. It ensures resilience in production environments with frontend and backend services like caching via Redis or storage in DynamoDB.

- A commit to the GitHub repository triggers the workflow.

- GitHub Actions runs tests and security scans.

- AWS CodeBuild compiles Docker images for microservices.

- Push images to Amazon ECR registry.

- CodePipeline approves and starts deployment.

- ECS performs blue-green swap on Fargate tasks.

- Monitor with CloudWatch and rollback if issues arise.
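The last two steps, the blue-green swap and the rollback, reduce to a small state machine. The sketch below models only that logic with a hypothetical `BlueGreenRouter`; in a real pipeline the traffic switch is performed by the deployment service and load balancer, not by application code.

```python
class BlueGreenRouter:
    """Tracks which version receives live traffic and which one to fall back to."""

    def __init__(self, blue_version):
        self.live = blue_version
        self.previous = None

    def deploy(self, green_version, health_check):
        # Only shift traffic once the new version passes its health check.
        if not health_check(green_version):
            return False  # keep serving the current version
        self.previous, self.live = self.live, green_version
        return True

    def rollback(self):
        # Return traffic to the version that was live before the last swap.
        if self.previous is None:
            return False
        self.live, self.previous = self.previous, None
        return True
```

Keeping the previous version running until the new one has proven itself is what makes the rollback nearly instant.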

Expert teams prioritize orchestration tools for handling service dependencies in chatbots. Integration with API gateways and monitoring ensures smooth data flows between modules, boosting overall system performance during peak loads.

Monitoring and Observability

A full observability stack of CloudWatch metrics, X-Ray tracing, and Prometheus alerting can detect 95% of chatbot issues before user impact. In a microservices architecture for chatbots, monitoring each service like NLP processing or conversation flow ensures quick issue resolution. Tools track performance metrics across API gateway, inference modules, and backend storage to maintain scalability. For instance, high latency in NLU services can degrade user experience, so real-time dashboards help teams respond fast. Combining logs, metrics, and traces provides a complete view of the system, from user queries to response generation. This setup supports resilience in production environments with services deployed on Lambda functions or EC2 instances.

Choosing the right monitoring stack depends on your cloud provider and chatbot metrics needs, such as conversation success rates or error counts in dialog management. The table below compares popular options for microservices in chatbot projects.

| Tool | Cost/mo | Chatbot Metrics | Alerting |
|---|---|---|---|
| AWS CloudWatch | $0.30/GB ingested | Custom Lambda invocations, DynamoDB throughput | Alarms on CPU > 80% |
| Azure Monitor | $2.76/GB data | Logic Apps runs, Cosmos DB requests | Alerts for failed bot flows |
| Datadog | $15/host | Trace NLU latency, track Redis cache hits | Real-time notifications on drop-offs |

Follow these 6 best practices to enhance monitoring in your chatbot microservices deployment and achieve high availability.

- Define custom metrics like conversation drop-off rates to spot issues in user flow.

- Implement distributed tracing across services using X-Ray or Jaeger for end-to-end visibility.

- Build SLO dashboards with Grafana to monitor 99.9% uptime targets.

- Set up log aggregation with ELK stack for debugging orchestration and gateway errors.

- Enable auto-scaling alerts tied to inference service CPU usage.

- Integrate health checks in API interfaces for proactive service restarts.
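Several of these practices come down to simple arithmetic on raw counters. The sketch below shows three such calculations: a conversation drop-off rate, a nearest-rank p95 latency, and the downtime allowed by an uptime SLO. The function names and sample values are illustrative assumptions; monitoring platforms compute equivalents with their own query languages.

```python
import math


def drop_off_rate(started, completed):
    """Share of conversations that were started but not completed."""
    return 0.0 if started == 0 else (started - completed) / started


def percentile(values, pct):
    """Nearest-rank percentile, e.g. pct=95 for p95 latency."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def error_budget_minutes(slo, window_days=30):
    """Downtime allowed by an uptime SLO over the window, in minutes."""
    return (1 - slo) * window_days * 24 * 60


latencies_ms = [100, 200, 300, 400, 1000]  # hypothetical samples
print(drop_off_rate(200, 150), percentile(latencies_ms, 95))
print(round(error_budget_minutes(0.999), 1))
```

A 99.9% target over 30 days leaves roughly 43 minutes of downtime, which is a useful yardstick when setting alert thresholds.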

Power Apps Integration for Business Dashboards

Power Apps offers a seamless way to create business dashboards from chatbot monitoring data, pulling metrics from Azure services or external stacks. Connect to Azure Monitor for real-time views of microservice performance, such as 98% query resolution rates in your NLP module. Users build custom interfaces to visualize conversation analytics, drop-off points, and scalability trends without coding. For example, a sales team dashboard displays daily bot interactions stored in Cosmos DB, with filters for peak hours. This integration enables non-technical users to track key indicators like average response time under 2 seconds.

In a typical setup, embed Grafana panels or Datadog charts into Power Apps canvases for unified observability. Link to backend data flows from Logic Apps, showing how prompts and user intents impact performance. Teams gain insights into resilience, like recovery time after caching failures in Redis. This approach fits hybrid infrastructures with AWS Lambda alongside Azure, ensuring comprehensive views across your chatbot architecture. Regular updates to these dashboards support ongoing development and fine-tuning of the platform.

Frequently Asked Questions

What are Microservices for Chatbots: Modular Design and Scaling?

Microservices for Chatbots: Modular Design and Scaling refers to an architectural approach where chatbot applications are broken down into small, independent services. Each service handles a specific function like natural language processing, user authentication, or response generation, allowing for modular design that enhances flexibility and enables efficient scaling based on demand.

Why use Modular Design in Microservices for Chatbots: Modular Design and Scaling?

Modular design in Microservices for Chatbots: Modular Design and Scaling allows developers to build, deploy, and update individual components without affecting the entire system. This promotes reusability, easier maintenance, and faster iteration, making chatbots more adaptable to evolving user needs and business requirements.

How does scaling work in Microservices for Chatbots: Modular Design and Scaling?

Scaling in Microservices for Chatbots: Modular Design and Scaling is achieved by independently scaling specific services, such as increasing instances of the conversation handler during peak traffic. Technologies like Kubernetes or Docker Swarm orchestrate this, ensuring high availability and performance without over-provisioning resources.

What are the benefits of Microservices for Chatbots: Modular Design and Scaling over monolithic architectures?

Microservices for Chatbots: Modular Design and Scaling offer benefits like fault isolation (one failing service doesn’t crash the whole chatbot), technology diversity for choosing the best tools per module, and seamless scaling. This contrasts with monolithic setups that are harder to scale and maintain as complexity grows.

What challenges arise when implementing Microservices for Chatbots: Modular Design and Scaling?

Challenges in Microservices for Chatbots: Modular Design and Scaling include managing inter-service communication via APIs, ensuring data consistency across modules, and handling increased operational complexity with tools like service meshes. Proper monitoring and CI/CD pipelines are essential to mitigate these issues.

How can teams get started with Microservices for Chatbots: Modular Design and Scaling?

To start with Microservices for Chatbots: Modular Design and Scaling, identify core chatbot functions to decompose into services, use frameworks or runtimes such as Spring Boot or Node.js for development, containerize with Docker, and deploy on cloud platforms like AWS or Google Cloud for auto-scaling capabilities.