Feedback for AI Systems: Importance and Improvement Techniques

As artificial intelligence changes quickly, good human feedback is key to improving AI systems. Techniques from machine learning and reinforcement learning, supported by annotation tools such as Label Studio, can significantly improve model performance. This article looks at why feedback matters in AI development and discusses ways to improve accuracy, improve user experience, and address ethical issues.

Definition and Purpose

AI feedback systems gather information from user interactions to constantly improve machine learning models.

These systems use different techniques to collect performance data and user opinions.

For instance, A/B testing can determine which model variation yields higher user satisfaction. Tracking how often users click links and how long they stay on a page shows how they interact with the content; tools like Google Analytics or Mixpanel support this tracking.

Direct user surveys can gather detailed feedback, helping developers improve algorithms based on actual user experiences and choices.
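As a rough illustration of the A/B testing idea above, the sketch below compares the satisfaction rates of two model variants with a two-proportion z-test. The function name, the "satisfied" counts, and the 0.05 cutoff are assumptions made for this example; they do not come from Google Analytics, Mixpanel, or any tool named in this article.

```python
import math


def ab_test_z(success_a, total_a, success_b, total_b):
    """Two-proportion z-test for an A/B comparison.

    Returns (z, two_sided_p_value). Positive z means variant B has the
    higher observed success rate.
    """
    rate_a = success_a / total_a
    rate_b = success_b / total_b
    # Pooled rate under the null hypothesis that both variants perform equally.
    pooled = (success_a + success_b) / (total_a + total_b)
    variance = pooled * (1 - pooled) * (1 / total_a + 1 / total_b)
    if variance == 0:
        raise ValueError("z-test is undefined when the pooled rate is 0 or 1")
    z = (rate_b - rate_a) / math.sqrt(variance)
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts of "satisfied" ratings for two model variants.
z, p = ab_test_z(success_a=400, total_a=1000, success_b=460, total_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 0.05: {p < 0.05}")
```

A small p-value only says the difference is unlikely to be noise; whether the difference is large enough to justify shipping the variant remains a product decision.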

Role of Feedback in AI Development

Feedback drives AI development: it informs regular updates and keeps AI systems working well for the people who use them.

User feedback helps identify pain points, allowing developers to create targeted updates.

For example, using platforms like UserTesting can help collect immediate feedback from different user groups.

Adding surveys or feedback forms directly in the app can make it easier to quickly address user interface problems or requests for new features.

Creating closed feedback loops, where users are told how their suggestions were used, builds collaboration and raises satisfaction.

A feedback system that adjusts based on input improves the AI’s usefulness and accuracy.

Importance of Feedback in AI

Feedback systems matter because they directly impact how accurate AI models are and strongly influence how users interact with them.

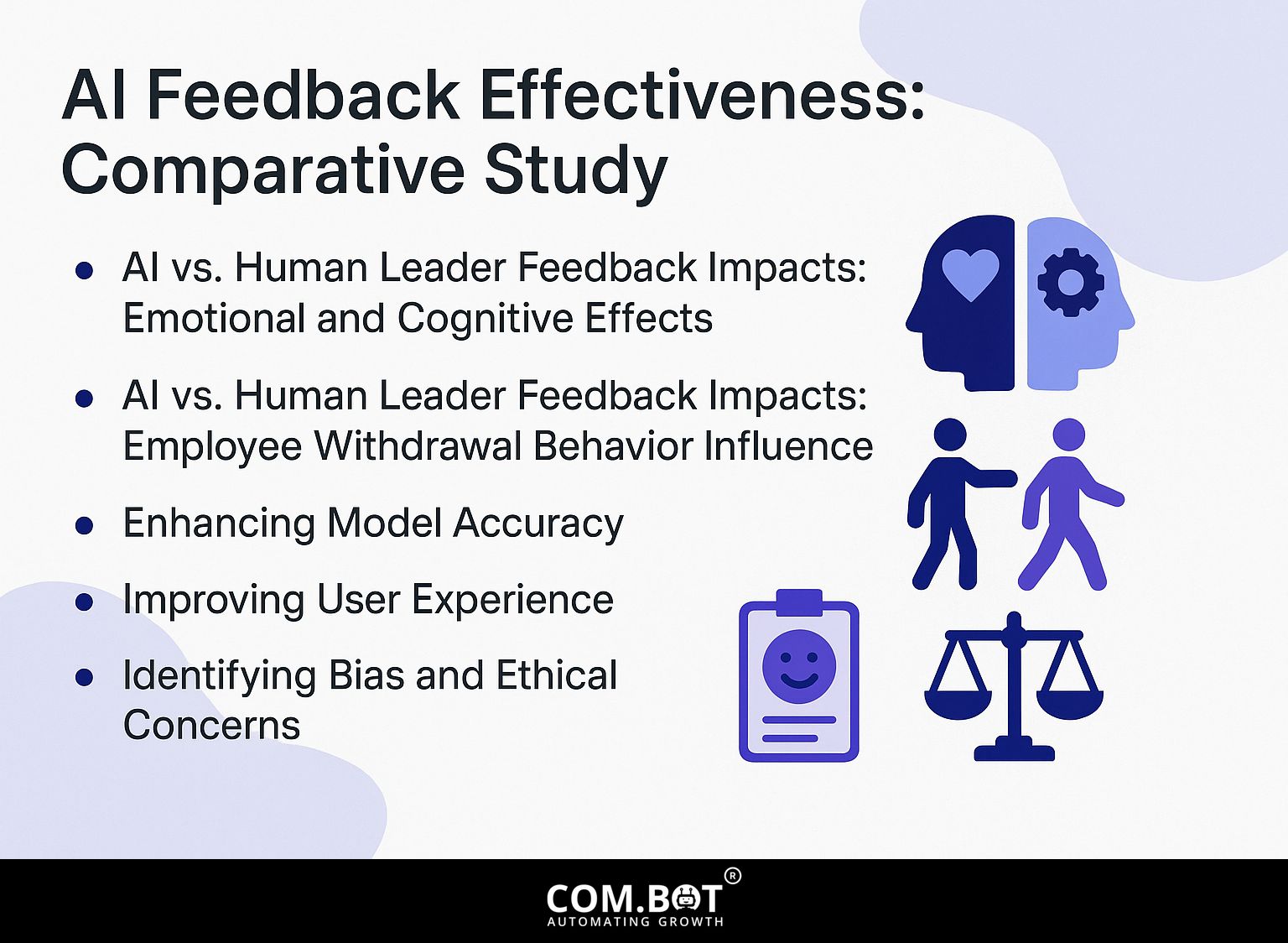

AI Feedback Effectiveness: Comparative Study

[Figure: AI vs. Human Leader Feedback Impacts. Panels: Emotional and Cognitive Effects; Employee Withdrawal Behavior Influence]

The AI Feedback Effectiveness: Comparative Study compares feedback from AI systems with feedback from human leaders in terms of emotional and cognitive impact, as well as its effect on employee withdrawal behavior. This data helps show how different feedback sources affect what employees think and do.

AI vs. Human Leader Feedback Impacts focuses on two main areas: emotional and cognitive effects, and employee withdrawal behavior. The emotional and cognitive effects data reveals that negative feedback from human leaders induces a higher level of shame (3.47) compared to AI systems (3.05). This indicates that employees may perceive AI feedback as less personally critical, potentially due to its impersonal nature.

The pattern is similar for self-confidence. AI feedback is associated with a smaller reduction in self-efficacy (2.37) than human leader feedback (2.61). This suggests that while AI feedback can still affect self-confidence, it does so to a lesser degree, perhaps because employees see AI feedback as more objective and less judgmental.

Regarding Employee Withdrawal Behavior Influence, the study measured how feedback induces withdrawal behaviors through shame and through self-efficacy. The influence of leader feedback on withdrawal through shame is reported at 0.1, while the influence of AI feedback through self-efficacy is slightly higher at 0.12. Both pathways, whether through feelings of shame or through reduced self-confidence, slightly increase withdrawal tendencies, with the AI pathway through self-efficacy being marginally stronger.

Overall, the study suggests that both AI and human leader feedback can have emotional and cognitive effects on employees. AI feedback tends to be perceived as less emotionally impactful, although its indirect effect on withdrawal through self-efficacy is marginally larger. Organizations using AI to deliver feedback should balance fairness with empathy to lessen negative impacts on employee emotions and productivity.

Enhancing Model Accuracy

Using user feedback can improve model accuracy, by up to 30% in some reported cases such as customer support AI.

Companies can greatly improve their AI models by gathering and studying user feedback in a structured manner. For instance, using structured surveys after chatbot interactions allows developers to identify areas of improvement.

Fixing the issues raised in feedback can improve response time, user satisfaction ratings, and resolution rates. Tools like SurveyMonkey for surveys and Google Analytics for behavior tracking provide the data for regular updates, so that AI systems keep improving and continue to meet user needs.

Improving User Experience

Implementing user feedback in AI systems can increase user satisfaction, by as much as 25% in some reported cases, particularly in customer service applications.

Using feedback from users helps AI systems learn and improve how they work. For instance, sentiment analysis tools can assess customer reactions through surveys and chat logs.

Tools like TextBlob and VADER score the sentiment of text data, giving a quick read on whether reactions are positive or negative. Regularly updating algorithms based on user feedback reduces mistakes, allowing AI to respond more accurately.

Organizing feedback in a clear way, like using Google Forms for user comments, can make this task easier, leading to ongoing progress and better customer loyalty.

Identifying Bias and Ethical Concerns

Regular feedback helps identify biases in AI systems, resulting in more ethical and dependable results.

For instance, in facial recognition technologies, feedback loops have revealed racial and gender biases. Businesses such as IBM and Google have worked on fixing these biases by using more diverse image datasets and asking users for suggestions on how to improve.

Implementing tools like Google’s What-If Tool allows developers to visualize model performance across different demographic groups. Regularly reviewing model outcomes with stakeholders can further help identify skewed results.

By using ethical AI guidelines and regular feedback methods, organizations can improve fairness and accountability in their systems.
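One simple way to check for the skewed results described above is to compare accuracy across demographic groups. The following is a minimal sketch using only the Python standard library; it is not the What-If Tool's API, and the record layout `(group, predicted, actual)` and the group names are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group.

    records: iterable of (group, predicted_label, actual_label) tuples.
    Returns a dict mapping each group to its accuracy in [0, 1].
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best and worst group accuracy."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical evaluation records collected from user feedback.
records = [
    ("group_a", "match", "match"),
    ("group_a", "no_match", "no_match"),
    ("group_b", "match", "no_match"),
    ("group_b", "match", "match"),
]
per_group = accuracy_by_group(records)
print(per_group, "gap:", accuracy_gap(per_group))
```

A large gap is a prompt for closer review with stakeholders rather than proof of bias on its own, since small groups can produce noisy accuracy estimates.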

Types of Feedback Mechanisms

There are two primary types of feedback systems in AI: those that involve human input and those that work independently. Each has its own purpose.

Human-in-the-Loop Systems

Human-in-the-loop systems use human expertise to review and correct AI outputs, which leads to better decisions.

In healthcare, for example, these systems can be essential for identifying medical conditions. Clinicians use AI algorithms to analyze medical images, but final decisions rest with radiologists who interpret results, ensuring accuracy.

IBM Watson Health is a tool that shows how AI can work with doctors to tailor medical treatment for individuals. In drug development, researchers use computer-generated data, but humans need to check these results carefully before starting clinical trials.

Working together improves efficiency and leads to more dependable results in challenging situations.

Automated Feedback Loops

Systems that automatically gather performance information allow updates to AI systems immediately without human involvement.

For example, machine learning frameworks like TensorFlow and PyTorch can be used to build these loops, retraining or fine-tuning models as new performance data arrives.

By integrating monitoring tools like Prometheus, performance data can be collected and analyzed in near real time. When metrics fall below preset thresholds, the system can automatically adjust parameters such as the learning rate, or trigger retraining, to restore model accuracy.

Companies like Google and Facebook use these feedback systems to keep improving their algorithms, so that the user experience keeps getting better on its own without requiring manual oversight.
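The threshold-based trigger described above can be sketched in a few lines. This example is a standalone stand-in, not a Prometheus integration: the class name `FeedbackMonitor`, the 0.8 threshold, and the window size are all assumptions chosen for illustration.

```python
from collections import deque


class FeedbackMonitor:
    """Tracks a rolling 'helpful' rate and flags when it drops too low."""

    def __init__(self, threshold=0.8, window=50):
        self.threshold = threshold
        # Only the most recent `window` feedback signals are kept.
        self.scores = deque(maxlen=window)

    def record(self, was_helpful):
        """Store one feedback signal (True = user found the answer helpful)."""
        self.scores.append(1.0 if was_helpful else 0.0)

    def rolling_rate(self):
        """Return the helpful rate over the window, or None if it is empty."""
        if not self.scores:
            return None
        return sum(self.scores) / len(self.scores)

    def needs_retraining(self):
        """True once the window is full and the rate is below the threshold."""
        window_full = len(self.scores) == self.scores.maxlen
        return window_full and self.rolling_rate() < self.threshold


monitor = FeedbackMonitor(threshold=0.75, window=4)
for signal in (True, True, False, False):
    monitor.record(signal)
print(monitor.rolling_rate(), monitor.needs_retraining())
```

Waiting for a full window before raising the flag avoids reacting to the first few noisy signals; in a real pipeline the flag would start a retraining job or alert rather than print a value.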

Techniques for Collecting Feedback

To improve AI development, different methods for gathering feedback are used, like user surveys and data labeling.

Surveys and User Interviews

Research shows that using structured interviews can increase user interaction data by over 40%.

To gather feedback quickly, use tools like Google Forms (free) for simple surveys or Typeform (starting at $35/month) to create a more engaging survey experience.

Begin by writing open-ended questions that let users describe their experience in detail. For a balanced approach, combine this with user interviews to dig deeper into specific issues, scheduling 30-minute discussions using platforms like Zoom.

This method increases your data collection and builds user confidence, resulting in better data analysis and improved product features.

Data Annotation and Labeling

Data annotation tools like Label Studio simplify collecting feedback, improving training datasets for more effective model results.

With Label Studio, users can easily upload their datasets and define specific labeling tasks. For instance, if you’re working on a sentiment analysis project, you can create labels like ‘positive,’ ‘negative,’ and ‘neutral.’

This tool can handle various kinds of data, like images, text, and audio, making it adaptable. By connecting it with well-known machine learning tools like TensorFlow and PyTorch, you can simplify and speed up the process of training models.

The collaborative features allow teams to work together in real time, ensuring high-quality annotations and rapid feedback loops.
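When several annotators label the same item, their labels have to be reconciled before training. The sketch below shows one common approach, majority voting with an agreement score, using the sentiment labels mentioned above. It does not use Label Studio's API or export format; the input layout (item id mapped to a list of labels) and the 0.5 agreement cutoff are assumptions for illustration.

```python
from collections import Counter


def aggregate_labels(annotations, min_agreement=0.5):
    """Resolve multiple annotations per item by majority vote.

    annotations: dict mapping item id to a list of labels from annotators.
    Returns a dict mapping item id to (label, agreement). The label is None
    when no label is chosen by more than `min_agreement` of the annotators,
    which marks the item for manual review.
    """
    resolved = {}
    for item_id, labels in annotations.items():
        if not labels:
            resolved[item_id] = (None, 0.0)
            continue
        top_label, top_count = Counter(labels).most_common(1)[0]
        agreement = top_count / len(labels)
        label = top_label if agreement > min_agreement else None
        resolved[item_id] = (label, agreement)
    return resolved


# Hypothetical annotations for two text snippets.
annotations = {
    "text_1": ["positive", "positive", "neutral"],
    "text_2": ["negative", "positive"],
}
for item_id, (label, agreement) in aggregate_labels(annotations).items():
    print(item_id, label, round(agreement, 2))
```

Items that come back with no label are good candidates for a second review round, which is where the rapid feedback loop between annotators pays off.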

Analyzing Feedback Data

Studying feedback data is important for knowing user opinions. There are two main ways to do this:

- Qualitative analysis

- Quantitative analysis

Qualitative vs. Quantitative Analysis

Qualitative analysis looks at how users feel, while quantitative analysis gives numerical data about performance.

Qualitative methods, like user interviews and focus groups, let us thoroughly investigate people’s opinions and reasons. For example, using tools like Zoom for video interviews lets you record detailed feedback.

In contrast, quantitative analysis often employs surveys with Likert scales to gather data from a larger audience, allowing for statistical analysis. Tools like Google Forms or SurveyMonkey can facilitate this process.

Combining both methods gives a fuller picture: qualitative details add depth to the numbers, resulting in more informed choices.
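For the quantitative side, Likert-scale responses can be summarized with a few standard statistics. This is a minimal sketch using only the Python standard library; the function name, the 1-to-5 scale, and the sample responses are assumptions for this example rather than output from Google Forms or SurveyMonkey.

```python
from collections import Counter
from statistics import mean, median


def summarize_likert(responses, scale_max=5):
    """Summarize Likert responses on a 1..scale_max scale.

    Out-of-range values are ignored. 'top_two_box' is the share of
    responses in the two highest categories.
    """
    valid = [r for r in responses if 1 <= r <= scale_max]
    if not valid:
        raise ValueError("no valid responses to summarize")
    counts = Counter(valid)
    top_two = sum(1 for r in valid if r >= scale_max - 1) / len(valid)
    return {
        "n": len(valid),
        "mean": mean(valid),
        "median": median(valid),
        "top_two_box": top_two,
        "distribution": {k: counts.get(k, 0) for k in range(1, scale_max + 1)},
    }


# Hypothetical answers to "How satisfied were you with the assistant?"
summary = summarize_likert([5, 4, 4, 3, 2, 5, 1, 4])
print(summary)
```

Reporting the distribution alongside the mean matters because Likert data is ordinal: the same average can hide very different splits between satisfied and dissatisfied users.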

Tools and Techniques for Analysis

Programs such as Tableau ($70/month) and Excel (starting at $6/month) are useful for examining feedback data and drawing helpful conclusions.

In addition to these options, consider using Google Data Studio (free, now called Looker Studio) for creating interactive dashboards.

Each tool offers unique features:

- Tableau excels at visual analytics with its advanced data visualization options.

- Excel offers powerful spreadsheet functions and formulas for fast calculations.

- Google Data Studio allows collaborative report building, which is great for team environments.

Use methods like sentiment analysis with software such as MonkeyLearn, which starts at $299/month, to understand audience feelings and improve the feedback you receive.

Implementing Feedback for Improvement

Acting on feedback is central to iterative development, helping AI programs get better as time goes on.

Iterative Development Process

An iterative development process involves cycles where feedback is gathered, leading to continuous improvements in AI systems and their outcomes.

When building an AI model, early versions are thoroughly tested. For example, after the initial launch, developers might collect user feedback on how well the system understands language and its relevance to the situation.

This feedback is then analyzed using tools like Jupyter Notebook for data visualization, allowing teams to pinpoint specific weaknesses. With the information learned, changes are made to refine the algorithms.

Each version is built on user feedback and adds new machine learning techniques to keep the model aligned with user needs.

Case Studies of Successful Feedback Integration

Case studies reveal that companies like Amazon have improved their AI systems by integrating user feedback, resulting in a 15% increase in customer satisfaction.

For instance, Amazon implemented a feature allowing users to provide feedback on AI recommendations. The data collected improved their algorithms, so the recommended products better fit what users liked.

Netflix looks at how viewers rate shows and what they watch to suggest content that matches their preferences.

This continual refinement keeps users interested and helps them stay longer. It shows how using customer feedback can create real business improvements.

Challenges in Feedback Implementation

While applying feedback provides many advantages, issues like data privacy worries and scaling problems need to be resolved.

Data Privacy Concerns

Data privacy issues can make it difficult to gather useful feedback, leading organizations to follow stricter ethical rules in AI use.

To handle these challenges, organizations should use best practices like removing any personally identifiable information (PII) from data before analyzing it.

Obtaining explicit consent from users before collecting feedback is essential under regulations like GDPR. Use privacy-friendly tools, such as Typeform and SurveyMonkey, which provide built-in compliance features.

Regularly audit your data collection processes to identify and mitigate potential risks. Working with a data privacy lawyer can improve your compliance work, helping you build trust with users and get more honest feedback.
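Removing PII before analysis, as recommended above, can start with simple pattern-based redaction. The sketch below masks email addresses and phone-like numbers with regular expressions. The patterns and placeholder tokens are assumptions for illustration and will miss many real-world formats (names, addresses, national ID numbers), so this is a first pass rather than a compliance solution.

```python
import re

# Simplified patterns: they catch common formats, not every valid one.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


comment = "Contact me at jane.doe@example.com or +1 (555) 123-4567."
print(redact_pii(comment))
```

Redacting at the point of collection, before feedback is stored or shared with analysts, keeps the raw identifiers out of downstream tools entirely.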

Scalability Issues

Problems with scalability can reduce the usefulness of feedback cycles, stopping businesses from using user feedback to make better decisions.

To help feedback systems scale, think about using feedback tiers. Start by categorizing feedback into urgent, important, and general comments.

Use tools like Trello or Asana to prioritize and manage responses effectively, and tools like MonkeyLearn for automatic sentiment analysis to make managing feedback easier.

Update your feedback methods every three months to match what users want. This organized method improves response times and encourages ongoing progress within the organization.
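The three-tier categorization described above can be prototyped with keyword rules before investing in a dedicated tool. In this sketch the keyword lists, function names, and sample comments are all hypothetical, and keyword matching is a deliberately simple stand-in for the automatic sentiment analysis a service like MonkeyLearn would provide.

```python
# Hypothetical keyword lists; a real deployment would tune these to its product.
URGENT_TERMS = ("crash", "data loss", "security", "cannot log in")
IMPORTANT_TERMS = ("wrong answer", "slow", "confusing", "bug")


def triage(comment):
    """Assign a comment to the 'urgent', 'important', or 'general' tier."""
    text = comment.lower()
    if any(term in text for term in URGENT_TERMS):
        return "urgent"
    if any(term in text for term in IMPORTANT_TERMS):
        return "important"
    return "general"


def triage_all(comments):
    """Group comments by tier, preserving their original order."""
    tiers = {"urgent": [], "important": [], "general": []}
    for comment in comments:
        tiers[triage(comment)].append(comment)
    return tiers


comments = [
    "The app crashes when I upload a file",
    "Answers feel slow today",
    "Love the new theme",
]
for tier, items in triage_all(comments).items():
    print(tier, items)
```

Checking urgent terms first means a comment that mentions both a crash and slowness lands in the higher tier, which matches how a support team would want to prioritize it.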

Future Directions and Innovations

AI feedback systems will get better with self-improvement tools and new technologies that upgrade their functions.

AI Self-Improvement Mechanisms

AI systems use feedback from users to improve how well they perform on their own, showing a big advance in what AI can do.

Frameworks like TensorFlow can be used to build feedback pipelines that retrain machine-learning models on data from user interactions.

Tools like Optimizely allow developers to test different versions of features, enabling quick updates based on observed results.

Systems that handle and produce human language, like those built on OpenAI’s API, can give clearer responses over time when developers use feedback to refine prompts or retrain models.

These systems show how using user feedback can make processes more efficient and increase user happiness and involvement.

Emerging Technologies in Feedback Systems

Emerging technologies such as real-time monitoring systems are revolutionizing the way feedback is collected and analyzed in AI applications.

These systems use different methods, like sentiment analysis tools such as MonkeyLearn and text analytics platforms like Lexalytics, allowing businesses to measure user responses quickly.

For example, adding real-time feedback systems helps teams quickly make decisions based on data, greatly improving user experience. Platforms like Qualtrics make it easy to gather and examine user feedback, turning it into practical information.

By using these technologies, companies can adapt their AI models to improve interactions, creating a more responsive and engaging experience.

Frequently Asked Questions

What is the importance of feedback for AI systems?

Feedback for AI systems is important because it helps improve how well the system works. It helps the system learn from previous errors and make needed changes to give more accurate results later on.

How does feedback benefit AI systems?

Feedback helps AI systems learn and adjust to new situations and information. It helps find mistakes and biases, which improves decision-making and problem-solving.

What are some techniques for improving feedback in AI systems?

Some techniques for improving feedback in AI systems include regular evaluation by experts, incorporating human feedback, and implementing advanced algorithms that can effectively analyze and process feedback data.

Can feedback be used to identify biases in AI systems?

Yes, feedback can help identify biases in AI systems. By analyzing feedback data, systems can detect patterns that may indicate biases and take necessary steps to mitigate them.

Why is it essential to continuously provide feedback for AI systems?

Continuous feedback is necessary for AI systems as it allows for continuous improvement and helps prevent the system from getting stuck in a rut. It keeps the system making accurate and effective decisions.

How can feedback improve how users interact with AI systems?

Feedback from users can be used to improve the overall user experience with AI systems. By observing how users use the system and what they like, AI systems can be made to offer a more customized and easy-to-use experience.