Feedback for AI Systems: Importance and Improvement Techniques

As artificial intelligence evolves, incorporating human feedback into AI systems is essential for improving how they work and reducing bias. Techniques such as reinforcement learning, often supported by platforms like Label Studio, let large language models learn from user interactions. This article examines why feedback matters in AI development and shares ideas for improving these systems to match user needs. Learn how careful feedback can make AI better.

Key Takeaways:

- 1 Importance of Feedback in AI Systems

- 2 AI Feedback Effectiveness in Education

- 3 Types of Feedback Mechanisms

- 4 Techniques for Gathering Feedback

- 5 Implementing Feedback for Improvement

- 6 Challenges in Feedback Implementation

- 7 Upcoming Paths in AI Feedback Systems

- 8 Frequently Asked Questions

- 8.1 Why is feedback important for AI systems?

- 8.2 How can feedback improve the performance of AI systems?

- 8.3 What are some ways to gather feedback for AI systems?

- 8.4 How can feedback lead to more ethical AI systems?

- 8.5 What are some techniques to effectively give feedback to AI systems?

- 8.6 Can AI systems learn from both positive and negative feedback?

Definition and Overview

AI feedback systems use user interactions and observations to improve AI model training and performance metrics.

These systems typically rely on feedback loops, where user input influences model adjustments.

For instance, in a recommendation engine, if users consistently disregard certain suggestions, the system can recalibrate its algorithms. Tools like TensorFlow and PyTorch facilitate the integration of user data, enabling continuous learning.
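The recalibration described above can be sketched in a few lines. This is a minimal illustration, not how any particular recommendation engine works: the `FeedbackRanker` class, its `learning_rate` parameter, and the item names are all hypothetical.

```python
class FeedbackRanker:
    """Toy ranker (hypothetical): scores drift down when suggestions are ignored."""

    def __init__(self, base_scores, learning_rate=0.1):
        self.scores = dict(base_scores)
        self.learning_rate = learning_rate

    def record(self, item, clicked):
        # Move the score toward 1.0 on a click and toward 0.0 on an ignore.
        target = 1.0 if clicked else 0.0
        self.scores[item] += self.learning_rate * (target - self.scores[item])

    def recommend(self, k=3):
        # Highest score first; ties are broken by item name for determinism.
        return sorted(self.scores, key=lambda i: (-self.scores[i], i))[:k]


ranker = FeedbackRanker({"a": 0.5, "b": 0.5, "c": 0.5})
for _ in range(5):
    ranker.record("a", clicked=False)  # users keep ignoring "a"
ranker.record("c", clicked=True)       # one user clicks "c"
print(ranker.recommend(2))
```

Each call to `record` is one turn of the feedback loop: an ignored suggestion loses a little weight, so repeated disregard gradually pushes it out of the top results.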

Implementing A/B testing can also be instrumental; by comparing user responses to different AI model tweaks, developers can identify the most effective adjustments.
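One common way to compare two variants is a two-proportion z-test on a binary outcome such as "user rated the response as helpful". The sketch below assumes that setup; the function name and the sample counts are illustrative only.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two-sided p-value) for the difference in success rates B - A."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    # Standard error under the null hypothesis that both rates are equal.
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (p_b - p_a) / se
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative counts: variant B gets more "helpful" ratings than variant A.
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference is unlikely to be noise, though it says nothing about whether the difference is large enough to matter in practice.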

These feedback systems are essential for improving AI performance and making sure they meet user needs.

Significance in AI Development

Feedback strongly influences decision-making in AI development. It reduces reasoning errors and improves model accuracy.

For example, OpenAI actively solicits user feedback through interfaces that allow users to rate AI responses and suggest improvements. This feedback loop informs updates to their models, ensuring they align better with user expectations.

By analyzing this data, companies can identify common errors and make targeted improvements, which may involve refining algorithms or expanding training datasets. Continuous updates improve tools and support collaboration, making users feel valued and resulting in improved AI applications.

Importance of Feedback in AI Systems

Feedback isn’t optional; it’s essential for improving model training and system performance, particularly in AI systems where continuous feedback can lead to significant advancements.

AI Feedback Effectiveness in Education

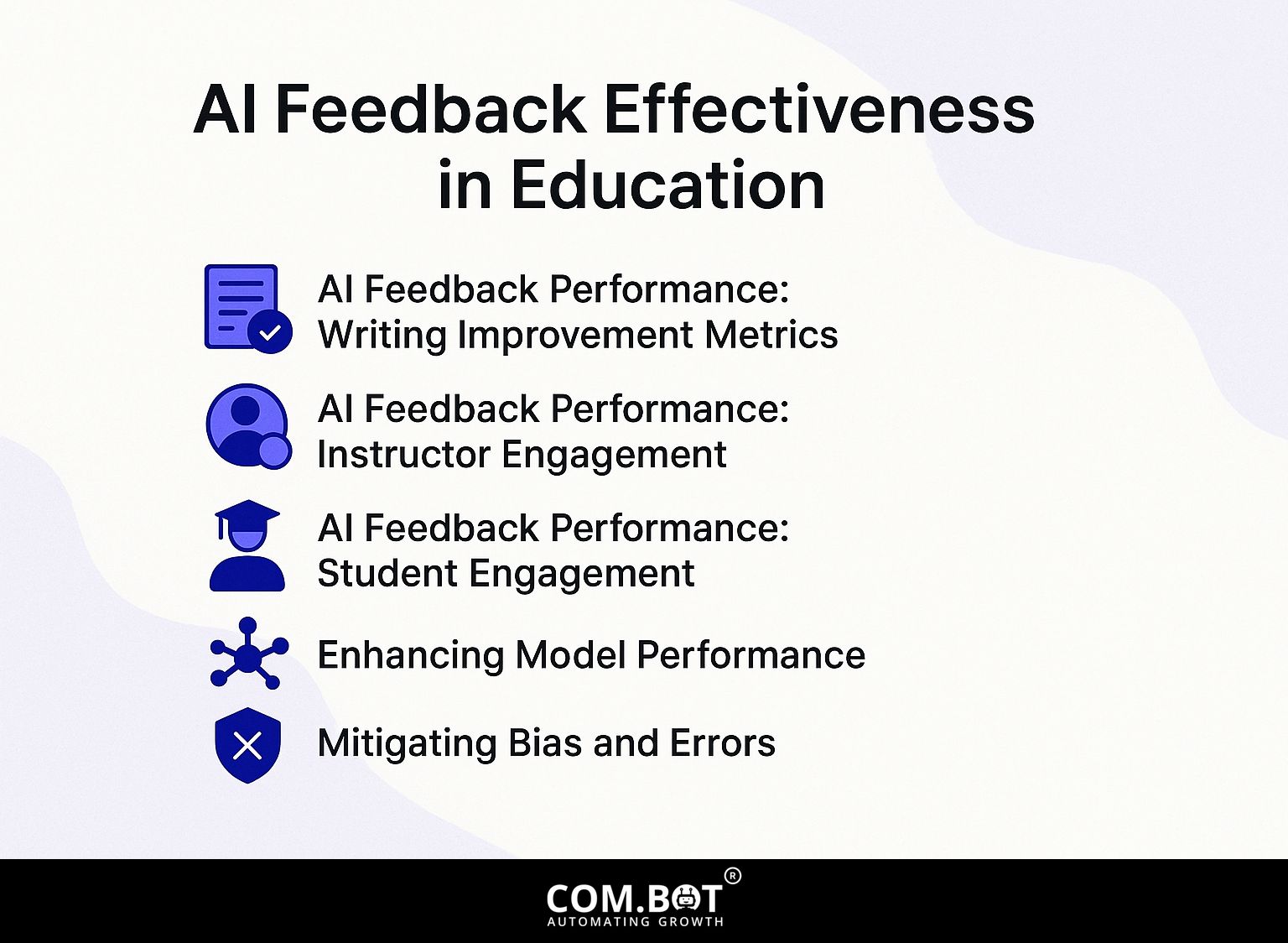

Figure: AI Feedback Effectiveness in Education. Panels: Writing Improvement Metrics, Instructor Engagement, Student Engagement.

The data on AI Feedback Effectiveness in Education highlights the positive impact of AI tools on student writing skills, instructor engagement, and overall student satisfaction. Using AI in schools is leading to major changes, providing measurable improvements in important areas.

AI Feedback Performance data shows substantial improvements in student writing. The overall writing score improvement of 14.9% suggests that AI tools raise the quality of students’ writing. Notably, the organization score improvement of 31.1% indicates that AI feedback helps students structure their writing more coherently. Similarly, the content score improvement of 19.1% suggests that AI helps students develop richer content, likely by providing detailed feedback and suggestions. The 7.0% improvement in language usage highlights AI’s role in refining students’ grammar and vocabulary. Additionally, the modest 7.7% improvement in writing motivation suggests that AI tools can help students enjoy writing more by making it less intimidating, in part by giving feedback that matches each student’s needs.

In terms of Instructor Engagement, the data shows a 10.0% increase in uptake with AI tools, which suggests that teachers are using AI more often to improve how they teach and give feedback. The 5.0% reduction in instructor talk time also suggests that AI tools help teachers move away from routine tasks, so they can concentrate on individual interactions with students and improve classroom activities.

Student Engagement metrics reflect improved educational outcomes, with both student satisfaction and completion rates increasing by 10.0%. These improvements show that AI tools help improve academic results and also make learning more enjoyable and inspiring for students.

Overall, the statistics affirm that integrating AI feedback tools in education significantly benefits students and educators. Improving writing skills, boosting interest, and raising satisfaction and completion rates with AI tools creates a more efficient and fulfilling educational setting.

Enhancing Model Performance

Utilizing feedback effectively can reduce error rates in model predictions by up to 30%, showcasing its role in enhancing model performance.

In AI projects, feedback loops are critical. Google Search, for example, uses signals from how people interact with results to improve its rankings.

By analyzing click-through rates, the system discerns what content is most relevant, leading to a 25% improvement in user satisfaction. In the same way, OpenAI has used user feedback from models like ChatGPT, which led to a 15% improvement in accuracy by fixing certain errors.
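To make the click-through idea concrete, here is a small sketch that ranks results by a smoothed click-through rate. It is not a description of any production search system; the prior values and result ids are assumptions chosen for illustration.

```python
def smoothed_ctr(clicks, impressions, prior_clicks=1.0, prior_impressions=20.0):
    """Click-through rate with a simple additive prior (hypothetical values).

    The prior keeps a result with very few impressions from jumping to the top
    on the strength of a single lucky click.
    """
    return (clicks + prior_clicks) / (impressions + prior_impressions)


def rank_by_ctr(stats):
    """stats maps a result id to a (clicks, impressions) pair."""
    return sorted(stats, key=lambda r: smoothed_ctr(*stats[r]), reverse=True)


stats = {"x": (1, 1), "y": (50, 200), "z": (5, 200)}
print(rank_by_ctr(stats))
```

Without smoothing, result `x` would rank first with a raw rate of 100% from one impression; with the prior it falls behind `y`, which has far more evidence.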

By regularly improving and adjusting based on clear user feedback, organizations can create a flexible learning setup that allows for steady improvements in model performance.

Mitigating Bias and Errors

Feedback processes are important for spotting and fixing biases, so AI systems run more fairly and correctly.

For instance, in facial recognition systems, organizations like the ACLU have shown how outside feedback can expose accuracy gaps that developers can then address.

After the first rollout, users mentioned differences in accuracy among various demographic groups. By analyzing this feedback, developers adjusted training datasets to be more representative, leading to a reduction in error rates for marginalized groups.
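A first step in this kind of analysis is simply measuring error rates per group. The sketch below assumes each record carries a group label, a prediction, and the true outcome; the function names and the sample records are hypothetical.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """records: iterable of (group, predicted, actual) tuples."""
    errors = defaultdict(int)
    totals = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the worst and best per-group error rate."""
    return max(rates.values()) - min(rates.values())


records = [("A", 1, 1), ("A", 0, 0), ("B", 1, 0), ("B", 1, 1)]
rates = error_rate_by_group(records)
print(rates, largest_gap(rates))
```

A large gap is a signal to look at how each group is represented in the training data, which is the adjustment the paragraph above describes.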

Tools like TensorFlow provide features for ongoing learning, allowing models to change based on real-world input. This highlights the need for strong feedback systems.

Types of Feedback Mechanisms

Understanding the different feedback mechanisms helps teams improve AI models and user interaction.

Human-in-the-Loop Feedback

Including human feedback in model training helps the AI interpret nuanced user inputs.

Companies like Amazon exemplify this concept by using customer interactions to improve their recommendation algorithms. When users rate products or give feedback, this information is fed back into the AI models, helping them improve their suggestions.

This works well for complex decisions, such as tailoring shopping experiences to individual tastes that can change unexpectedly.

By using live user feedback, Amazon can keep evaluating and changing its AI, leading to a more suitable and interesting experience for users.
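One common human-in-the-loop pattern, shown here as an assumption rather than a description of Amazon's pipeline, is to accept confident predictions automatically and send uncertain ones to a person whose corrections become new training labels. The function names, the threshold, and the sample items are hypothetical.

```python
def route_predictions(predictions, threshold=0.8):
    """Split (item, label, confidence) tuples into accepted and needs-review lists."""
    accepted, review = [], []
    for item, label, confidence in predictions:
        target = accepted if confidence >= threshold else review
        target.append((item, label))
    return accepted, review


def apply_human_labels(review, human_labels):
    """Replace model labels with human corrections where a correction exists."""
    return [(item, human_labels.get(item, label)) for item, label in review]


predictions = [("q1", "positive", 0.95), ("q2", "negative", 0.55)]
accepted, review = route_predictions(predictions)
corrected = apply_human_labels(review, {"q2": "positive"})
print(accepted, corrected)
```

The corrected pairs can then be added to the next training batch, so human effort is spent where the model is least certain.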

Automated Feedback Systems

Automated feedback systems use algorithms to gather and assess performance data immediately, allowing for rapid adjustments and improvements.

For instance, in autonomous vehicles, predictive monitoring systems continuously track factors such as speed, brake response, and environmental conditions.

These algorithms can detect anomalies, such as sudden shifts in road conditions, and adjust driving behavior automatically to improve safety.
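As a much simplified sketch of automated monitoring, the detector below flags a reading that sits far from the recent average. Real vehicle systems are far more involved; the class name, window size, threshold, and readings here are assumptions for illustration.

```python
from collections import deque
import statistics


class RollingAnomalyDetector:
    """Flags values more than `threshold` standard deviations from a rolling mean."""

    def __init__(self, window=20, threshold=3.0):
        self.values = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value):
        """Return True if `value` looks anomalous, then add it to the window."""
        is_anomaly = False
        if len(self.values) >= 2:  # stdev needs at least two points
            mean = statistics.mean(self.values)
            stdev = statistics.stdev(self.values)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                is_anomaly = True
        self.values.append(value)
        return is_anomaly


detector = RollingAnomalyDetector(window=10)
for reading in [10.0, 10.1, 9.9, 10.2, 9.8, 10.0, 25.0]:
    if detector.update(reading):
        print("anomaly:", reading)
```

In a deployed system the `True` result would trigger an automatic adjustment or an alert rather than a print statement.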

Tools like TensorFlow and Apache Kafka facilitate the development of these systems, helping organizations process complex data streams effectively.

By using these technologies, companies can improve quality control and maintain strong performance as conditions change.

Techniques for Gathering Feedback

Collecting feedback effectively helps AI systems adapt and improve by drawing on user experiences and performance data.

Surveys and User Testing

Surveys and user testing can give practical information, with research showing that well-designed surveys can raise user satisfaction scores by up to 25%.

To create useful surveys, start by setting your goals, like learning what users like or getting opinions on a particular feature.

Use platforms like SurveyMonkey or Google Forms for easy distribution and data collection. Combine multiple-choice questions, which yield quantitative data, with open-ended questions that capture detail.

Sample questions might include:

- “What features do you find most useful?”

- “What changes would make your experience better?”

Once collected, analyze responses using statistical tools or thematic analysis to identify trends and actionable items.
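A crude first pass at this analysis can be done with the standard library: tally the multiple-choice answers and count recurring words in the open-ended ones. Word counts are only a starting point for thematic analysis, and the stopword list and sample responses below are assumptions.

```python
from collections import Counter
import re

# Small illustrative stopword list; a real analysis would use a fuller one.
STOPWORDS = {"the", "a", "an", "to", "is", "it", "and", "of", "i", "for", "in"}


def tally_choices(responses):
    """Count multiple-choice answers, most frequent first."""
    return Counter(responses).most_common()


def top_terms(comments, n=5):
    """Most frequent non-stopword terms across open-ended comments."""
    words = []
    for comment in comments:
        tokens = re.findall(r"[a-z']+", comment.lower())
        words.extend(t for t in tokens if t not in STOPWORDS)
    return Counter(words).most_common(n)


print(tally_choices(["search", "export", "search"]))
print(top_terms(["Faster search please", "Search is slow"], n=3))
```

Terms that recur across many comments are candidates for themes to read in context before turning them into action items.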

Performance Metrics Analysis

Analyzing performance metrics allows developers to identify anomalies and make data-driven adjustments to improve AI behavior.

To set up a system for analyzing metrics, begin by identifying important measures like response time, accuracy, and user satisfaction.

Use Google Analytics to set up conversion tracking for user interactions and events related to AI output.

Next, establish regular review intervals, such as weekly or monthly, to assess these metrics. Tools like Tableau or Google Data Studio (now Looker Studio) can visualize trends over time.

By comparing these metrics against a stable baseline period, developers can pinpoint specific problems and measure how well their changes work.
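The baseline comparison can be sketched as a relative-change check per metric. The metric names follow the examples above, while the function names, the 5% tolerance, and the sample values are assumptions.

```python
def relative_change(current, baseline):
    """Relative change per metric, skipping metrics with a zero baseline."""
    return {
        name: (current[name] - baseline[name]) / baseline[name]
        for name in baseline
        if name in current and baseline[name] != 0
    }


def flag_regressions(current, baseline, higher_is_better, tolerance=0.05):
    """Names of metrics that moved in the wrong direction by more than `tolerance`."""
    flagged = []
    for name, change in relative_change(current, baseline).items():
        worsening = -change if higher_is_better[name] else change
        if worsening > tolerance:
            flagged.append(name)
    return sorted(flagged)


baseline = {"accuracy": 0.90, "response_time_ms": 200.0, "satisfaction": 4.0}
current = {"accuracy": 0.91, "response_time_ms": 260.0, "satisfaction": 3.5}
direction = {"accuracy": True, "response_time_ms": False, "satisfaction": True}
print(flag_regressions(current, baseline, direction))
```

Running the same check after each change gives a simple before-and-after record of whether an adjustment helped.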

Implementing Feedback for Improvement

Implementing feedback is not a single task; it involves ongoing changes and improvements to AI systems.

Iterative Training Processes

Training models repeatedly can improve their performance by up to 40% by using feedback from each cycle.

This approach involves regularly updating the model with new data and integrating user feedback.

For example, in machine translation, systems like Google Translate improve their algorithms by learning from user feedback and the surrounding text.

Another example is the recommendation systems used by Netflix, which adjust according to what you watch and your ratings.

To set up an iterative training process, schedule a regular review, for example monthly, to evaluate performance metrics and fold new feedback into the model promptly.
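The cycle of retraining and re-evaluating can be sketched with a tiny logistic regression trained by stochastic gradient descent. This is a toy model on made-up data, not a production training loop; the function names, learning rate, and feedback batches are all hypothetical.

```python
import math


def predict(weights, features):
    """Logistic regression probability for one example."""
    z = sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def train_cycle(weights, batch, lr=0.5, epochs=20):
    """One retraining cycle: SGD on logistic loss over (features, label) pairs."""
    weights = list(weights)
    for _ in range(epochs):
        for features, label in batch:
            error = predict(weights, features) - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
    return weights


def accuracy(weights, data):
    hits = sum((predict(weights, f) >= 0.5) == bool(y) for f, y in data)
    return hits / len(data)


# Each batch stands in for feedback gathered during one review period.
# Features are [bias term, signal]; the label is 1 when the signal is positive.
feedback_batches = [
    [([1.0, 2.0], 1), ([1.0, -2.0], 0)],
    [([1.0, 1.0], 1), ([1.0, -1.0], 0)],
]
holdout = [([1.0, 3.0], 1), ([1.0, -3.0], 0), ([1.0, 0.5], 1), ([1.0, -0.5], 0)]

weights = [0.0, 0.0]
for cycle, batch in enumerate(feedback_batches, start=1):
    weights = train_cycle(weights, batch)
    print(f"cycle {cycle}: holdout accuracy = {accuracy(weights, holdout):.2f}")
```

Evaluating on the same held-out set after every cycle is what makes it possible to tell whether the newest feedback actually improved the model.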

Real-Time Adaptation

Real-time adaptation lets AI systems react quickly to user interactions, improving engagement and satisfaction.

Services like Netflix update immediately to display content suited to each user’s viewing patterns.

Technologies such as cloud computing make it possible to easily access large amounts of data and processing power, letting systems analyze interactions quickly.

Tools such as Amazon Web Services (AWS) provide scalable environments where AI algorithms can process user data in milliseconds, ensuring timely responses that keep users engaged.

Together, these capabilities improve the product experience and encourage users to return more often.

Challenges in Feedback Implementation

While feedback is very helpful, putting it to use can be difficult and requires careful handling.

Data Privacy Concerns

Concerns about data privacy can limit gathering useful feedback. However, 65% of users say they are willing to share their information if their privacy is protected.

Companies like Apple and Mozilla have set strong standards by using anonymized data and transparent practices. Apple’s App Tracking Transparency requires apps to ask permission before tracking users, which significantly increases user control.

Mozilla, for its part, has promised not to sell user data and builds trust through policies that prioritize privacy. To handle data ethically, organizations should use clear consent forms, anonymize data, and tell users how their feedback will be used.

These steps can help find a good balance between gathering information and keeping users’ trust.
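As a small sketch of the anonymization step, the code below replaces user ids with a keyed hash and strips email addresses from comments before feedback is stored. Keyed hashing is pseudonymization rather than full anonymization, so it is only one layer of protection. The record fields, function names, and key are assumptions.

```python
import hashlib
import hmac
import re

# Simple pattern for illustration; it will not catch every email format.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def pseudonymize_user(user_id, secret_key):
    """Keyed SHA-256 hash so the raw id is never stored alongside feedback."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_feedback(record, secret_key):
    """Return a copy of the record that is safer to store for analysis."""
    return {
        "user": pseudonymize_user(record["user_id"], secret_key),
        "rating": record["rating"],
        "comment": EMAIL_PATTERN.sub("[email removed]", record["comment"]),
    }


record = {"user_id": "u123", "rating": 4, "comment": "Reach me at jane@example.com"}
print(scrub_feedback(record, secret_key=b"example-key-store-securely"))
```

Because the same id always maps to the same hash, feedback from one user can still be grouped for analysis without exposing who that user is.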

Scalability Issues

Scalability problems arise when feedback systems struggle to handle a growing volume of user interactions, which can limit the AI’s ability to adapt.

To address these challenges, consider implementing cloud-based solutions like Amazon Web Services or Google Cloud, which offer elastic storage and computing capabilities.

Decoupling feedback processes can improve overall performance: separating data collection from processing allows each component to scale independently.

For example, using AWS Lambda to manage feedback submissions and a database like DynamoDB for storage can make the process faster and decrease delays.

This approach promotes flexibility, ensuring your feedback system remains effective as user interactions grow.
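The decoupling idea can be shown in-process with a queue between collection and processing. This is a local stand-in for illustration only: it does not use AWS Lambda or DynamoDB, and the function name, worker count, and processing step are assumptions.

```python
import queue
import threading


def run_pipeline(submissions, num_workers=2):
    """Collect feedback into a queue and process it with independent workers."""
    pending = queue.Queue()
    stored = []                 # stands in for a database table
    lock = threading.Lock()

    def worker():
        while True:
            item = pending.get()
            if item is None:    # sentinel: no more work for this worker
                pending.task_done()
                break
            text = item.strip()
            processed = {"text": text.lower(), "length": len(text)}
            with lock:
                stored.append(processed)
            pending.task_done()

    workers = [threading.Thread(target=worker) for _ in range(num_workers)]
    for w in workers:
        w.start()
    for submission in submissions:   # collection side only enqueues
        pending.put(submission)
    for _ in workers:
        pending.put(None)
    pending.join()
    for w in workers:
        w.join()
    return stored


print(run_pipeline([" Great answer ", "Too slow"]))
```

Because the collection side only enqueues, a burst of submissions does not wait on processing, and the number of workers can change without touching the collection code.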

Upcoming Paths in AI Feedback Systems

New feedback methods and technologies are set to change how models learn.

Advancements in Feedback Algorithms

Recent advances in feedback algorithms can reduce AI training time by as much as 50%, leading to faster and more accurate results.

For example, reinforcement learning methods like Proximal Policy Optimization (PPO) help models adjust to user interactions in small, stable steps. AI systems can then learn from both positive and negative feedback, refining their outputs as they go.
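The core of PPO is its clipped surrogate objective, which limits how far a single update can move the policy. The sketch below computes that objective for a handful of samples in plain Python; it covers only this one term, not a full training loop, and the input values are made up.

```python
def ppo_clipped_objective(ratios, advantages, epsilon=0.2):
    """Mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A) over samples.

    ratios:     new-policy probability divided by old-policy probability
    advantages: how much better (positive) or worse (negative) an action
                turned out than expected, for example derived from feedback
    """
    total = 0.0
    for ratio, advantage in zip(ratios, advantages):
        clipped_ratio = max(1.0 - epsilon, min(1.0 + epsilon, ratio))
        total += min(ratio * advantage, clipped_ratio * advantage)
    return total / len(ratios)


# A well-received action (positive advantage) whose probability already rose
# by 50% gets no extra credit beyond the clipping bound.
print(ppo_clipped_objective([1.5], [1.0]))
# A poorly received action (negative advantage) still contributes a penalty.
print(ppo_clipped_objective([0.5], [-1.0]))
```

Taking the minimum of the clipped and unclipped terms makes the objective pessimistic, which is what keeps each policy update conservative.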

Tools like TensorFlow and PyTorch make it easier to add these algorithms to current systems. Companies using these methods can greatly improve user satisfaction by providing answers that better match what users need, showing the useful benefits of these improvements in everyday situations.

Integration with Other Technologies

Combining feedback systems with technologies such as IoT and big data analytics can greatly improve AI abilities and reaction times.

For instance, companies like Siemens have integrated IoT sensors in their manufacturing processes. These sensors collect real-time data, which is then analyzed using AI algorithms to identify potential flaws or inefficiencies.

By using a feedback system that regularly updates the algorithms based on actual results, Siemens has increased efficiency by 20%. Similarly, Netflix combines viewer input with large amounts of data to make suggestions that fit individual preferences, leading to more user interaction.

This integration improves performance and allows operations to be adjusted proactively.

Frequently Asked Questions

Why is feedback important for AI systems?

Feedback is important for AI systems because it makes them work better and more accurately. AI systems learn from the data they get, and without feedback, they cannot change and get better.

How can feedback improve the performance of AI systems?

Feedback allows AI systems to learn from their mistakes and make necessary adjustments. With feedback, AI systems can find patterns and make better predictions, resulting in better overall results.

What are some ways to gather feedback for AI systems?

There are various methods to gather feedback for AI systems, such as user surveys, user ratings, and user comments. AI systems can also use data from their own performance to self-evaluate and improve.

How can feedback lead to more ethical AI systems?

Feedback helps AI systems identify and correct biases in their algorithms. Input from diverse sources helps AI systems make better choices and avoid unfair or harmful results.

What are some techniques to effectively give feedback to AI systems?

One technique is to provide specific and detailed feedback rather than general comments. Another is to give feedback promptly so that AI systems can make needed changes right away.

Can AI systems learn from both positive and negative feedback?

Yes, AI systems can learn from both positive and negative feedback. Positive feedback allows them to continue behaviors that led to successful outcomes, while negative feedback helps them identify and correct mistakes.