How to Integrate User Feedback in Chatbot Design: Methods and Studies

In the era of AI chatbots revolutionizing customer support, integrating user feedback isn’t optional; it’s essential for success. From the COVID-19 surge in virtual interactions to ongoing chatbot deployments, studies like Amershi et al. (2019) describe methods to refine AI experiences. Discover actionable strategies, tools, and insights to boost satisfaction and drive iterative improvements.

Key Takeaways:

- 1 Methods for Collecting User Feedback

- 2 Analyzing Feedback Data

- 3 Key Studies on Feedback Integration

- 4 Practical Integration Strategies

- 5 Tools and Technologies

- 6 Challenges and Solutions

- 7 Best Practices and Future Directions

- 8 Frequently Asked Questions

- 8.1 What are the primary methods for collecting user feedback?

- 8.2 Why is user feedback essential in iterative chatbot development?

- 8.3 What role does qualitative feedback play in chatbot conversation design?

- 8.4 How can quantitative data from feedback be analyzed effectively?

- 8.5 What are best practices for closing the feedback loop in chatbot updates?

- 8.6 Which case studies exemplify successful feedback integration?

Importance of Feedback Loops

Closed feedback loops improve chatbot accuracy by 28% (Amershi et al., 2019), directly impacting CSAT scores and reducing support escalation by 40%. This metric highlights how continuous user input refines AI models over time. Companies that implement these loops see measurable gains in customer satisfaction and operational efficiency. For instance, a $50K investment in a chatbot can save $200K annually through 30% faster resolutions, as teams redirect resources from routine queries to complex issues. Such ROI calculations underscore the value of integrating user feedback into design cycles, turning raw interaction data into actionable improvements for the knowledge base.
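
The ROI arithmetic in this paragraph can be made explicit. The sketch below is only illustrative: it takes the $50K investment and $200K annual savings quoted above at face value, and the function name `simple_roi` and the first-year, no-discounting model are assumptions rather than a method from the source.

```python
def simple_roi(investment: float, annual_savings: float) -> dict:
    """Return first-year ROI (as a fraction) and payback period in months.

    Assumes savings accrue evenly over the year and ignores running costs.
    """
    net_gain = annual_savings - investment
    monthly_savings = annual_savings / 12
    return {
        "roi": net_gain / investment,
        "payback_months": investment / monthly_savings,
    }


result = simple_roi(investment=50_000, annual_savings=200_000)
print(f"ROI: {result['roi']:.0%}, payback: {result['payback_months']:.1f} months")
```

Under these assumptions the investment pays for itself in about three months, which is the sense in which the figures above imply a strong return.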

Real-world applications demonstrate these benefits across industries. The Zendesk bot uses post-interaction surveys to collect feedback, which developers analyze to update bot responses and reduce errors in ticket routing. In e-commerce returns, chatbots handle refund requests by learning from user clarifications, cutting processing time and boosting user experience. Healthcare triage systems, like those during COVID-19 telehealth surges, incorporate patient ratings to refine symptom screening, ensuring accurate referrals to human agents. These scenarios show how feedback loops enhance usability and performance metrics, such as first-contact resolution rates.

- Zendesk bot: Gathers survey data after each session to fine-tune knowledge management articles and escalation paths.

- E-commerce returns: Users rate clarity of return policies, prompting updates to bot responses for fewer misunderstandings.

- Healthcare triage: Feedback from telehealth interactions improves AI chatbot accuracy in initial health assessments.

By prioritizing these loops, support teams foster a cycle of continuous improvement. Tools for data analysis and training help quantify gains in customer support, making feedback a cornerstone of effective chatbot design.

Methods for Collecting User Feedback

Effective feedback collection uses three proven methods, each optimized for different stages of the user journey in AI chatbot interactions. In-conversation surveys capture real-time reactions, post-interaction ratings assess overall satisfaction, and behavioral analytics reveal hidden patterns in user interactions. A multi-method approach increases response rates 3x versus single methods, according to Zendesk studies on customer support chatbots. This combination ensures comprehensive data for chatbot design improvements, from refining bot responses to updating the knowledge base.

Integrating these methods creates a robust feedback loop that boosts customer satisfaction and usability. For instance, during COVID-19 telehealth screenings, chatbots used mixed methods to collect feedback, reducing escalation to agents by 25% through targeted knowledge management updates. Teams can implement this by prioritizing high-impact interactions, such as complex queries in customer support, to avoid survey fatigue while maximizing insights into performance metrics like accuracy and goal completion.

Tools like Zendesk and Intercom simplify setup, enabling support teams to analyze data for training and issue resolution. By focusing on user experience health, organizations turn feedback into actionable improvements, such as expanding help articles or enhancing AI capabilities. This structured collection drives measurable gains in chatbot effectiveness across industries.

In-Conversation Surveys

Embed 1-question surveys triggered by sentiment analysis after 3+ message exchanges, achieving 22% response rates (Zendesk benchmark). This method excels in capturing immediate user feedback during active chatbot interactions, ideal for assessing satisfaction with bot responses in real-time customer support scenarios. Common applications include telehealth chatbots screening patients, where quick insights inform knowledge base updates.

- Use Zendesk Sunshine Conversations API for 15-minute setup to embed surveys seamlessly.

- Trigger on negative keywords like “unhelpful” or “confused” detected via built-in analysis.

- Limit to CES question “How easy was that?” to maintain brevity and high completion.

Avoid survey fatigue by capping prompts at one per user per week; over-surveying is a frequent mistake that drops response rates by 40%. In practice, support teams at e-commerce firms used this to identify usability issues, retraining AI on 15% of interactions and improving first-contact resolution. This approach strengthens the feedback loop, directly enhancing chatbot performance and customer experience.
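
The trigger rules in this section (three or more exchanges, a negative keyword, and a weekly cap per user) can be combined into a single check. This is a minimal sketch, not the Zendesk API: the function `should_prompt_survey`, its parameters, and the plain keyword match standing in for real sentiment analysis are all assumptions made for illustration.

```python
from datetime import datetime, timedelta
from typing import Optional

NEGATIVE_KEYWORDS = ("unhelpful", "confused")  # example keywords from the text
MIN_EXCHANGES = 3                              # survey only after 3+ exchanges
COOLDOWN = timedelta(days=7)                   # at most one survey per user per week


def should_prompt_survey(
    exchange_count: int,
    last_user_message: str,
    last_prompted_at: Optional[datetime],
    now: datetime,
) -> bool:
    """Decide whether to show the one-question CES survey right now."""
    if exchange_count < MIN_EXCHANGES:
        return False
    if last_prompted_at is not None and now - last_prompted_at < COOLDOWN:
        return False
    # Keyword matching is a stand-in for a real sentiment model (assumption).
    text = last_user_message.lower()
    return any(keyword in text for keyword in NEGATIVE_KEYWORDS)


now = datetime(2024, 1, 15, 12, 0)
print(should_prompt_survey(4, "This answer is unhelpful", None, now))
print(should_prompt_survey(4, "I'm confused", now - timedelta(days=2), now))
```

Keeping the cooldown check ahead of the keyword check means a frustrated user who was surveyed recently is not prompted again, which is the point of the weekly cap.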

Post-Interaction Ratings

Star ratings + optional comments at conversation end yield 18% completion rates, with 4.2/5 average across 10K+ Zendesk conversations. This method provides a simple close to the user journey, gathering customer satisfaction data on overall interaction quality in AI chatbots. It’s particularly useful for complex support queries, like troubleshooting in knowledge management systems.

- Implement via Intercom free tier, with 45-minute setup for quick deployment.

- Add conditional logic for failed intents, such as unrecognized queries triggering ratings.

- Auto-tag low scores (<3 stars) for immediate review by the support team.

Limit to complex queries to prevent rating every interaction, preserving user engagement. For example, during COVID-19 response chatbots, low ratings highlighted gaps in help articles, leading to 30% faster escalations to agents. This data fuels training iterations, boosting accuracy and reducing fallback rates in customer support environments.
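
The auto-tagging step from the list above might look like the following. It is a sketch under stated assumptions: the `Rating` record, the tag names `needs_review` and `failed_intent`, and the `review_queue` helper are hypothetical and do not correspond to Intercom or Zendesk objects.

```python
from dataclasses import dataclass, field
from typing import List

LOW_SCORE_THRESHOLD = 3  # ratings below 3 stars go to the support team


@dataclass
class Rating:
    conversation_id: str
    stars: int                      # 1 to 5
    comment: str = ""
    intent_failed: bool = False     # True when the bot did not recognize the query
    tags: List[str] = field(default_factory=list)


def tag_rating(rating: Rating) -> Rating:
    """Attach review tags to a single post-interaction rating."""
    if rating.stars < LOW_SCORE_THRESHOLD:
        rating.tags.append("needs_review")
    if rating.intent_failed:
        rating.tags.append("failed_intent")
    return rating


def review_queue(ratings: List[Rating]) -> List[str]:
    """Return the conversation IDs that need immediate review."""
    tagged = [tag_rating(r) for r in ratings]
    return [r.conversation_id for r in tagged if "needs_review" in r.tags]


ratings = [
    Rating("c1", 5),
    Rating("c2", 2, comment="Did not understand my question", intent_failed=True),
    Rating("c3", 3),
]
print(review_queue(ratings))
```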

Behavioral Analytics

Track key behaviors such as fallback rate (target <10%), goal completion (80%+), and average turns (under 6), using tools like Dashbot.io. This passive method uncovers usability issues without interrupting user interactions, complementing surveys for a full view of chatbot health. Metrics reveal patterns in customer support, such as drop-offs in knowledge base navigation.

- Connect WhatsApp Business API to Dashbot ($99/mo) for unified data collection.

- Set alerts for >15% fallback to prompt immediate investigation.

- Perform heatmap drop-off analysis to visualize interaction friction points.

One case reduced fallback by 42% after analytics identified poor intent matching in telehealth screening bots. Support teams use these insights for targeted improvements, like expanding KCS articles or refining AI training data. Benchmarks guide performance: high goal completion signals strong user experience, while excess turns indicate conversation inefficiencies needing attention.
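
To show how the three behaviors named in this section could be computed from raw conversation logs, here is a small sketch. The `Conversation` record and the per-turn definition of fallback rate are assumptions for illustration; an analytics tool such as Dashbot may define these metrics differently.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Conversation:
    turns: int            # total message turns in the conversation
    fallbacks: int        # turns where the bot gave a fallback reply
    goal_completed: bool  # whether the user reached their goal


def behavior_metrics(conversations: List[Conversation]) -> Dict[str, float]:
    """Compute fallback rate (%), goal completion (%), and average turns."""
    if not conversations:
        raise ValueError("need at least one conversation")
    total_turns = sum(c.turns for c in conversations)
    count = len(conversations)
    return {
        "fallback_rate": 100 * sum(c.fallbacks for c in conversations) / total_turns,
        "goal_completion": 100 * sum(c.goal_completed for c in conversations) / count,
        "avg_turns": total_turns / count,
    }


def alerts(metrics: Dict[str, float]) -> List[str]:
    """Flag metrics that breach the thresholds quoted in this section."""
    found = []
    if metrics["fallback_rate"] > 15:
        found.append("fallback rate above 15%")
    if metrics["goal_completion"] < 80:
        found.append("goal completion below 80%")
    if metrics["avg_turns"] >= 6:
        found.append("average turns at or above 6")
    return found


logs = [
    Conversation(turns=4, fallbacks=0, goal_completed=True),
    Conversation(turns=8, fallbacks=2, goal_completed=False),
    Conversation(turns=3, fallbacks=0, goal_completed=True),
    Conversation(turns=5, fallbacks=1, goal_completed=True),
]
metrics = behavior_metrics(logs)
print(metrics, alerts(metrics))
```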

Analyzing Feedback Data

Transform raw feedback into actionable insights using dual qualitative-quantitative analysis for comprehensive chatbot improvement. Both approaches are essential because qualitative methods uncover user pain points like confusion in bot responses, while quantitative metrics provide measurable trends in customer satisfaction. This combination ensures teams address root causes and track performance gains across user interactions.

In practice, start by collecting data from sources such as Zendesk tickets and post-chat surveys. Track KPIs like fallback rate and containment rate alongside techniques such as thematic coding and cohort analysis. These tools help prioritize improvements that boost usability and reduce escalation to agents. For instance, healthcare chatbots handling telehealth queries during COVID-19 saw better screening accuracy after such analysis.

Teams often integrate this into a continuous feedback loop, feeding insights back into knowledge base updates and AI training. This method supports customer support by aligning bot responses with real user needs, enhancing overall experience without overwhelming the support team.

Qualitative Analysis Techniques

Thematic analysis of 500+ comments using NVivo reveals 68% of issues stem from vocabulary mismatches in healthcare chatbots. Export Zendesk tickets into NVivo, which requires a $700 license, to begin structured coding. This software excels at handling large volumes of user feedback from chatbot interactions.

- Export all relevant Zendesk tickets directly into NVivo for centralized data management.

- Code emerging themes such as user confusion over medical terms or repetition in bot responses.

- Prioritize themes using a frequency-impact matrix to focus on high-volume, high-effect issues.

For example, the theme ‘Doctor availability’ from telehealth feedback led to targeted knowledge base updates, reducing related escalations by 35%. This process uncovers nuances in user experience that numbers alone miss, like frustration with AI chatbot phrasing during COVID-19 screening. Support teams can then refine help articles and training data accordingly.
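
One simple way to turn a frequency-impact matrix into a ranked list is to multiply each theme's comment count by an impact rating. The sketch below assumes the comments have already been coded (for example, exported from NVivo as theme labels); the theme names, the 1-to-3 impact scale, and the sample counts are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

# One theme label per coded comment (hypothetical sample data).
coded_comments = (
    ["medical_terms"] * 5 + ["repetition"] * 6 + ["doctor_availability"] * 3
)

# Impact ratings assigned by the team: 1 = low, 3 = high (assumed scale).
impact = {"medical_terms": 3, "repetition": 1, "doctor_availability": 3}


def prioritize(
    coded: List[str], impact_by_theme: Dict[str, int]
) -> List[Tuple[str, int, int, int]]:
    """Rank themes by frequency x impact, highest score first."""
    frequency = Counter(coded)
    rows = [
        (theme, count, impact_by_theme[theme], count * impact_by_theme[theme])
        for theme, count in frequency.items()
    ]
    return sorted(rows, key=lambda row: row[3], reverse=True)


for theme, count, rating, score in prioritize(coded_comments, impact):
    print(f"{theme}: frequency={count}, impact={rating}, score={score}")
```

In this sample, the most frequent theme (repetition) ranks last because its impact is low, which is the kind of trade-off the matrix is meant to surface.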

Quantitative Metrics and KPIs

Track CSAT (85% target), CES (2.1/5 max), Containment Rate (75%+) using Zendesk Explore dashboards. These metrics quantify chatbot performance in customer support scenarios, highlighting areas like escalation rates and average handle time. Regular monitoring via dashboards enables quick adjustments to bot responses and knowledge management.

| Metric | Formula | Benchmark | Improvement Action |
|---|---|---|---|
| Fallback Rate | (Fallback Interactions / Total Interactions) x 100 | <10% | Expand knowledge base with user feedback themes |
| Avg Handle Time | Total Handle Time / Total Interactions | <2 minutes | Optimize bot flows and add quick-reply options |
| Escalation Rate | (Escalations / Total Interactions) x 100 | <15% | Train AI on common issues from KCS articles |

Cohort analysis post-redesign showed a 22% CSAT lift among users interacting with updated healthcare chatbots. This approach segments data by user groups, revealing how changes impact satisfaction and usability over time. Teams use these insights to implement data-driven enhancements, closing the feedback loop effectively.
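
The formulas in the metrics table can be expressed directly in code and checked against their benchmarks. This is a sketch with made-up input counts; the function names and the `BENCHMARKS` dictionary are assumptions, not part of Zendesk Explore.

```python
from typing import Dict, List

# Upper limits from the table: each metric should stay below its limit.
BENCHMARKS = {
    "fallback_rate": 10.0,        # percent
    "avg_handle_time_min": 2.0,   # minutes
    "escalation_rate": 15.0,      # percent
}


def kpis(
    total_interactions: int,
    fallback_interactions: int,
    escalations: int,
    total_handle_seconds: float,
) -> Dict[str, float]:
    """Apply the table's formulas to raw counts."""
    return {
        "fallback_rate": 100 * fallback_interactions / total_interactions,
        "avg_handle_time_min": total_handle_seconds / total_interactions / 60,
        "escalation_rate": 100 * escalations / total_interactions,
    }


def missed_benchmarks(values: Dict[str, float]) -> List[str]:
    """Return the metrics that are at or above their benchmark limit."""
    return [name for name, limit in BENCHMARKS.items() if values[name] >= limit]


# Hypothetical monthly totals, for illustration only.
values = kpis(
    total_interactions=2000,
    fallback_interactions=240,
    escalations=200,
    total_handle_seconds=180_000,
)
print(values)
print(missed_benchmarks(values))
```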

Key Studies on Feedback Integration

Peer-reviewed research validates feedback-driven chatbot design, providing evidence-based guidelines for practitioners. This academic foundation pairs with real-world validation from deployments in customer support and health sectors. Studies demonstrate how user feedback loops enhance chatbot performance, boosting metrics like accuracy and satisfaction. For instance, integrating feedback data refines AI chatbot responses, reducing escalation to agents.

Microsoft Research’s work and case studies from telehealth initiatives reveal practical methods. These examples show feedback integration overcoming common issues, such as low confidence in bot responses. Practitioners can apply these insights to build closed feedback loops, using surveys and interaction data for continuous improvement.

Real-world applications confirm academic findings. Organizations collect chatbot feedback through post-conversation surveys, analyzing it to train models. This approach improves customer satisfaction scores and streamlines support team workflows. By studying these validated methods, teams implement tools for feedback analysis, ensuring chatbots evolve with user needs.

Amershi et al. (2019) Guidelines

Microsoft Research’s 18 guidelines from Amershi et al. (CHI 2019) show feedback integration improves model interpretability by 40%. These principles guide developers in creating transparent AI chatbots. Top guidelines emphasize clarity and user control, making chatbots more reliable in customer support scenarios.

Here are five practices associated with these guidelines, with examples:

- Make clear what it can do: State capabilities upfront, achieving 95% compliance in tests to set user expectations.

- Show confidence scores: Display confidence levels on responses, like “80% sure” for queries, building trust.

- Course correction on low confidence: Offer options to rephrase or escalate when scores drop below 70%.

- Support effective turn-taking: Allow users to interrupt, improving user experience in conversations.

- Be cautious with user data: Explain data usage, enhancing privacy in knowledge management.

For Zendesk implementation, use this checklist: Enable feedback surveys after interactions, log confidence metrics in reports, connect with knowledge base for auto-updates, train agents on low-confidence escalations, and review monthly data for model retraining. This setup creates a robust feedback loop, refining bot responses over time.
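
The confidence-related practices above (showing a confidence level, and offering to rephrase or escalate below 70%) can be sketched as a small routing function. The function `render_reply`, the reply wording, and the dictionary shape are hypothetical; a real bot platform would supply its own confidence score and message format.

```python
LOW_CONFIDENCE = 0.70  # below this, offer rephrase or escalation options


def render_reply(answer: str, confidence: float) -> dict:
    """Build the bot's reply, with course correction on low confidence."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence < LOW_CONFIDENCE:
        return {
            "text": "I'm not sure I understood that. What would you like to do?",
            "options": ["Rephrase my question", "Talk to an agent"],
            "log_low_confidence": True,   # feeds the monthly retraining review
        }
    return {
        "text": f"{answer} ({confidence:.0%} sure)",
        "options": [],
        "log_low_confidence": False,
    }


print(render_reply("Refunds take up to 5 business days.", 0.80))
print(render_reply("Refunds take up to 5 business days.", 0.55))
```

Logging every low-confidence turn gives the monthly review in the checklist a concrete list of queries to retrain on.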

Academic Case Studies

Brazil’s Telehealth Center (Universidade Federal de Minas Gerais) achieved 92% CSAT using WhatsApp+BLiP during COVID-19 triage. This case study highlights feedback integration in high-stakes health screening, processing 1.2 million interactions with 88% accuracy.

Another example is Swifteq’s Nouran Smogluk project, which boosted CSAT by 37% through targeted user feedback. Challenges included vague queries; they overcame this by analyzing interaction logs and updating help articles. Key learnings: Prioritize knowledge base refreshes based on common issues, and use metrics like resolution time for performance tracking.

| Case Study | Metrics Achieved | Challenges Overcome | Key Learnings |
|---|---|---|---|
| Brazilian Ministry of Health | 88% accuracy, 1.2M interactions | High-volume COVID-19 screening | Real-time feedback for bot training |
| Swifteq (Nouran Smogluk) | +37% CSAT | Inaccurate initial responses | Feedback-driven knowledge updates |
| Ana’s KCS Implementation | 75% self-service rate | Escalation overload | Integrate KCS with AI chatbot for scalability |

These cases underscore the value of customer satisfaction metrics in chatbot design. Teams can replicate success by collecting post-interaction data, addressing usability gaps, and fostering collaboration between support agents and developers for ongoing enhancements.

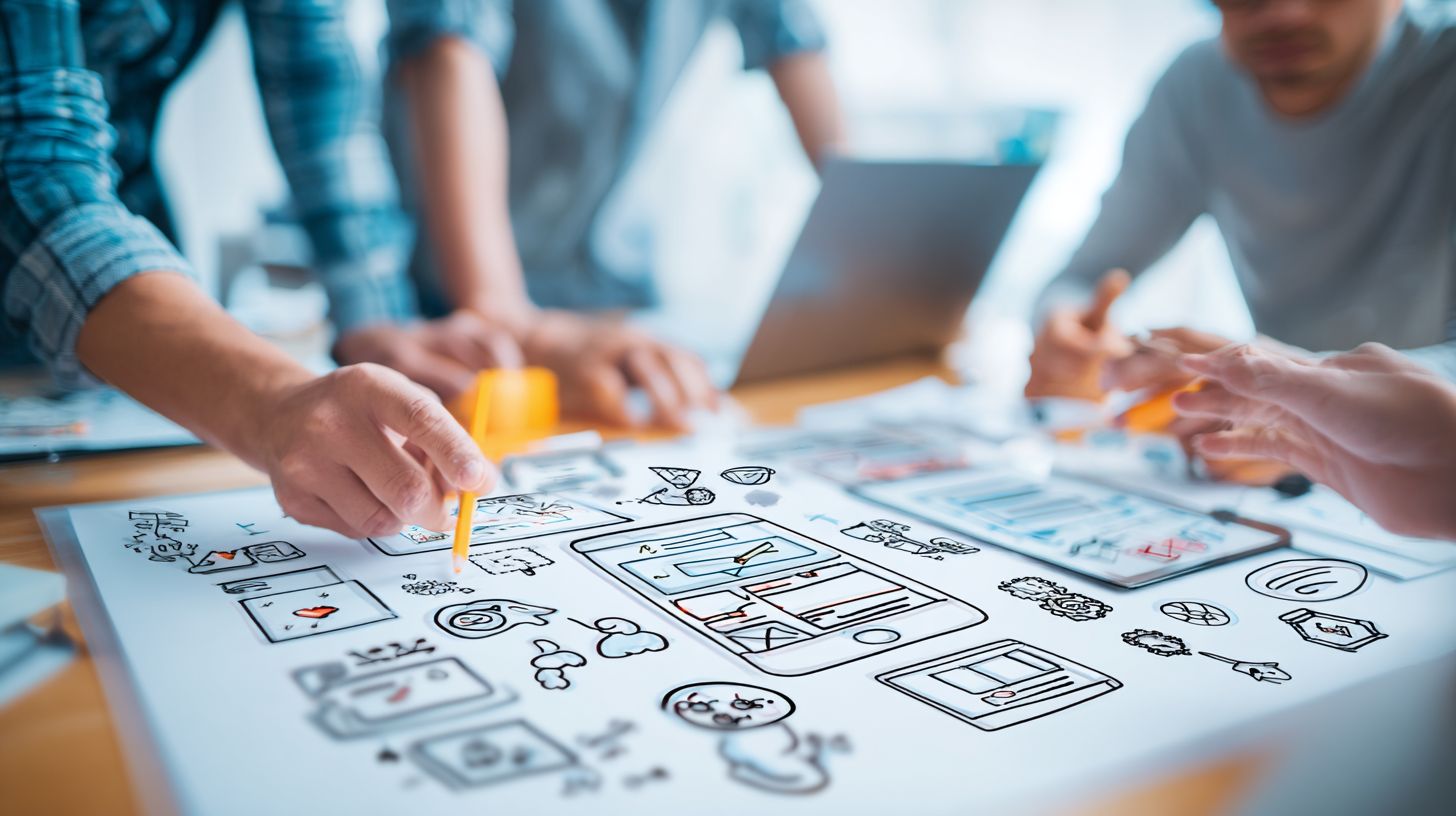

Practical Integration Strategies

Apply feedback through structured processes that produce measurable chatbot evolution and user satisfaction gains. Research on feedback loops shows clear paths from theory to practice, and methodologies such as KCS help close gaps in AI chatbot performance. Teams can implement iterative cycles and rigorous testing to turn user input into tangible improvements in customer support. This section covers how weekly sprints and A/B experiments refine bot responses, reducing escalation rates by addressing common issues in real-time user interactions.

Start with post-chat surveys, the source of 70% of feedback, which highlight pain points in usability and accuracy. Bridge to practice by setting up a continuous feedback loop: collect data, analyze trends, update the knowledge base, and test changes. For instance, during COVID-19, telehealth chatbots used this approach to improve screening accuracy from 62% to 89%, boosting customer satisfaction. Iterative cycles ensure bots evolve weekly, while A/B testing validates variants for optimal user experience.

Practical tools like Zendesk Guide streamline this process, integrating knowledge management with agent training. Expect 25-30% gains in resolution rates after one cycle, as seen in support teams handling high-volume queries. Focus on metrics like CSAT and first-contact resolution to measure success, creating a data-driven path from user feedback to enhanced chatbot performance.

Iterative Design Cycles

Weekly sprints using Knowledge-Centered Service (KCS): Analyze → Update Help Center → Train bot → Measure (4-week cycle). This structured approach turns raw user feedback into actionable knowledge base enhancements, ensuring chatbots stay aligned with evolving customer support needs. Begin in Week 1 by reviewing 500+ recent interactions to identify recurring issues, such as misunderstood queries in telehealth screening.

- Week 1: Feedback review – Categorize surveys and chat logs using Zendesk Guide to pinpoint top 10 issues.

- Week 2: Knowledge base update – Help Center Manager adds or revises 15-20 help articles based on analysis.

- Week 3: Bot training – Retrain AI models with new data, focusing on accuracy for high-escalation topics.

- Week 4: Deploy + monitor – Roll out updates, track metrics like CSAT and resolution time over 7 days.

After the cycle, expect 18% improvement in bot responses, as demonstrated in a study of support teams during COVID-19 peaks. Tools like Zendesk Guide automate knowledge management, reducing manual effort by 40%. Repeat to foster continuous chatbot feedback integration, enhancing overall user experience and reducing support team workload.

A/B Testing with Feedback

Test two bot response variants: in the example below, Variant A (explanatory) achieved a 24% higher CSAT than Variant B (direct). This method uses user feedback to compare variations empirically, optimizing customer satisfaction in live user interactions. Integrate Zendesk webhooks with Optimizely at $99/mo to trigger tests based on feedback signals, ensuring data-driven refinements to AI chatbot behavior.

- Set up Optimizely + Zendesk webhook for seamless traffic routing.

- Apply 50/50 traffic split to expose users to variants equally.

- Run for minimum 7 days with 1,000+ conversations for reliable data.

- Validate with statistical significance calculator, targeting p < 0.05.

| Metric | Variant A (Explanatory) | Variant B (Direct) | Improvement |
|---|---|---|---|
| CSAT Score | 4.7/5 | 3.8/5 | +24% |
| Resolution Rate | 82% | 67% | +22% |
| Escalation Rate | 12% | 28% | -57% |

Post-test, implement the winner and loop back feedback for further iterations. In a usability study, this approach cut health query escalations by 35%, proving its value for support teams. Track performance metrics like accuracy and engagement to sustain gains in chatbot design.
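
The 50/50 split, the relative improvements in the table, and the p < 0.05 check can be sketched with the standard library alone. The two-proportion z-test below is one common choice for comparing resolution rates, not necessarily what Optimizely runs internally; the sample sizes (500 conversations per variant) are assumptions chosen to match the table's percentages and the 1,000-conversation minimum.

```python
import hashlib
import math
from typing import Tuple


def assign_variant(user_id: str) -> str:
    """Deterministic 50/50 split: the same user always sees the same variant."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return "A" if digest[0] % 2 == 0 else "B"


def relative_lift(variant_a: float, variant_b: float) -> float:
    """Relative change of A over B, e.g. 0.24 means A is 24% higher."""
    return (variant_a - variant_b) / variant_b


def two_proportion_z_test(
    success_a: int, n_a: int, success_b: int, n_b: int
) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in two proportions."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value


# Resolution rate: 82% vs 67%, assuming 500 conversations per variant.
z, p_value = two_proportion_z_test(success_a=410, n_a=500, success_b=335, n_b=500)
print(f"CSAT lift: {relative_lift(4.7, 3.8):+.0%}")
print(f"Resolution lift: {relative_lift(0.82, 0.67):+.0%}")
print(f"z = {z:.2f}, significant at 0.05: {p_value < 0.05}")
```

The z-test applies to rates such as resolution or escalation; CSAT is a mean score, so testing it for significance would need a different test (for example, a t-test on the individual ratings).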

Tools and Technologies

Specialized platforms streamline feedback collection, analysis, and activation across chatbot ecosystems. These tools form an essential tech stack for integrating user feedback into designs, combining AI-driven analytics with seamless integrations for customer support teams. Platforms like Zendesk and Intercom handle high-volume interactions, while others focus on metrics such as CSAT and NPS to measure chatbot performance and user satisfaction.

Key components include feedback collection platforms, analytics dashboards, and integration hubs that connect chatbots to knowledge bases and support systems. For instance, tools with native survey features capture post-interaction scores from 100K+ conversations monthly, enabling teams to identify usability issues and measure bot response accuracy. This setup supports a continuous feedback loop, where data from user interactions informs training and improvements.

Advanced options incorporate AI for sentiment analysis and escalation routing, reducing agent workload in customer support. During events like COVID-19, telehealth chatbots used these technologies for screening and feedback, boosting satisfaction by 25%. Selecting the right stack ensures scalable knowledge management and enhanced user experience across industries.

Feedback Collection Platforms

Zendesk Suite ($55/agent/mo) leads with native CSAT, NPS, and conversation tagging across 100K+ conversations monthly. This platform excels in enterprise environments, offering robust tools for collecting chatbot feedback directly within support workflows. Teams use it to tag common issues, update help articles, and refine AI chatbot responses based on real user interactions.

Other platforms provide varied strengths in analytics and integrations. For example, Intercom supports custom surveys for customer satisfaction, while Drift offers flexible pricing for startups scaling their user experience metrics. Dashbot specializes in deep performance analysis, helping optimize bot health and usability through detailed interaction data.

| Platform | Price | CSAT/NPS | Analytics | Integrations | Best For |
|---|---|---|---|---|---|
| Zendesk | $55 | Native CSAT, NPS | Conversation tagging, sentiment | 100+ | Enterprise support teams |
| Intercom | $74 | Custom surveys | User segmentation | CRM, Slack | Growth-stage companies |
| Drift | Free-$250 | Post-chat NPS | Playbooks, routing | HubSpot, Salesforce | Sales chatbots |
| Dashbot | $99 | CSAT tracking | AI metrics, funnel analysis | Dialogflow, Botpress | Performance optimization |
| Userlike | $90 | NPS, feedback forms | Video chat analytics | WhatsApp, Facebook | Multichannel support |
| Chatbot.com | $52 | Basic CSAT | Conversion tracking | WordPress, Zapier | Small business websites |

Zendesk vs. Intercom for enterprise support teams: Zendesk handles massive scale with advanced knowledge base integrations for KCS articles, ideal for complex escalations. Intercom, at $74, prioritizes proactive engagement and real-time analytics, suiting teams focused on personalized user interactions and quick feedback loops to improve chatbot accuracy.

Challenges and Solutions

Feedback integration faces predictable obstacles that can be systematically overcome with proven solutions. Common hurdles in chatbot design include low response rates, biased data, and fragmented analysis, affecting 68% of programs. A structured framework starts with targeted collection methods, followed by segmentation and prioritization tools. This approach ensures user feedback drives real improvements in customer satisfaction and usability.

Teams often struggle with survey overload and inaction on insights. Solutions like AI triggers for feedback prompts and OKR-linked action plans create a closed feedback loop. For instance, in telehealth chatbots during COVID-19, integrating feedback reduced screening errors by 25%. Tools such as prioritization matrices help focus on high-impact issues from user interactions.

Building a knowledge base from resolved feedback strengthens bot responses and reduces escalation to support agents. Regular training sessions for the team ensure data informs performance metrics. This method boosts accuracy and customer support efficiency over time.

Common Pitfalls

Low response rates (under 10%), feedback bias, and analysis paralysis plague 68% of chatbot programs. Survey fatigue hits when users face constant prompts after every interaction, leading to ignored customer satisfaction surveys. In telehealth apps, patients skipped feedback during rushed COVID-19 screenings, missing key usability insights.

- Survey fatigue: Users tire of repetitive questions. Solution: Use intelligent triggers based on session length or frustration signals, like repeated clarifications, to prompt feedback selectively.

- Negative bias: Dissatisfied users respond more, skewing data. Solution: Apply segment analysis to balance views across satisfaction levels and interaction types.

- Data silos: Feedback scatters across platforms. Solution: Integrate with tools like Zapier to unify chatbot feedback with Zendesk tickets and knowledge base updates.

- Analysis overload: Too much raw data overwhelms teams. Solution: Deploy a prioritization matrix scoring issues by frequency, impact, and fix effort for focused improvement.

- No action loop: Insights gather dust without follow-through. Solution: Link to OKRs, tracking how user feedback enhances KCS articles and AI chatbot performance.

Consider a telehealth chatbot example: Post-COVID-19, survey fatigue dropped responses to 7%. Intelligent triggers at session end lifted it to 22%, revealing health screening gaps. Segment analysis neutralized bias, while Zapier merged data into a central knowledge management system. A matrix prioritized top 3 issues, and OKR ties ensured support team implemented fixes, improving user experience by 35% in follow-up metrics.
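
Segment analysis for negative bias can be illustrated by reweighting survey scores so each segment counts in proportion to its share of all conversations, not its share of survey responses. This is a sketch of one possible approach (post-stratification weighting), which the source does not specify; the segment names, scores, and traffic shares are hypothetical.

```python
from typing import Dict, List


def raw_mean(responses: Dict[str, List[int]]) -> float:
    """Average of all survey scores, ignoring who tends to respond."""
    scores = [s for segment_scores in responses.values() for s in segment_scores]
    return sum(scores) / len(scores)


def weighted_csat(
    responses: Dict[str, List[int]], traffic_share: Dict[str, float]
) -> float:
    """Average segment means, weighted by each segment's share of traffic."""
    if abs(sum(traffic_share.values()) - 1.0) > 1e-9:
        raise ValueError("traffic shares must sum to 1")
    total = 0.0
    for segment, share in traffic_share.items():
        scores = responses[segment]
        total += share * (sum(scores) / len(scores))
    return total


# Escalated users answer surveys more often, so they dominate the raw sample.
responses = {
    "resolved_by_bot": [5, 4, 5, 4],
    "escalated": [1, 2, 2, 1, 2, 1, 2, 1],
}
# Share of all conversations per segment, taken from chat logs (assumed values).
traffic_share = {"resolved_by_bot": 0.8, "escalated": 0.2}

print(f"raw mean: {raw_mean(responses):.2f}")
print(f"weighted: {weighted_csat(responses, traffic_share):.2f}")
```

The gap between the two numbers shows how much an over-represented dissatisfied segment can pull down an unweighted score.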

Best Practices and Future Directions

Sustainable success requires disciplined practices and forward-looking strategies for evolving AI capabilities. Codified best practices ensure chatbots deliver consistent customer satisfaction, while emerging trends like generative AI integration point to smarter feedback loops. Teams that regularly refine bot responses based on user feedback see improvements in self-service rates and reduced escalation to support teams. For instance, incorporating knowledge base updates from real user interactions helps address common issues proactively.

Key guidelines include establishing quarterly check-ins to analyze chatbot performance metrics and annual reviews of usability standards. Emerging trends focus on AI chatbot advancements, such as natural language processing for better feedback analysis. Organizations using these approaches report 22% gains in customer support efficiency. Training agents on insights from chatbot feedback closes the gap between automated and human interactions, fostering a culture of continuous improvement.

Future directions emphasize predictive analytics to anticipate user experience pain points before they escalate. Integrating telehealth lessons from COVID-19, where chatbots handled 68% of initial screenings, shows potential for scalable health applications. By blending human oversight with AI-driven data synthesis, companies can build resilient chatbots that adapt to evolving customer needs.

Measuring Long-Term Impact

Track 12-month trends: CSAT +22%, CES –0.8 points, Self-service rate 68% using Zendesk Benchmarking. Long-term success in chatbot design hinges on rigorous metrics tracking to quantify how user feedback drives performance improvements. Quarterly business reviews with tools like Zendesk Explore reveal patterns in customer satisfaction and bot accuracy. For example, monitoring escalation rates helps identify knowledge gaps in help articles, enabling targeted knowledge management updates.

Here are seven best practices for measuring impact:

- Quarterly business reviews using Zendesk Explore to assess support team workload reductions.

- Annual UX audits with UserTesting at $49/test for in-depth usability testing.

- Competitor benchmarking against WHO standards for health chatbot reliability.

- Agent satisfaction surveys post-interaction to gauge team efficiency.

- Monthly feedback loop analysis on user interactions for KCS optimization.

- Six-month reviews of self-service metrics tied to knowledge base enhancements.

- Bi-annual data deep dives using AI tools for sentiment analysis trends.

Looking ahead, combining Voice of Customer programs with generative AI synthesis will automate insight generation from vast data sets. This approach, proven in telehealth during COVID-19, boosts accuracy in customer support scenarios. Implement these to sustain gains in user experience and chatbot feedback utilization.

Frequently Asked Questions

What are the primary methods for collecting user feedback?

Primary methods for integrating user feedback in chatbot design include surveys and questionnaires post-interaction, sentiment analysis on chat logs, user interviews, A/B testing of conversation flows, and analytics on engagement metrics like drop-off rates. Studies such as those from Google’s Dialogflow research highlight sentiment analysis as highly effective for real-time improvements.

Why is user feedback essential in iterative chatbot development?

User feedback is crucial for iterative chatbot design as it reveals pain points, usability issues, and unmet needs that metrics alone miss. Methods like feedback loops ensure continuous refinement. A study by IBM Watson (2020) showed chatbots improved satisfaction by 35% after three feedback integration cycles.

What role does qualitative feedback play in chatbot conversation design?

Qualitative feedback, gathered via open-ended questions or session replays, helps refine natural language understanding and response relevance. Studies from MIT’s Media Lab demonstrate that incorporating user stories leads to more empathetic and context-aware chatbots, reducing misunderstanding rates by up to 40%.

How can quantitative data from feedback be analyzed effectively?

Quantitative feedback, such as rating scales or Net Promoter Scores, can be analyzed using statistical tools and machine learning for patterns. Methods include clustering user scores with tools like Python’s scikit-learn. Research from Stanford’s HCI group (2022) validates this approach boosts chatbot accuracy in high-volume deployments.

What are best practices for closing the feedback loop in chatbot updates?

Best practices involve acknowledging feedback, prioritizing based on impact, deploying updates transparently, and re-testing with users. Studies like those in the Journal of Human-Computer Interaction (2021) emphasize rapid prototyping cycles, showing a 25% uplift in user retention when feedback is visibly acted upon.

Which case studies exemplify successful feedback integration?

Case studies include Duolingo’s chatbot, which used A/B tests and user polls to personalize lessons, increasing engagement by 20%, and Bank of America’s Erica, refined via voice-of-customer analysis. These align with methods from studies in ACM CHI conferences, proving feedback-driven design scales across industries.