Multimodal Chatbots: Features and Experience

You’re probably wondering what makes multimodal chatbots stand out from basic text ones. They handle text, images, voice, and more, and they let users move between these modalities within a single conversation. In this piece, we’ll break down their capabilities and the user experience they deliver.

Core Features

Core features form the foundation of effective multimodal chatbots, enabling robust handling of essential interaction types. These capabilities process text, images, and voice as building blocks for comprehensive customer support. They allow seamless omnichannel experiences across chat, phone, and visual inputs.

Text processing handles natural language queries with context retention. Image recognition analyzes uploads for quick insights. Voice interaction supports real-time conversations with low latency.
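
As a rough illustration of how these building blocks fit together, the sketch below routes each incoming message to a modality-specific handler based on its MIME type. The `Message` type and the three handler functions are hypothetical placeholders; in a real bot each handler would call a language model, an image model, or a speech service.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Message:
    """Hypothetical inbound message: a MIME type plus the raw payload."""
    mime_type: str
    payload: bytes


# Hypothetical handlers; real ones would call an LLM, an image model, or speech services.
def handle_text(msg: Message) -> str:
    return "text:" + msg.payload.decode("utf-8")


def handle_image(msg: Message) -> str:
    return f"image:{len(msg.payload)} bytes"


def handle_audio(msg: Message) -> str:
    return f"audio:{len(msg.payload)} bytes"


# Map the top-level MIME type ("text", "image", "audio") to its handler.
HANDLERS: Dict[str, Callable[[Message], str]] = {
    "text": handle_text,
    "image": handle_image,
    "audio": handle_audio,
}


def route(msg: Message) -> str:
    """Dispatch a message to the handler registered for its modality."""
    major_type = msg.mime_type.split("/", 1)[0]
    handler = HANDLERS.get(major_type)
    if handler is None:
        raise ValueError(f"unsupported modality: {msg.mime_type}")
    return handler(msg)
```

For example, `route(Message("text/plain", b"Where is my order?"))` returns `"text:Where is my order?"`, while an unsupported type such as `video/mp4` raises `ValueError` so the bot can ask the user for a different format.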

These features integrate with services like Amazon Bedrock and its LLMs to generate intelligent responses. They support high-volume ticket management and smooth handoffs to human agents. This setup drives cost-efficiency and customization in AI-driven service.

Text Processing Capabilities

Advanced text processing powers natural conversations through state-of-the-art language models like Claude 3 Sonnet. It includes sentiment analysis to detect customer emotion, support for many languages, and context retention across multi-turn conversations. Intent classification ensures accurate query understanding.

Sentiment analysis detects frustration or satisfaction in messages. This guides agents on escalation needs. Multilingual support handles global customers without translation delays.
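
A simple way to turn sentiment into an escalation decision is a threshold rule. This sketch assumes the sentiment model returns a label plus a confidence score between 0 and 1; the function name, the 0.8 threshold, and the two-message streak rule are illustrative assumptions rather than tuned values.

```python
from typing import List, Tuple

# (label, score) pairs such as ("negative", 0.92); score assumed to be in [0, 1].
Sentiment = Tuple[str, float]


def should_escalate(history: List[Sentiment],
                    threshold: float = 0.8,
                    max_negative_streak: int = 2) -> bool:
    """Hypothetical rule: escalate on one strongly negative message or a negative streak."""
    if not history:
        return False
    label, score = history[-1]
    if label == "negative" and score >= threshold:
        return True
    # Count consecutive negative messages at the end of the conversation.
    streak = 0
    for past_label, _ in reversed(history):
        if past_label != "negative":
            break
        streak += 1
    return streak >= max_negative_streak
```

The streak rule catches customers who stay mildly unhappy over several messages, which a single-message threshold would miss.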

Context retention maintains conversation history for coherent replies. For integration, use this code snippet for sentiment analysis with Amazon Bedrock:

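```python
import json

import boto3

# Bedrock Runtime client; region and credentials come from your AWS configuration
bedrock = boto3.client("bedrock-runtime")

user_text = "My order arrived damaged and nobody has answered my emails."

# Claude 3 models on Bedrock expect the Anthropic Messages request format
body = {
    "anthropic_version": "bedrock-2023-05-31",
    "max_tokens": 100,
    "messages": [{"role": "user", "content": "Analyze sentiment: " + user_text}],
}
response = bedrock.invoke_model(
    modelId="anthropic.claude-3-sonnet-20240229-v1:0",
    body=json.dumps(body),
)
# The response body is a stream: read it, parse the JSON, take the first text block
sentiment = json.loads(response["body"].read())["content"][0]["text"]
```

These features enable no-code setup and low-maintenance operations in Crescendo.ai environments.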
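
Context retention usually means resending recent turns with each model call. A minimal sketch, assuming the history is stored as Messages-style `role`/`content` dictionaries (the format Claude 3 models use on Bedrock) and that capping the number of turns, rather than counting tokens, is enough to bound request size; `build_messages` and the limit of 6 are hypothetical.

```python
from typing import Dict, List

Turn = Dict[str, str]  # {"role": "user" | "assistant", "content": "..."}


def build_messages(history: List[Turn], user_text: str,
                   max_turns: int = 6) -> List[Turn]:
    """Return the most recent turns plus the new user message.

    Keeps at most `max_turns` prior turns and drops leading assistant turns,
    because the Messages format expects the conversation to start with a user turn.
    """
    # Guard max_turns == 0: history[-0:] would return the whole list.
    recent = history[-max_turns:] if max_turns > 0 else []
    while recent and recent[0]["role"] != "user":
        recent = recent[1:]
    return recent + [{"role": "user", "content": user_text}]
```

The returned list can be passed as the `messages` field of a Claude 3 request body.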

Image Recognition and Generation

Image capabilities transform customer service by analyzing uploaded photos and generating visual responses. For example, a customer uploads a photo of a Jeep Grand Cherokee SRT8, and the bot identifies the model, extracts the VIN, and checks service history. This speeds up support queries.
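
A VIN read from a photo can contain recognition errors, so it is worth validating before looking up service history. The sketch below applies the North American check-digit rule (position 9); that rule is not mandatory in every market, so a failed check is better treated as "ask the customer to confirm" than as a hard rejection. The function name is hypothetical.

```python
# Transliteration values for letters; I, O and Q are not allowed in VINs.
_LETTER_VALUES = {
    "A": 1, "B": 2, "C": 3, "D": 4, "E": 5, "F": 6, "G": 7, "H": 8,
    "J": 1, "K": 2, "L": 3, "M": 4, "N": 5, "P": 7, "R": 9,
    "S": 2, "T": 3, "U": 4, "V": 5, "W": 6, "X": 7, "Y": 8, "Z": 9,
}
# Positional weights; position 9 (the check digit itself) has weight 0.
_WEIGHTS = [8, 7, 6, 5, 4, 3, 2, 10, 0, 9, 8, 7, 6, 5, 4, 3, 2]


def vin_check_digit_ok(vin: str) -> bool:
    """Hypothetical helper: validate the position-9 check digit of a 17-character VIN."""
    vin = vin.strip().upper()
    if len(vin) != 17:
        return False
    total = 0
    for char, weight in zip(vin, _WEIGHTS):
        if char in "0123456789":
            value = int(char)
        elif char in _LETTER_VALUES:
            value = _LETTER_VALUES[char]
        else:
            return False  # I, O, Q or a non-alphanumeric character
        total += value * weight
    remainder = total % 11
    expected = "X" if remainder == 10 else str(remainder)
    return vin[8] == expected
```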

Amazon Titan Multimodal Embeddings converts images into vector embeddings for processing:

- Store the image embedding in a vector database such as OpenSearch.

- Match against car listings for details.

- Generate recommendations via RAG integration.
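
The first two steps might look like the request bodies below. This is a sketch under assumptions: it targets the `amazon.titan-embed-image-v1` model (Titan Multimodal Embeddings G1), which takes a base64-encoded image as `inputImage`, and an OpenSearch index with a `knn_vector` field named `image_vector` (a hypothetical name). The bodies are built without calling AWS so they can be inspected offline.

```python
import base64
import json
from typing import List

TITAN_MM_MODEL_ID = "amazon.titan-embed-image-v1"


def titan_image_embedding_body(image_bytes: bytes, dimensions: int = 1024) -> str:
    """JSON body for a Titan Multimodal Embeddings request with an image-only input."""
    if dimensions not in (256, 384, 1024):
        raise ValueError("Titan Multimodal Embeddings supports 256, 384 or 1024 dimensions")
    return json.dumps({
        "inputImage": base64.b64encode(image_bytes).decode("ascii"),
        "embeddingConfig": {"outputEmbeddingLength": dimensions},
    })


def knn_listing_query(vector: List[float], k: int = 5,
                      field: str = "image_vector") -> dict:
    """OpenSearch k-NN query returning the k closest listings.

    `field` is a hypothetical knn_vector field name in the listings index.
    """
    return {
        "size": k,
        "query": {"knn": {field: {"vector": vector, "k": k}}},
    }
```

In practice you would send the first body with `bedrock.invoke_model(modelId=TITAN_MM_MODEL_ID, body=body)`, read the `embedding` field from the parsed response, and pass that vector to `knn_listing_query` as the search request body.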

In car listings workflows, bots assess damage on a Volkswagen Beetle, match colors, and suggest accessories. Use Lambda and API Gateway for secure handling. This supports white-label customization and analytics.

Generation features create custom visuals, like repair diagrams, enhancing agent assist.

Voice and Audio Interaction

Voice interaction creates natural, phone-like experiences with real-time speech recognition and synthesis. It follows a step-by-step workflow built on Amazon services. The pipeline copes with accents and background noise, and it can detect emotion from tone of voice.

The workflow includes:

- Amazon Transcribe converts speech-to-text with low latency.

- LLM like Claude processes the text via Bedrock.

- Amazon Polly synthesizes natural-sounding replies.
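
The three steps above can be expressed as a pipeline of pluggable stages. In this sketch the stages are plain callables so the flow can be tested offline; in a real deployment they would wrap Amazon Transcribe (streaming), a Bedrock `invoke_model` call, and Amazon Polly. The `VoicePipeline` class and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Hypothetical wrapper around the speech-to-text, LLM and text-to-speech stages."""
    transcribe: Callable[[bytes], str]   # speech -> text (e.g. Amazon Transcribe)
    respond: Callable[[str], str]        # text -> reply (e.g. Claude via Bedrock)
    synthesize: Callable[[str], bytes]   # reply -> audio (e.g. Amazon Polly)

    def handle_turn(self, audio_in: bytes) -> bytes:
        """Run one caller turn through all three stages."""
        transcript = self.transcribe(audio_in)
        if not transcript.strip():
            # Nothing intelligible (e.g. only noise): ask the caller to repeat.
            return self.synthesize("Sorry, I didn't catch that. Could you repeat it?")
        reply = self.respond(transcript)
        return self.synthesize(reply)
```

For the last stage, a boto3 Polly client's `synthesize_speech(Text=reply, OutputFormat="mp3", VoiceId="Joanna")` returns the audio in its `AudioStream` field. Keeping the stages injectable also makes it easy to swap models without touching the call flow.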

For high-volume ticket management, voice bots triage calls, detect urgency from tone, and route to agents. Noise cancellation ensures clarity in busy environments. Emotion detection flags upset callers for priority.
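
Triage of this kind can be modelled as a priority queue keyed on urgency. The sketch assumes upstream tone analysis yields a score from 0 (calm) to 1 (distressed); the `CallQueue` class is hypothetical, and ties are broken by arrival order so callers with equal urgency are served first-come, first-served.

```python
import heapq
import itertools
from typing import List, Optional, Tuple


class CallQueue:
    """Hypothetical queue that hands the most urgent waiting call to the next free agent."""

    def __init__(self) -> None:
        self._heap: List[Tuple[float, int, str]] = []
        self._counter = itertools.count()  # arrival order, used as a tie-breaker

    def add(self, call_id: str, urgency: float) -> None:
        # heapq is a min-heap, so negate urgency to pop the highest score first.
        heapq.heappush(self._heap, (-urgency, next(self._counter), call_id))

    def next_call(self) -> Optional[str]:
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]
```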

Integration with SageMaker and CloudFormation enables scalable, secure deployments. This multimodal approach boosts omnichannel support with minimal maintenance.

Advanced Multimodal Features

Advanced features extend beyond basics, handling complex inputs like video and documents for enterprise-grade applications. Premium solutions like Crescendo.ai stand out with sophisticated capabilities that support high-volume ticket management and omnichannel interactions. These tools integrate Amazon Bedrock and LLMs for seamless processing.

Enterprises benefit from multimodal chatbots that analyze diverse data types, enabling precise customer support. Features like sentiment analysis and multilingual handoffs enhance user experience. No-code setup simplifies deployment for car listings or insurance queries.

RAG architecture with OpenSearch Service ensures accurate responses from vector databases. Security and analytics track interactions for low-maintenance operations. White-label customization fits brand needs in agent assist scenarios.

Cost-efficiency comes from Lambda and CloudFormation integrations, reducing manual oversight. These capabilities transform basic chatbots into powerful AI agents for diagnostics and recommendations.

Video Analysis and Response

Video analysis enables chatbots to process customer-submitted footage for detailed diagnostics and recommendations. The process starts with frame extraction, followed by Amazon Rekognition analysis for object detection and scene understanding. An LLM then generates summaries and actionable insights.
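
Frame extraction usually samples frames rather than analyzing every one. The helper below computes which frame indices to send for analysis, assuming a fixed sampling interval (one frame per second by default) and a cap on frames per clip to bound cost; both defaults and the function name are illustrative. Decoding the frames themselves (for example with OpenCV or ffmpeg) is left out.

```python
from typing import List


def sample_frame_indices(total_frames: int, fps: float,
                         every_seconds: float = 1.0,
                         max_frames: int = 30) -> List[int]:
    """Indices of frames to analyze: one every `every_seconds`, thinned to `max_frames`."""
    if total_frames <= 0 or fps <= 0 or every_seconds <= 0 or max_frames <= 0:
        return []
    step = max(1, round(fps * every_seconds))
    indices = list(range(0, total_frames, step))
    if len(indices) > max_frames:
        # Keep an evenly spaced subset so the whole clip is still covered.
        stride = len(indices) / max_frames
        indices = [indices[int(i * stride)] for i in range(max_frames)]
    return indices
```

For a 3-second clip at 30 fps, `sample_frame_indices(90, 30.0)` selects frames 0, 30 and 60.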

Consider a use case with a Tesla Model 3 dashboard warning. The chatbot identifies the error code from video frames, diagnoses the issue, and suggests fixes like software updates or service visits. This supports quick resolutions in customer service.
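
Because a single frame can be blurry or mid-transition, one approach is to trust only labels that recur across frames. The input below mirrors the `Labels` list (entries with `Name` and a 0-100 `Confidence`) that Amazon Rekognition `detect_labels` returns per image; the thresholds and function name are assumptions. Reading an on-screen error code would more likely use Rekognition text detection, but the same voting idea applies.

```python
from collections import Counter
from typing import Dict, List


def consistent_labels(frames: List[List[Dict]], min_confidence: float = 80.0,
                      min_fraction: float = 0.5) -> List[str]:
    """Hypothetical helper: labels seen confidently in at least `min_fraction` of frames.

    Each frame is a list of {"Name": str, "Confidence": float} dicts.
    """
    if not frames:
        return []
    counts: Counter = Counter()
    for labels in frames:
        # A label counts at most once per frame.
        names = {label["Name"] for label in labels if label["Confidence"] >= min_confidence}
        counts.update(names)
    needed = min_fraction * len(frames)
    return sorted(name for name, n in counts.items() if n >= needed)
```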

Processing typically takes 3-5 seconds, with SageMaker and Titan embeddings keeping latency low. Integration via API Gateway allows real-time responses in multimodal flows. Enterprises use this for high-volume support in automotive sectors.

No-code setup via Crescendo.ai simplifies configuration. Features include multilingual support and sentiment analysis for better engagement. Handoffs to human agents occur seamlessly when needed.

Document and Data Processing

Document processing extracts insights from PDFs, invoices, and contracts using a RAG architecture. Files are uploaded to Amazon S3 for secure storage, and OpenSearch Service then indexes their content for fast retrieval. Claude 3 Sonnet handles extraction and summarization.

For car purchase contracts or insurance documents, the workflow parses terms, highlights key clauses, and flags discrepancies. A customer uploads a vehicle lease agreement, and the chatbot summarizes payments, fees, and obligations. This aids decision-making in real time.
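
Flagging discrepancies can combine LLM extraction with deterministic arithmetic checks. The sketch assumes the model has already extracted the lease terms into a dictionary with hypothetical keys (`monthly_payment`, `term_months`, `due_at_signing`, `stated_total`); it recomputes the total and flags a mismatch beyond a small tolerance. Real leases include more components (taxes, fees, residual value), so this formula is deliberately simplified.

```python
from decimal import Decimal
from typing import Dict, List


def lease_discrepancies(terms: Dict[str, str],
                        tolerance: Decimal = Decimal("1.00")) -> List[str]:
    """Hypothetical check: stated total vs. monthly payment x term + amount due at signing."""
    issues: List[str] = []
    monthly = Decimal(terms["monthly_payment"])  # Decimal avoids float rounding on money
    months = int(terms["term_months"])
    at_signing = Decimal(terms.get("due_at_signing", "0"))
    stated = Decimal(terms["stated_total"])
    if months <= 0:
        issues.append("lease term must be positive")
    computed = monthly * months + at_signing
    if abs(computed - stated) > tolerance:
        issues.append(f"stated total {stated} differs from computed {computed}")
    return issues
```

The chatbot can then show any returned issues next to the summary, leaving the interpretation of the clause to the customer or an agent.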

No-code setup involves these steps:

- Configure S3 bucket via console for uploads.

- Set up OpenSearch indexing with Lambda triggers.

- Integrate Claude Sonnet through Bedrock for analysis.

- Test with sample documents for accuracy.
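
The Lambda trigger in step 2 receives an S3 event notification. A minimal sketch of two pieces it needs: pulling the bucket and key out of the event (object keys arrive URL-encoded) and splitting extracted text into overlapping chunks before embedding and indexing. The function names, chunk size, and overlap are illustrative assumptions, and sizes are measured in characters rather than tokens for simplicity.

```python
from typing import Iterator, List, Tuple
from urllib.parse import unquote_plus


def s3_objects(event: dict) -> Iterator[Tuple[str, str]]:
    """Yield (bucket, key) for each record in an S3 event notification."""
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in the event (spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        yield bucket, key


def chunk_text(text: str, size: int = 1000, overlap: int = 200) -> List[str]:
    """Split text into windows of `size` characters that overlap by `overlap`."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    if not text:
        return []
    step = size - overlap
    # Stop once the remaining text is already covered by the previous window.
    return [text[start:start + size]
            for start in range(0, max(len(text) - overlap, 1), step)]
```

Overlap keeps a clause that straddles a chunk boundary retrievable from at least one chunk.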

Vector databases store embeddings for precise queries. Features like analytics and security ensure compliance in enterprise use. Low-maintenance operations support ticket management and omnichannel integration.

User Experience Design

Superior UX design makes multimodal chatbots feel intuitive and human-like across all interaction channels. It focuses on smooth transitions between text, voice, and images. This ensures omnichannel customer support remains consistent and efficient.

Design principles emphasize context retention during modality switches. Users expect the AI chatbot to remember prior inputs without repetition. Intelligent handling of session states prevents frustration in high-volume scenarios.

For example, a customer discussing car listings via text can switch to voice for details without repeating themselves. Amazon Bedrock provides the underlying models, such as Claude Sonnet for language and Titan for embeddings. This creates a natural flow in ticket management.

Omnichannel integration with SMS, WhatsApp, and web chat enhances accessibility. Key chatbot UX design practices emphasize such seamless experiences across channels. No-code setup options via Lambda and CloudFormation simplify deployment. The result is cost-efficiency and low-maintenance customer support.

Seamless Modality Switching

Seamless modality switching allows users to transition between text, voice, and images without losing context. Session state stored in Amazon DynamoDB tracks interactions across channels. This supports omnichannel integration with phone, web, SMS, and WhatsApp.
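
A session record needs little more than a stable session ID, the per-channel turn history, and an expiry time. The sketch keeps it in a Python dict as a stand-in for a DynamoDB table; the item shape (`session_id` as the key, `expires_at` as integer epoch seconds for DynamoDB's TTL feature) is an assumption about how you might model it, not a required schema.

```python
import time
from typing import Dict, List, Optional


class SessionStore:
    """Hypothetical in-memory stand-in for a DynamoDB session table keyed by session_id."""

    def __init__(self, ttl_seconds: int = 3600) -> None:
        self.ttl_seconds = ttl_seconds
        self._items: Dict[str, dict] = {}

    def record_turn(self, session_id: str, channel: str, modality: str,
                    content: str, now: Optional[float] = None) -> dict:
        now = time.time() if now is None else now
        item = self._items.setdefault(session_id, {"session_id": session_id, "turns": []})
        item["turns"].append({"channel": channel, "modality": modality, "content": content})
        # Integer epoch seconds, the attribute format DynamoDB TTL expects.
        item["expires_at"] = int(now) + self.ttl_seconds
        return item

    def history(self, session_id: str, now: Optional[float] = None) -> List[dict]:
        now = time.time() if now is None else now
        item = self._items.get(session_id)
        # Check expiry on read as well: DynamoDB TTL does not delete items immediately.
        if item is None or item["expires_at"] <= now:
            return []
        return item["turns"]
```

Because every channel writes to the same session ID, a conversation started on web chat and continued by phone returns one combined history.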

Implementation uses API Gateway for routing inputs to the right LLMs. For senior customers, text-to-voice handoff activates automatically based on sentiment analysis. The chatbot maintains conversation flow during the switch.
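
The automatic text-to-voice handoff could be a small rule over the customer profile and the latest sentiment score. Everything here is hypothetical: the `is_senior` and `voice_available` flags stand in for however those attributes are determined, and the -0.5 cutoff on a score from -1 to 1 is illustrative.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    is_senior: bool          # hypothetical profile flag
    voice_available: bool    # hypothetical: e.g. a phone number is on file


def next_modality(customer: Customer, current: str, sentiment_score: float,
                  frustration_cutoff: float = -0.5) -> str:
    """Switch a senior customer's text chat to voice when sentiment turns negative.

    `sentiment_score` is assumed to range from -1 (very negative) to 1.
    """
    if (current == "text" and customer.is_senior and customer.voice_available
            and sentiment_score <= frustration_cutoff):
        return "voice"
    return current
```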

Consider a user uploading an image of a car issue mid-voice call. The system processes it via RAG with OpenSearch Service for quick insights. Agent-assist features from Crescendo.ai ensure smooth handoffs.

This design reduces latency in multilingual support. White-label customization fits brand needs. Overall, it boosts user satisfaction in diverse scenarios like high-volume ticket management.

Contextual Awareness

Contextual awareness maintains conversation history and personalizes responses across sessions. A vector database like OpenSearch stores embeddings of past interactions. This enables the multimodal chatbot to recall details effortlessly.

For instance, a user says, “Remember that Jeep issue?” The system retrieves relevant history using Titan embeddings. SageMaker fine-tunes models for accurate context matching in customer support.
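
Recalling "that Jeep issue" amounts to a nearest-neighbor search over embeddings of past interactions. The sketch does it in memory with cosine similarity; the three-dimensional vectors are toy stand-ins for real Titan embeddings (which are much higher-dimensional), the function names are hypothetical, and in production the search would run in OpenSearch rather than in Python.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recall(query_vec: Sequence[float],
           memory: List[Tuple[str, Sequence[float]]],
           k: int = 1, min_score: float = 0.5) -> List[str]:
    """Return up to k remembered snippets most similar to the query embedding."""
    scored = [(cosine(query_vec, vec), text) for text, vec in memory]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for score, text in scored[:k] if score >= min_score]
```

The `min_score` floor lets the bot answer "I don't have a record of that" instead of surfacing an unrelated memory.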

Privacy-compliant memory management deletes data after sessions or on request. Security features protect sensitive info during analytics. This balances personalization with compliance in artificial intelligence applications.
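
Privacy-compliant memory management needs at least two operations: expiring memories after a retention window and erasing everything for one user on request. A minimal in-memory sketch; the `Memory` and `MemoryStore` names and the 30-day default are assumptions, and a real system would also have to remove the same records from the vector index.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Memory:
    user_id: str
    text: str
    created_at: float  # epoch seconds


@dataclass
class MemoryStore:
    """Hypothetical store with time-based expiry and per-user erasure."""
    retention_seconds: float = 30 * 24 * 3600  # illustrative 30-day retention
    items: List[Memory] = field(default_factory=list)

    def add(self, memory: Memory) -> None:
        self.items.append(memory)

    def purge_expired(self, now: float) -> int:
        """Drop memories older than the retention window; return how many were removed."""
        before = len(self.items)
        self.items = [m for m in self.items if now - m.created_at < self.retention_seconds]
        return before - len(self.items)

    def forget_user(self, user_id: str) -> int:
        """Erase every memory for one user, e.g. on a deletion request."""
        before = len(self.items)
        self.items = [m for m in self.items if m.user_id != user_id]
        return before - len(self.items)
```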

Integration with Bedrock and Lambda powers real-time sentiment analysis. Experts recommend testing with varied queries for robust performance. The outcome is intuitive experiences in omnichannel environments.

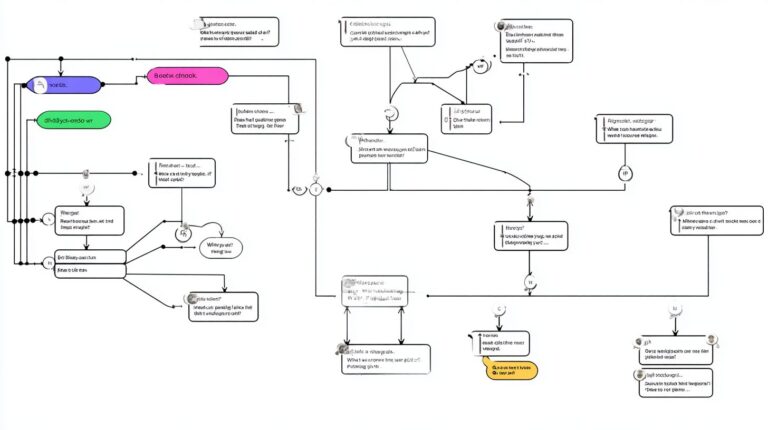

Technical Architecture

Crescendo.ai’s technical architecture leverages AWS services for scalable, secure multimodal chatbot deployment. The flow starts with Amazon API Gateway handling incoming requests from various channels. It then routes to Lambda functions for processing.

From Lambda, queries go to Amazon Bedrock, which serves Claude 3 Sonnet or Titan models for advanced language understanding. These LLMs work with an OpenSearch-based RAG layer that retrieves relevant content using vector embeddings, and DynamoDB provides fast, reliable data storage.
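
With an API Gateway proxy integration, the Lambda function receives the HTTP body as a string in `event["body"]` and must return a dict with `statusCode` and a string `body`. The sketch below shows that contract; `answer_question` is a hypothetical placeholder for the OpenSearch retrieval and Bedrock generation steps, stubbed so the handler runs locally, and base64-encoded request bodies are not handled.

```python
import json
from typing import Any, Dict


def answer_question(session_id: str, text: str) -> str:
    """Hypothetical placeholder for OpenSearch retrieval + Bedrock generation."""
    return f"[{session_id}] You asked: {text}"


def _response(status: int, payload: Dict[str, Any]) -> Dict[str, Any]:
    # Proxy integrations expect statusCode, optional headers, and a string body.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    try:
        payload = json.loads(event.get("body") or "{}")
        session_id = payload["session_id"]
        text = payload["text"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return _response(400, {"error": "expected JSON with session_id and text"})
    return _response(200, {"reply": answer_question(session_id, text)})
```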

No-code setup uses CloudFormation templates, enabling quick deployment without deep coding skills. Primary region is us-east-1, with us-west-2 for disaster recovery. This ensures high-volume support like car listings or ticket management.
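
With us-east-1 as primary and us-west-2 for disaster recovery, the client side of failover can be as simple as trying regions in order. In this sketch `call_region` is a hypothetical callable that would create a client for the given region (for example `boto3.client("bedrock-runtime", region_name=region)`) and make the request; production failover is usually driven by health checks and DNS routing rather than per-request retries alone.

```python
from typing import Callable, Sequence, Tuple, TypeVar

T = TypeVar("T")

REGIONS = ("us-east-1", "us-west-2")  # primary first, then disaster recovery


def call_with_failover(call_region: Callable[[str], T],
                       regions: Sequence[str] = REGIONS) -> Tuple[str, T]:
    """Try each region in order; return (region, result) from the first success."""
    errors = []
    for region in regions:
        try:
            return region, call_region(region)
        except Exception as exc:  # in practice, catch specific botocore errors
            errors.append(f"{region}: {exc}")
    raise RuntimeError("all regions failed: " + "; ".join(errors))
```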

Security meets SOC 2, GDPR, and HIPAA standards through encryption and access controls. Serverless Lambda scaling drives cost-efficiency by auto-adjusting to demand. Low latency supports real-time sentiment analysis and multilingual responses.

Challenges and Limitations

Despite advances, multimodal chatbots face technical hurdles that require thoughtful mitigation strategies. These systems process text, images, and video, but this complexity introduces delays and errors. Developers must address these issues for reliable customer support.

One key challenge is latency in video processing, where analyzing user-uploaded clips takes time on central servers. This slows down responses in real-time chats. Edge caching helps by storing processed data closer to users, reducing wait times.

Another issue involves hallucinations in multimodal responses, where AI generates inaccurate details from images or videos. Retrieval-Augmented Generation, or RAG, mitigates this by pulling verified data from sources like Amazon OpenSearch Service. This grounds outputs in facts for better accuracy.

High-volume scaling strains resources during peak traffic, such as omnichannel campaigns. AWS Lambda auto-scaling dynamically adjusts compute capacity. Complex handoffs to human agents also demand seamless integration for smooth transitions.

Latency in Video Processing

Video inputs challenge multimodal chatbots due to heavy computation needs for features like object detection. Users expect quick replies, but cloud processing adds seconds or minutes. This disrupts fluid conversations in customer support.

Edge caching offers a practical fix by pre-processing and storing video metadata at edge locations. For example, a chatbot handling car listings can cache common analysis results. This cuts latency significantly without full re-computation.
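
The choice of cache key matters: hashing the uploaded video bytes means identical clips (for example the same listing video requested by many users) hit the same entry. A minimal in-process sketch with a time-to-live; `AnalysisCache` is hypothetical, `analyze` stands in for the expensive video-analysis call, and a real edge deployment would use a shared cache rather than a Python dict.

```python
import hashlib
import time
from typing import Callable, Dict, Optional, Tuple


class AnalysisCache:
    """Hypothetical cache of video-analysis results keyed by SHA-256 of the bytes."""

    def __init__(self, analyze: Callable[[bytes], dict], ttl_seconds: float = 3600) -> None:
        self.analyze = analyze
        self.ttl_seconds = ttl_seconds
        self._entries: Dict[str, Tuple[float, dict]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, video: bytes, now: Optional[float] = None) -> dict:
        now = time.time() if now is None else now
        key = hashlib.sha256(video).hexdigest()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl_seconds:
            self.hits += 1
            return entry[1]
        self.misses += 1
        result = self.analyze(video)  # expensive path: run the full analysis
        self._entries[key] = (now, result)
        return result
```

`cache.hits / (cache.hits + cache.misses)` gives the hit rate to watch when tuning the TTL against sample videos.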

Pair the cache with Amazon SageMaker endpoints for model inference on cache misses, and with API Gateway for efficient routing. The result: faster responses that maintain user engagement.

Teams using CloudFormation can deploy these setups quickly. Test with sample videos to fine-tune cache hit rates. This approach ensures low-maintenance performance even in high-volume scenarios.

Hallucinations in Multimodal Responses

Hallucinations occur when language models like Claude Sonnet or Titan invent details from ambiguous visuals. A chatbot might misdescribe an image in a support ticket. This erodes trust in AI agents.

RAG mitigation counters this by retrieving relevant context from a vector database before generation. Embeddings from user queries match against stored data in OpenSearch. Outputs stay factual and context-aware.
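
Grounding works by placing the retrieved passages in the prompt and instructing the model to answer only from them. The sketch assumes the retrieval step has already returned passages from OpenSearch as (source, text) pairs; the function name and prompt wording are illustrative assumptions, and numbering the sources lets the reply cite what it used.

```python
from typing import List, Tuple


def grounded_prompt(question: str, passages: List[Tuple[str, str]],
                    image_description: str = "") -> str:
    """Hypothetical RAG prompt builder that restricts the answer to retrieved context."""
    context = "\n".join(f"[{i}] ({source}) {text}"
                        for i, (source, text) in enumerate(passages, start=1))
    parts = [
        "Answer the customer's question using only the numbered context below.",
        "Cite sources like [1]. If the context does not contain the answer, say so.",
        "",
        "Context:",
        context or "(no context retrieved)",
    ]
    if image_description:
        # Label model-generated image text as possibly incomplete.
        parts += ["", "Image description (may be incomplete): " + image_description]
    parts += ["", "Question: " + question]
    return "\n".join(parts)
```

Telling the model to say when the context lacks an answer gives it a sanctioned alternative to inventing one.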

For multimodal use, pair LLMs with Bedrock for secure access to models. Analyze sentiment alongside visuals for nuanced replies. This setup supports multilingual interactions too.

Practical tip: Index domain-specific data like product catalogs in your RAG pipeline. Regularly update the knowledge base to handle evolving content. This builds reliable no-code chatbot features.

High-Volume Scaling

During traffic spikes, high-volume demands overwhelm multimodal chatbots. Features like sentiment analysis on images and text multiply resource use. Standard servers fail to keep up.

Lambda auto-scaling resolves this by spinning up functions on demand. Integrate with SageMaker endpoints for AI tasks. This ensures cost-efficiency and handles surges in ticket management.
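
Capacity planning for Lambda follows a rule AWS documents: required concurrency is roughly the average request rate multiplied by the average function duration. The numbers below (200 requests per second at 1.5 seconds each during a sales event) are illustrative, as is the comparison against a 1,000 concurrent-execution limit, a common regional default that you should confirm for your own account.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_seconds: float) -> int:
    """Little's-law estimate: executions in flight = arrival rate x time per request."""
    return math.ceil(requests_per_second * avg_duration_seconds)


def headroom(requests_per_second: float, avg_duration_seconds: float,
             account_limit: int = 1000) -> int:
    """Concurrent executions left under the limit; negative means throttling is likely."""
    return account_limit - required_concurrency(requests_per_second, avg_duration_seconds)
```

For example, 200 requests per second at 1.5 seconds each needs about 300 concurrent executions, leaving 700 under a 1,000 limit; at 800 requests per second the same workload needs 1,200 and would be throttled.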

Use analytics to monitor usage patterns. For omnichannel setups, route requests via API Gateway. White-label options allow customization without scaling worries.

Example: A support agent for car listings scales effortlessly during sales events. Deploy via CloudFormation for repeatable infrastructure. This maintains security and performance.

Complex Handoffs

Complex handoffs arise when chatbots escalate to humans, especially with multimodal data like videos. Sharing full context, such as embeddings or analysis, proves tricky. Poor transitions frustrate customers.

Solve this with structured integration using Amazon Bedrock agents. Pass conversation history, images, and RAG-retrieved facts seamlessly. Tools like Crescendo.ai simplify this for agent-assist workflows.

Enable multilingual handoffs with model support for various languages. Include analytics summaries for agents. This speeds resolution in ticket management.
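
A handoff goes more smoothly when the bot sends the agent one structured package instead of a raw transcript. The `Handoff` class and its field names are hypothetical; attachments are passed as S3 URIs rather than raw bytes so large images and videos are not copied into the ticket, and only the last few turns are included verbatim next to the bot's summary.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Handoff:
    """Hypothetical escalation package for a human agent."""
    ticket_id: str
    language: str            # e.g. "es", so routing can pick a matching agent
    summary: str             # bot-written summary for the agent
    sentiment: str           # latest sentiment label
    recent_turns: List[dict] = field(default_factory=list)
    attachments: List[str] = field(default_factory=list)      # S3 URIs, not raw bytes
    retrieved_facts: List[str] = field(default_factory=list)  # RAG results shown to the user

    def to_json(self, max_turns: int = 5) -> str:
        data = asdict(self)
        # Guard max_turns == 0: list[-0:] would return every turn.
        data["recent_turns"] = self.recent_turns[-max_turns:] if max_turns > 0 else []
        return json.dumps(data, ensure_ascii=False)
```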

Test handoffs in simulations to refine prompts. Use low-maintenance setups with serverless components. Result: efficient collaboration between AI and humans.

Future Directions

The future of multimodal chatbots points toward fully agentic systems with real-time video and autonomous workflows. These advancements will enable chatbots to handle complex tasks like coordinating with multiple LLMs for seamless customer interactions. Experts recommend focusing on integrations that enhance car listings visualization.

Real-time video collaboration emerges as a key trend, allowing users to share live feeds during consultations. For instance, a customer could stream a video of their vehicle for instant sentiment analysis and advice from the chatbot. This feature reduces latency in high-volume ticket management.

AR/VR integration will transform car visualization, letting users virtually inspect vehicles through augmented overlays. Combined with agentic workflows, chatbots can chain language models like Claude Sonnet or Titan for detailed multilingual support. Crescendo.ai’s roadmap hints at these capabilities, promising no-code setup for such enhancements.

White-label customization opportunities will allow businesses to tailor these AI agents for omnichannel experiences. Integration with services like Amazon Bedrock, Lambda, and OpenSearch ensures cost-efficiency and low-maintenance deployment. This positions multimodal chatbots as central to future customer support.

Frequently Asked Questions

What are Multimodal Chatbots?

Multimodal chatbots are advanced AI systems that process and respond to multiple input types, such as text, images, audio, and video, providing a richer and more intuitive user interaction. Key features include seamless integration of modalities, context-aware responses, and natural, human-like conversations across formats.

What are the core features of Multimodal Chatbots?

The core features of multimodal chatbots include handling diverse inputs: text for queries, images for visual analysis, voice for speech recognition, and even video for dynamic interactions. These enable capabilities such as image captioning, visual question answering, and multimodal reasoning, delivering a more immersive experience.

How do Multimodal Chatbots improve user experience?

Multimodal chatbots enhance user interactions by supporting natural communication channels and removing the limits of text-only input. Users can upload photos for instant analysis, speak commands, or combine inputs, which leads to faster, more accurate responses and a more engaging, personalized experience.

What technologies power Multimodal Chatbots?

Multimodal chatbots are powered by large multimodal models (LMMs) such as GPT-4o or Gemini, which combine transformer language models, vision encoders for images, and speech processing. These models fuse data streams into coherent outputs, enabling features such as real-time translation across modalities and context retention for fluid conversations.

What are real-world applications of Multimodal Chatbots?

Real-world applications of multimodal chatbots include customer support with image-based troubleshooting, virtual assistants that analyze photos for shopping advice, educational tools that explain diagrams via voice and text, and healthcare bots that interpret medical images alongside patient queries.

What challenges exist in Multimodal Chatbots?

Challenges include aligning data across modalities, high computational demands, privacy concerns with multimedia inputs, and ensuring unbiased responses. Ongoing advances in efficient models and ethical training are addressing these issues to keep user experiences high-quality and reliable.